2024년 2월 2일

Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research

(Luca Soldaini, Rodney Kinney, Akshita Bhagia, Dustin Schwenk, David Atkinson, Russell Authur, Ben Bogin, Khyathi Chandu, Jennifer Dumas, Yanai Elazar, Valentin Hofmann, Ananya Harsh Jha, Sachin Kumar, Li Lucy, Xinxi Lyu, Nathan Lambert, Ian Magnusson, Jacob Morrison, Niklas Muennighoff, Aakanksha Naik, Crystal Nam, Matthew E. Peters, Abhilasha Ravichander, Kyle Richardson, Zejiang Shen, Emma Strubell, Nishant Subramani, Oyvind Tafjord, Pete Walsh, Luke Zettlemoyer, Noah A. Smith, Hannaneh Hajishirzi, Iz Beltagy, Dirk Groeneveld, Jesse Dodge, Kyle Lo)

Language models have become a critical technology to tackling a wide range of natural language processing tasks, yet many details about how the best-performing language models were developed are not reported. In particular, information about their pretraining corpora is seldom discussed: commercial language models rarely provide any information about their data; even open models rarely release datasets they are trained on, or an exact recipe to reproduce them. As a result, it is challenging to conduct certain threads of language modeling research, such as understanding how training data impacts model capabilities and shapes their limitations. To facilitate open research on language model pretraining, we release Dolma, a three trillion tokens English corpus, built from a diverse mixture of web content, scientific papers, code, public-domain books, social media, and encyclopedic materials. In addition, we open source our data curation toolkit to enable further experimentation and reproduction of our work. In this report, we document Dolma, including its design principles, details about its construction, and a summary of its contents. We interleave this report with analyses and experimental results from training language models on intermediate states of Dolma to share what we have learned about important data curation practices, including the role of content or quality filters, deduplication, and multi-source mixing. Dolma has been used to train OLMo, a state-of-the-art, open language model and framework designed to build and study the science of language modeling.

이전에 공개됐었던 Dolma (https://blog.allenai.org/dolma-3-trillion-tokens-open-llm-corpus-9a0ff4b8da64) 데이터셋에 대한 리포트. 데이터셋에 대해 이렇게 상세하게 이야기하는 사례가 이제 극히 드물다는 것을 고려하면 흥미롭습니다.

블로그에서도 나와 있었던 부분이지만 CCNet 기반으로 모델 기반 필터링 없이 Gopher의 휴리스틱과 C4의 구두점으로 끝나지 않는 문단을 날리는 필터링을 하고 컨텐츠 필터링, URL/문서/문단 레벨 Exact Deduplication을 했습니다. 사실 이 개별 선택들이 약간씩 특이한 점이 있는데 그에 대한 일부 근거들이 제시되어 있습니다.

CCNet vs Trafilatura 같은 Extractor의 비교, 모델 기반 필터링 vs 휴리스틱, Fuzzy Deduplication 등등이 궁금하긴 합니다만 이건 직접 해보는 수밖에 없겠군요.

별개의 이야기지만 웹 이외의 데이터셋을 보다 대규모로 확충하는 것이 필요하다는 생각도 있습니다. 어렵지만요.

#dataset #corpus

OLMo: Accelerating the Science of Language Models

(Dirk Groeneveld, Iz Beltagy, Pete Walsh, Akshita Bhagia, Rodney Kinney, Oyvind Tafjord, Ananya Harsh Jha, Hamish Ivison, Ian Magnusson, Yizhong Wang, Shane Arora, David Atkinson, Russell Authur, Khyathi Raghavi Chandu, Arman Cohan, Jennifer Dumas, Yanai Elazar, Yuling Gu, Jack Hessel, Tushar Khot, William Merrill, Jacob Morrison, Niklas Muennighoff, Aakanksha Naik, Crystal Nam, Matthew E. Peters, Valentina Pyatkin, Abhilasha Ravichander, Dustin Schwenk, Saurabh Shah, Will Smith, Emma Strubell, Nishant Subramani, Mitchell Wortsman, Pradeep Dasigi, Nathan Lambert, Kyle Richardson, Luke Zettlemoyer, Jesse Dodge, Kyle Lo, Luca Soldaini, Noah A. Smith, Hannaneh Hajishirzi)

Language models (LMs) have become ubiquitous in both NLP research and in commercial product offerings. As their commercial importance has surged, the most powerful models have become closed off, gated behind proprietary interfaces, with important details of their training data, architectures, and development undisclosed. Given the importance of these details in scientifically studying these models, including their biases and potential risks, we believe it is essential for the research community to have access to powerful, truly open LMs. To this end, this technical report details the first release of OLMo, a state-of-the-art, truly Open Language Model and its framework to build and study the science of language modeling. Unlike most prior efforts that have only released model weights and inference code, we release OLMo and the whole framework, including training data and training and evaluation code. We hope this release will empower and strengthen the open research community and inspire a new wave of innovation.

Dolma 코퍼스로 학습한 7B 모델. 2.5T 학습했고 성능은 전반적으로 다른 7B 모델들과 비슷해 보입니다. 주요 강조점 중 하나가 코드와 체크포인트 등을 모두 공개했다는 것이네요. 다만 학습은 FSDP만으로 한 것 같군요.

재미있는 것은 A100 뿐만 아니라 1,000대 규모의 AMD MI250X로도 학습을 시켜봤다는 것이네요. 문제 없이 됐다고 합니다.

#llm

Dense Reward for Free in Reinforcement Learning from Human Feedback

(Alex J. Chan, Hao Sun, Samuel Holt, Mihaela van der Schaar)

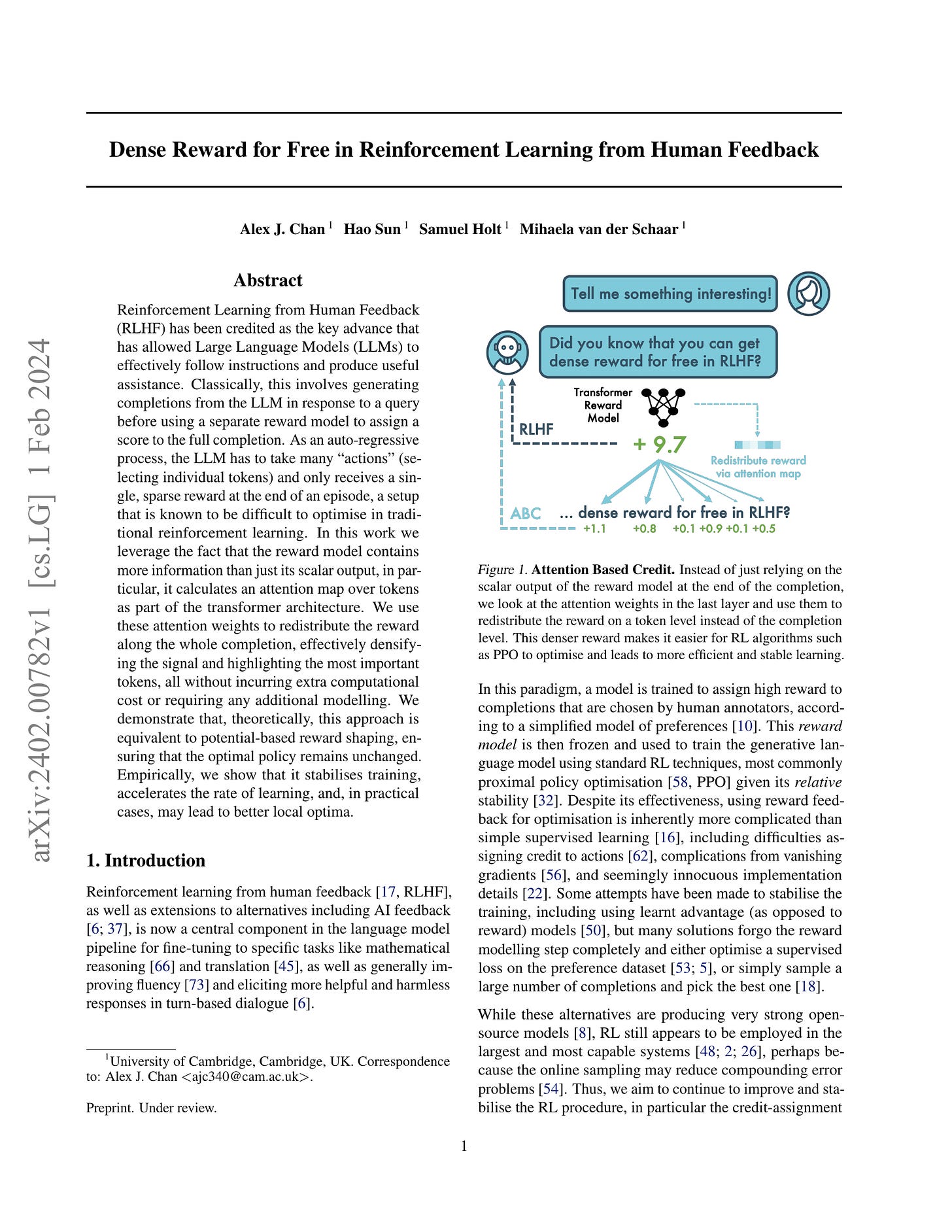

Reinforcement Learning from Human Feedback (RLHF) has been credited as the key advance that has allowed Large Language Models (LLMs) to effectively follow instructions and produce useful assistance. Classically, this involves generating completions from the LLM in response to a query before using a separate reward model to assign a score to the full completion. As an auto-regressive process, the LLM has to take many "actions" (selecting individual tokens) and only receives a single, sparse reward at the end of an episode, a setup that is known to be difficult to optimise in traditional reinforcement learning. In this work we leverage the fact that the reward model contains more information than just its scalar output, in particular, it calculates an attention map over tokens as part of the transformer architecture. We use these attention weights to redistribute the reward along the whole completion, effectively densifying the signal and highlighting the most important tokens, all without incurring extra computational cost or requiring any additional modelling. We demonstrate that, theoretically, this approach is equivalent to potential-based reward shaping, ensuring that the optimal policy remains unchanged. Empirically, we show that it stabilises training, accelerates the rate of learning, and, in practical cases, may lead to better local optima.

Reward 모델의 Attention Weight를 사용해 Sparse Reward를 토큰들에 나눠 Dense하게 만든다는 아이디어. 재미있네요.

#reward-model #rlhf

Towards Efficient and Exact Optimization of Language Model Alignment

(Haozhe Ji, Cheng Lu, Yilin Niu, Pei Ke, Hongning Wang, Jun Zhu, Jie Tang, Minlie Huang)

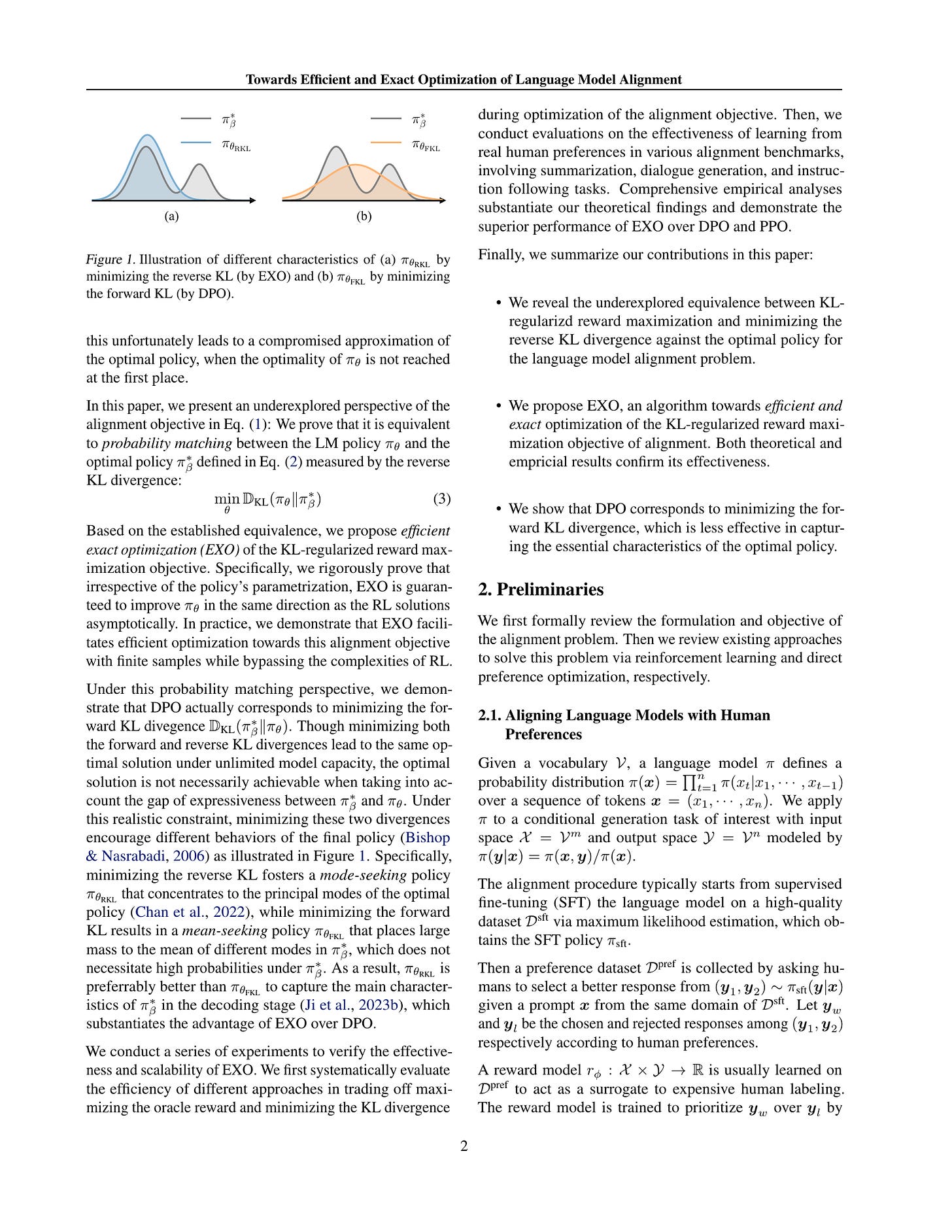

The alignment of language models with human preferences is vital for their application in real-world tasks. The problem is formulated as optimizing the model's policy to maximize the expected reward that reflects human preferences with minimal deviation from the initial policy. While considered as a straightforward solution, reinforcement learning (RL) suffers from high variance in policy updates, which impedes efficient policy improvement. Recently, direct preference optimization (DPO) was proposed to directly optimize the policy from preference data. Though simple to implement, DPO is derived based on the optimal policy that is not assured to be achieved in practice, which undermines its convergence to the intended solution. In this paper, we propose efficient exact optimization (EXO) of the alignment objective. We prove that EXO is guaranteed to optimize in the same direction as the RL algorithms asymptotically for arbitary parametrization of the policy, while enables efficient optimization by circumventing the complexities associated with RL algorithms. We compare our method to DPO with both theoretical and empirical analyses, and further demonstrate the advantages of our method over existing approaches on realistic human preference data.

DPO + Reverse KL + Reward Model을 통해 K개 샘플을 사용이라는 느낌이군요.

#rlhf

Transforming and Combining Rewards for Aligning Large Language Models

(Zihao Wang, Chirag Nagpal, Jonathan Berant, Jacob Eisenstein, Alex D'Amour, Sanmi Koyejo, Victor Veitch)

A common approach for aligning language models to human preferences is to first learn a reward model from preference data, and then use this reward model to update the language model. We study two closely related problems that arise in this approach. First, any monotone transformation of the reward model preserves preference ranking; is there a choice that is "better" than others? Second, we often wish to align language models to multiple properties: how should we combine multiple reward models? Using a probabilistic interpretation of the alignment procedure, we identify a natural choice for transformation for (the common case of) rewards learned from Bradley-Terry preference models. This derived transformation has two important properties. First, it emphasizes improving poorly-performing outputs, rather than outputs that already score well. This mitigates both underfitting (where some prompts are not improved) and reward hacking (where the model learns to exploit misspecification of the reward model). Second, it enables principled aggregation of rewards by linking summation to logical conjunction: the sum of transformed rewards corresponds to the probability that the output is ``good'' in all measured properties, in a sense we make precise. Experiments aligning language models to be both helpful and harmless using RLHF show substantial improvements over the baseline (non-transformed) approach.

Reward Score에 대한 적절한 단조 변환을 설정할 수 있는가? 그리고 여러 Reward Model을 어떻게 결합할 수 있는가? 라는 문제입니다. 여기서 찾아낸 방법은 logsigmoid와 베이스라인 스코어를 사용하는 방법입니다.

베이스라인을 찾아내는 것이 중요한데 이건 샘플을 여러 개 뽑아서 스코어의 85분위수를 쓰는 방식이나 응답 거부의 스코어를 쓰는 방법을 사용했습니다. 샘플을 여러 개 뽑고 그 샘플들의 Reward 스코어 분포를 본다는 것 자체가 흥미로운 의미를 갖는 것이 아닌가 하는 생각이 드네요.

#reward-model #rlhf

Efficient Exploration for LLMs

(Vikranth Dwaracherla, Seyed Mohammad Asghari, Botao Hao, Benjamin Van Roy)

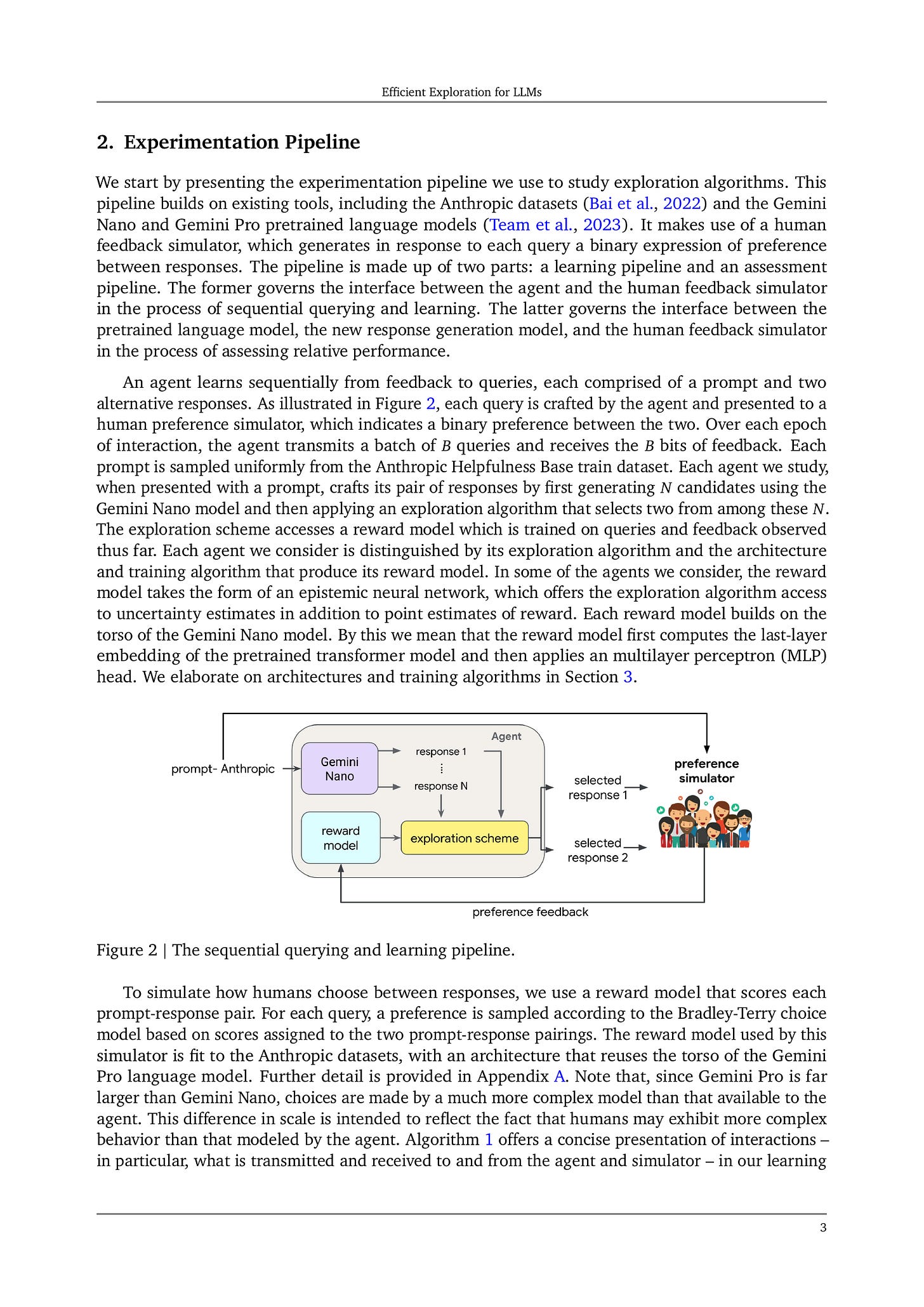

We present evidence of substantial benefit from efficient exploration in gathering human feedback to improve large language models. In our experiments, an agent sequentially generates queries while fitting a reward model to the feedback received. Our best-performing agent generates queries using double Thompson sampling, with uncertainty represented by an epistemic neural network. Our results demonstrate that efficient exploration enables high levels of performance with far fewer queries. Further, both uncertainty estimation and the choice of exploration scheme play critical roles.

Preference 어노테이션을 할 페어를 어떻게 샘플링할 것인가? 그냥 두 개 뽑는 대신 Reward Model, 특히 Epistemic Neural Networks (https://arxiv.org/abs/2107.08924) 를 적용한 Reward Model을 사용해 Reward Score가 높거나 혹은 Uncertanity가 높은 샘플을 쓴다는 방식입니다. 이것도 일종의 Active Learning이라고 할 수 있겠죠.

여담이지만 구글이 실험에 제미니를 쓰기 시작했군요.

#reward-model #active-learning