December 6, 2024

NVILA: Efficient Frontier Visual Language Models

(Zhijian Liu, Ligeng Zhu, Baifeng Shi, Zhuoyang Zhang, Yuming Lou, Shang Yang, Haocheng Xi, Shiyi Cao, Yuxian Gu, Dacheng Li, Xiuyu Li, Yunhao Fang, Yukang Chen, Cheng-Yu Hsieh, De-An Huang, An-Chieh Cheng, Vishwesh Nath, Jinyi Hu, Sifei Liu, Ranjay Krishna, Daguang Xu, Xiaolong Wang, Pavlo Molchanov, Jan Kautz, Hongxu Yin, Song Han, Yao Lu)

Visual language models (VLMs) have made significant advances in accuracy in recent years. However, their efficiency has received much less attention. This paper introduces NVILA, a family of open VLMs designed to optimize both efficiency and accuracy. Building on top of VILA, we improve its model architecture by first scaling up the spatial and temporal resolutions, and then compressing visual tokens. This "scale-then-compress" approach enables NVILA to efficiently process high-resolution images and long videos. We also conduct a systematic investigation to enhance the efficiency of NVILA throughout its entire lifecycle, from training and fine-tuning to deployment. NVILA matches or surpasses the accuracy of many leading open and proprietary VLMs across a wide range of image and video benchmarks. At the same time, it reduces training costs by 4.5X, fine-tuning memory usage by 3.4X, pre-filling latency by 1.6-2.2X, and decoding latency by 1.2-2.8X. We will soon make our code and models available to facilitate reproducibility.

The model raises the spatial and temporal resolution of image and video inputs, then offsets the resulting growth in token count by folding neighboring spatial tokens into the channel dimension along the spatial axes and by pooling along the temporal axis.
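The two compression steps can be made concrete with a minimal pure-Python sketch on toy token grids. The 2x2 fold, the pooling window of 2 frames, and the helper names (`space_to_channel`, `temporal_pool`, `num_tokens`) are assumptions chosen for illustration. This is not NVILA's code; it only shows the shape arithmetic of "fold space into channels, pool over time".

```python
from typing import List

# One frame of visual tokens laid out as [height][width][channels].
Grid = List[List[List[float]]]


def space_to_channel(grid: Grid, block: int = 2) -> Grid:
    """Fold each block x block patch into one token with block*block*C channels."""
    h, w = len(grid), len(grid[0])
    if h % block or w % block:
        raise ValueError("grid height and width must be divisible by block")
    out: Grid = []
    for i in range(0, h, block):
        row = []
        for j in range(0, w, block):
            merged: List[float] = []
            for di in range(block):
                for dj in range(block):
                    merged.extend(grid[i + di][j + dj])  # concatenate channels
            row.append(merged)
        out.append(row)
    return out


def temporal_pool(frames: List[Grid], window: int = 2) -> List[Grid]:
    """Average each group of `window` consecutive frames; a ragged tail is dropped."""
    pooled: List[Grid] = []
    for start in range(0, len(frames) - window + 1, window):
        group = frames[start:start + window]
        h, w, c = len(group[0]), len(group[0][0]), len(group[0][0][0])
        pooled.append([[[sum(f[i][j][k] for f in group) / window for k in range(c)]
                        for j in range(w)] for i in range(h)])
    return pooled


def num_tokens(frames: List[Grid]) -> int:
    """Total number of tokens across all frames."""
    return sum(len(g) * len(g[0]) for g in frames)


# Toy video: 8 frames of 4x4 tokens with 3 channels; frame t is filled with the value t.
frames = [[[[float(t)] * 3 for _ in range(4)] for _ in range(4)] for t in range(8)]
compressed = temporal_pool([space_to_channel(f) for f in frames])
print(num_tokens(frames), num_tokens(compressed))  # 128 16
print(len(compressed[0][0][0]))  # 12
```

The design split is visible in the numbers: the spatial fold is lossless (it only reshapes, so the token count drops 4x while channel width grows 4x), whereas temporal pooling averages neighboring frames and discards fine temporal detail.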

#vision-language #multimodal #video-language

Liquid: Language Models are Scalable Multi-modal Generators

(Junfeng Wu, Yi Jiang, Chuofan Ma, Yuliang Liu, Hengshuang Zhao, Zehuan Yuan, Song Bai, Xiang Bai)

We present Liquid, an auto-regressive generation paradigm that seamlessly integrates visual comprehension and generation by tokenizing images into discrete codes and learning these code embeddings alongside text tokens within a shared feature space for both vision and language. Unlike previous multimodal large language models (MLLMs), Liquid achieves this integration using a single large language model (LLM), eliminating the need for external pretrained visual embeddings such as CLIP. For the first time, Liquid uncovers a scaling law showing that the performance drop unavoidably brought by the unified training of visual and language tasks diminishes as the model size increases. Furthermore, the unified token space enables visual generation and comprehension tasks to mutually enhance each other, effectively removing the typical interference seen in earlier models. We show that existing LLMs can serve as strong foundations for Liquid, saving 100x in training costs while outperforming Chameleon in multimodal capabilities and maintaining language performance comparable to mainstream LLMs like LLAMA2. Liquid also outperforms models like SD v2.1 and SD-XL (FID of 5.47 on MJHQ-30K), excelling in both vision-language and text-only tasks. This work demonstrates that LLMs such as LLAMA3.2 and GEMMA2 are powerful multimodal generators, offering a scalable solution for enhancing both vision-language understanding and generation. The code and models will be released.

This is another attempt at image generation and recognition that feeds VQ tokens directly to the model as input. Given how quickly VQ tokenizers have been improving, I suspect autoregressive models of this style are now in a better position than before.
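A minimal sketch of how discrete image codes and text tokens can share one token-ID space, assuming a nearest-neighbor VQ lookup and a text vocabulary extended by simple offsetting. The codebook, the text vocabulary size of 32000, the begin/end-of-image boundary tokens, and the text IDs are all hypothetical; Liquid's actual tokenizer and vocabulary layout may differ.

```python
from typing import List, Sequence

Vector = Sequence[float]


def quantize(features: List[Vector], codebook: List[Vector]) -> List[int]:
    """Replace each image feature with the index of its nearest codebook entry (squared L2)."""
    codes = []
    for f in features:
        dists = [sum((a - b) ** 2 for a, b in zip(f, c)) for c in codebook]
        codes.append(min(range(len(codebook)), key=dists.__getitem__))
    return codes


def build_sequence(text_ids: List[int], image_codes: List[int],
                   text_vocab: int, codebook_size: int) -> List[int]:
    """Offset image codes past the text vocabulary and wrap them in boundary tokens."""
    boi = text_vocab + codebook_size  # hypothetical begin-of-image token ID
    eoi = boi + 1                     # hypothetical end-of-image token ID
    return text_ids + [boi] + [text_vocab + c for c in image_codes] + [eoi]


codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
features = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.8]]
codes = quantize(features, codebook)
print(codes)  # [0, 1, 2]
seq = build_sequence([1, 450], codes, text_vocab=32000, codebook_size=len(codebook))
print(seq)  # [1, 450, 32003, 32000, 32001, 32002, 32004]
```

With one shared ID space, a single softmax over all `text_vocab + codebook_size + 2` entries covers both next-text-token and next-image-code prediction, which is what allows one LLM to handle comprehension and generation without a separate visual encoder.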

#multimodal #autoregressive-model #image-generation

The Hyperfitting Phenomenon: Sharpening and Stabilizing LLMs for Open-Ended Text Generation

(Fredrik Carlsson, Fangyu Liu, Daniel Ward, Murathan Kurfali, Joakim Nivre)

This paper introduces the counter-intuitive generalization results of overfitting pre-trained large language models (LLMs) on very small datasets. In the setting of open-ended text generation, it is well-documented that LLMs tend to generate repetitive and dull sequences, a phenomenon that is especially apparent when generating using greedy decoding. This issue persists even with state-of-the-art LLMs containing billions of parameters, trained via next-token prediction on large datasets. We find that by further fine-tuning these models to achieve a near-zero training loss on a small set of samples -- a process we refer to as hyperfitting -- the long-sequence generative capabilities are greatly enhanced. Greedy decoding with these hyperfitted models even outperforms Top-P sampling over long sequences, both in terms of diversity and human preferences. This phenomenon extends to LLMs of various sizes, different domains, and even autoregressive image generation. We further find this phenomenon to be distinctly different from that of Grokking and double descent. Surprisingly, our experiments indicate that hyperfitted models rarely fall into repeating sequences they were trained on, and even explicitly blocking these sequences results in high-quality output. All hyperfitted models produce extremely low-entropy predictions, often allocating nearly all probability to a single token.

The result: if an LM is fine-tuned on a small set of samples until the training loss reaches nearly zero, the quality of text generated with greedy decoding improves. Top-1 token selection gets better while the entropy of the predictions drops sharply. I suspect this is similar to the effect we expect from SFT.
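To illustrate what "low-entropy predictions" means, the sketch below compares a fairly flat next-token distribution with a sharply peaked one. Both logit vectors are made-up numbers, not values from the paper, and the helper names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_bits(probs: List[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def greedy(logits: List[float]) -> int:
    """Greedy decoding: index of the largest logit."""
    return max(range(len(logits)), key=logits.__getitem__)


base_logits = [2.0, 1.5, 1.0, 0.5]        # made-up, fairly flat
hyperfit_logits = [12.0, 1.5, 1.0, 0.5]   # made-up, sharply peaked
for name, logits in [("base", base_logits), ("hyperfit", hyperfit_logits)]:
    probs = softmax(logits)
    print(name, greedy(logits), round(max(probs), 4), round(entropy_bits(probs), 4))
```

The argmax does not have to change for the entropy to collapse: greedy decoding picks token 0 in both cases, but after sharpening nearly all probability mass sits on that one token, matching the "extremely low-entropy" behavior the abstract describes.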

#autoregressive-model

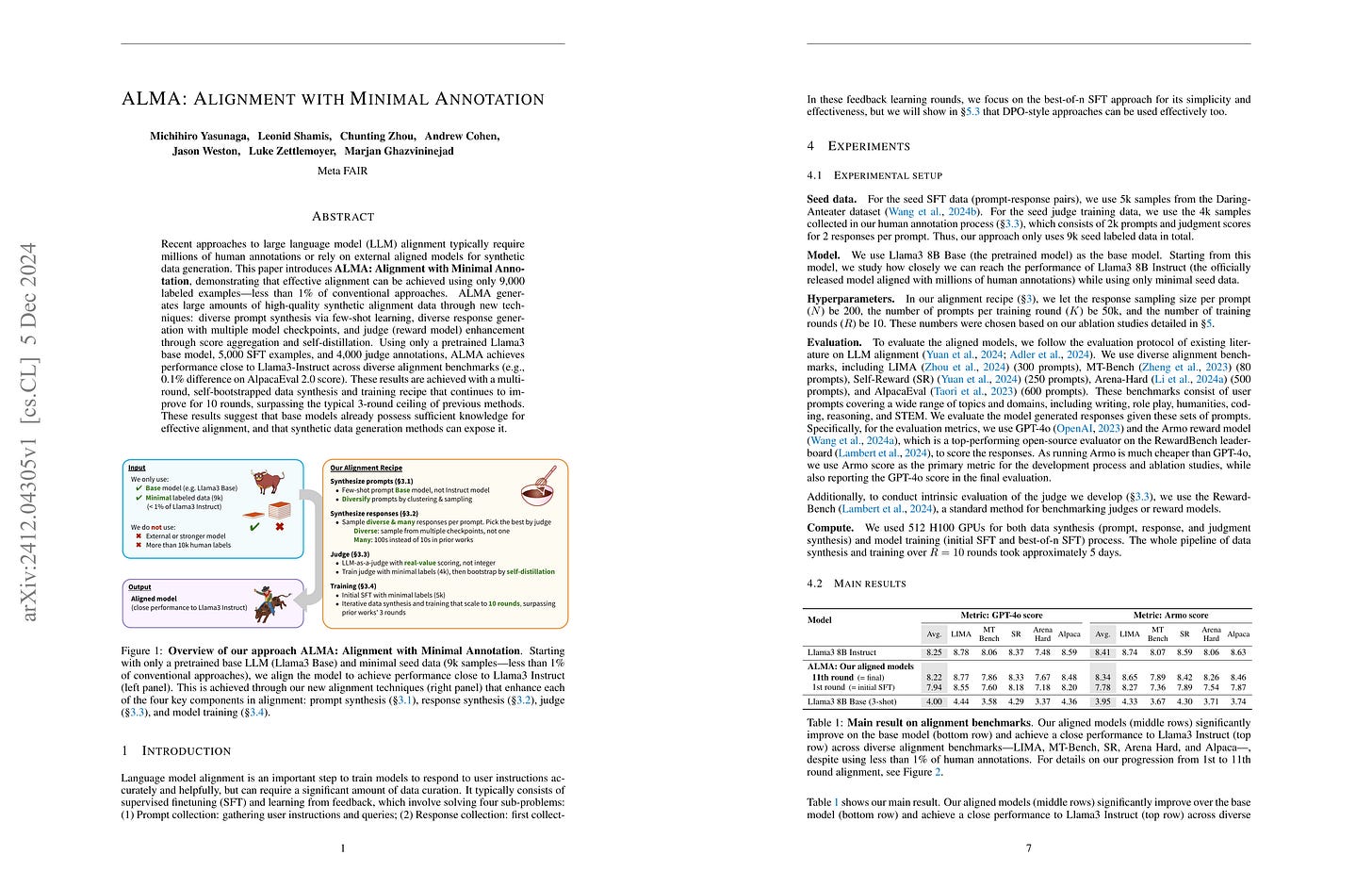

ALMA: Alignment with Minimal Annotation

(Michihiro Yasunaga, Leonid Shamis, Chunting Zhou, Andrew Cohen, Jason Weston, Luke Zettlemoyer, Marjan Ghazvininejad)

Recent approaches to large language model (LLM) alignment typically require millions of human annotations or rely on external aligned models for synthetic data generation. This paper introduces ALMA: Alignment with Minimal Annotation, demonstrating that effective alignment can be achieved using only 9,000 labeled examples -- less than 1% of conventional approaches. ALMA generates large amounts of high-quality synthetic alignment data through new techniques: diverse prompt synthesis via few-shot learning, diverse response generation with multiple model checkpoints, and judge (reward model) enhancement through score aggregation and self-distillation. Using only a pretrained Llama3 base model, 5,000 SFT examples, and 4,000 judge annotations, ALMA achieves performance close to Llama3-Instruct across diverse alignment benchmarks (e.g., 0.1% difference on AlpacaEval 2.0 score). These results are achieved with a multi-round, self-bootstrapped data synthesis and training recipe that continues to improve for 10 rounds, surpassing the typical 3-round ceiling of previous methods. These results suggest that base models already possess sufficient knowledge for effective alignment, and that synthetic data generation methods can expose it.

Aligning a model from small-scale seed data. The key ingredients are amplifying the prompts, generating responses that are as diverse as possible, and using an LLM judge that produces real-valued scores instead of integer scores.
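A minimal sketch of score aggregation, assuming it means averaging several stochastic integer judgments into one real-valued score and then using those scores to pick a chosen/rejected pair. The toy judge, its quality table, and the function names are hypothetical; ALMA's actual judge prompts, aggregation details, and self-distillation step are not reproduced here.

```python
import random
from typing import Callable, List, Tuple

# A judge returns one integer score (e.g. 1-10) per call; it may be stochastic.
Judge = Callable[[str, str, random.Random], int]


def aggregated_score(judge: Judge, prompt: str, response: str,
                     rng: random.Random, samples: int = 8) -> float:
    """Average several integer judgments into one real-valued score."""
    return sum(judge(prompt, response, rng) for _ in range(samples)) / samples


def best_and_worst(judge: Judge, prompt: str, responses: List[str],
                   rng: random.Random, samples: int = 8) -> Tuple[str, str]:
    """Rank candidate responses by aggregated score; return (chosen, rejected)."""
    ranked = sorted(responses,
                    key=lambda r: aggregated_score(judge, prompt, r, rng, samples))
    return ranked[-1], ranked[0]


# Hypothetical toy judge: a hidden quality level plus integer noise in {-1, 0, 1}.
QUALITY = {"off-topic": 2, "partial answer": 5, "detailed answer": 9}


def toy_judge(prompt: str, response: str, rng: random.Random) -> int:
    return min(10, max(1, QUALITY[response] + rng.choice([-1, 0, 1])))


rng = random.Random(0)
chosen, rejected = best_and_worst(toy_judge, "Explain VQ.", list(QUALITY), rng)
print(chosen, "|", rejected)  # detailed answer | off-topic
```

Averaging is what turns coarse integer ratings into finer-grained real-valued scores, which can separate candidates that a single integer judgment would often rate as a tie.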

#alignment #reward-model

Densing Law of LLMs

(Chaojun Xiao, Jie Cai, Weilin Zhao, Guoyang Zeng, Xu Han, Zhiyuan Liu, Maosong Sun)

Large Language Models (LLMs) have emerged as a milestone in artificial intelligence, and their performance can improve as the model size increases. However, this scaling brings great challenges to training and inference efficiency, particularly for deploying LLMs in resource-constrained environments, and the scaling trend is becoming increasingly unsustainable. This paper introduces the concept of "capacity density" as a new metric to evaluate the quality of the LLMs across different scales and describes the trend of LLMs in terms of both effectiveness and efficiency. To calculate the capacity density of a given target LLM, we first introduce a set of reference models and develop a scaling law to predict the downstream performance of these reference models based on their parameter sizes. We then define the effective parameter size of the target LLM as the parameter size required by a reference model to achieve equivalent performance, and formalize the capacity density as the ratio of the effective parameter size to the actual parameter size of the target LLM. Capacity density provides a unified framework for assessing both model effectiveness and efficiency. Our further analysis of recent open-source base LLMs reveals an empirical law (the densing law) that the capacity density of LLMs grows exponentially over time. More specifically, using some widely used benchmarks for evaluation, the capacity density of LLMs doubles approximately every three months. The law provides new perspectives to guide future LLM development, emphasizing the importance of improving capacity density to achieve optimal results with minimal computational overhead.

At its core this is a study of LLM development trends, but the way it measures that progress is interesting. It uses a metric called capacity density: the ratio of a model's effective parameter count to its actual parameter count. The effective parameter count is the size a reference model would need to reach the same level of performance. To connect reference-model scale to performance, the authors fit a Llama 3 style task scaling law.
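A sketch of the capacity density computation under assumed functional forms: a reference curve maps parameter count to loss with a power law and loss to a score with a sigmoid, and that curve is inverted by bisection to get the effective parameter size. All coefficients in `reference_performance` are hypothetical, not the paper's fit, and the function names are illustrative. The last function simply turns the reported three-month doubling into a projection.

```python
import math
from typing import Callable

Perf = Callable[[float], float]


def reference_performance(n_params: float) -> float:
    """Hypothetical reference-model fit: loss from a power law, score from a sigmoid of loss."""
    loss = 1.7 + 400.0 * n_params ** -0.3
    return 1.0 / (1.0 + math.exp(8.0 * (loss - 2.2)))


def effective_params(target_score: float, perf: Perf,
                     lo: float = 1e6, hi: float = 1e13, iters: int = 200) -> float:
    """Find N with perf(N) == target_score by bisection in log space (perf must increase with N)."""
    for _ in range(iters):
        mid = math.sqrt(lo * hi)  # geometric midpoint
        if perf(mid) < target_score:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)


def capacity_density(target_score: float, actual_params: float, perf: Perf) -> float:
    """Effective parameter size divided by actual parameter size."""
    return effective_params(target_score, perf) / actual_params


def projected_density(density_now: float, months: float, doubling_months: float = 3.0) -> float:
    """Extrapolate the reported trend: density doubles roughly every 3 months."""
    return density_now * 2.0 ** (months / doubling_months)


# A hypothetical 7B model that scores like a 14B reference model has density ~2.
score = reference_performance(1.4e10)
print(round(capacity_density(score, 7e9, reference_performance), 3))  # 2.0
print(projected_density(1.0, 12))  # 16.0
```

Searching in log space (geometric midpoints) keeps the bisection well behaved across the many orders of magnitude that parameter counts span.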

#llm #scaling-law

Establishing Task Scaling Laws via Compute-Efficient Model Ladders

(Akshita Bhagia, Jiacheng Liu, Alexander Wettig, David Heineman, Oyvind Tafjord, Ananya Harsh Jha, Luca Soldaini, Noah A. Smith, Dirk Groeneveld, Pang Wei Koh, Jesse Dodge, Hannaneh Hajishirzi)

We develop task scaling laws and model ladders to predict the individual task performance of pretrained language models (LMs) in the overtrained setting. Standard power laws for language modeling loss cannot accurately model task performance. Therefore, we leverage a two-step prediction approach: first use model and data size to predict a task-specific loss, and then use this task loss to predict task performance. We train a set of small-scale "ladder" models, collect data points to fit the parameterized functions of the two prediction steps, and make predictions for two target models: a 7B model trained to 4T tokens and a 13B model trained to 5T tokens. Training the ladder models only costs 1% of the compute used for the target models. On four multiple-choice tasks written in ranked classification format, we can predict the accuracy of both target models within 2 points of absolute error. We have higher prediction error on four other tasks (average absolute error 6.9) and find that these are often tasks with higher variance in task metrics. We also find that using less compute to train fewer ladder models tends to deteriorate predictions. Finally, we empirically show that our design choices and the two-step approach lead to superior performance in establishing scaling laws.

Similar to the study above (https://arxiv.org/abs/2412.04315), this work builds a task scaling law in the style of Llama 3 by chaining two predictions: task loss from model and data size, then accuracy from task loss. The predictions work well, landing within 2 points of absolute error on four multiple-choice tasks (the error is larger, 6.9 on average, on four higher-variance tasks), so this approach looks quite effective for task scaling laws.
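The two-step chain can be sketched as two fitted functions composed together. The functional forms (a power law in parameters N and tokens D for task loss, a sigmoid from loss to accuracy) are assumptions in the spirit of the Llama 3 approach, and every coefficient below is hypothetical rather than a fitted value from the paper, so the outputs are illustrative only. The names `TaskLossFit`, `AccuracyFit`, and `predict_accuracy` are also hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class TaskLossFit:
    """Step 1: task loss from parameter count N and training tokens D."""
    A: float
    alpha: float
    B: float
    beta: float
    E: float

    def __call__(self, n_params: float, n_tokens: float) -> float:
        return self.A / n_params ** self.alpha + self.B / n_tokens ** self.beta + self.E


@dataclass
class AccuracyFit:
    """Step 2: accuracy as a sigmoid of task loss (k < 0: lower loss, higher accuracy)."""
    a: float
    k: float
    L0: float
    b: float

    def __call__(self, loss: float) -> float:
        return self.a / (1.0 + math.exp(-self.k * (loss - self.L0))) + self.b


def predict_accuracy(step1: TaskLossFit, step2: AccuracyFit,
                     n_params: float, n_tokens: float) -> float:
    """Chain the two fits: (N, D) -> task loss -> accuracy."""
    return step2(step1(n_params, n_tokens))


# Hypothetical coefficients, standing in for values fit on the small ladder models.
step1 = TaskLossFit(A=50.0, alpha=0.3, B=200.0, beta=0.3, E=0.6)
step2 = AccuracyFit(a=0.7, k=-5.0, L0=1.0, b=0.25)  # b = chance level on 4-way multiple choice
acc_7b = predict_accuracy(step1, step2, 7e9, 4e12)
acc_13b = predict_accuracy(step1, step2, 13e9, 5e12)
print(f"7B/4T: {acc_7b:.3f}  13B/5T: {acc_13b:.3f}")
```

Splitting the prediction this way lets the smooth power law handle extrapolation in N and D, while the sigmoid absorbs the nonlinear, saturating relationship between loss and accuracy that a single power law on accuracy fits poorly.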

#scaling-law

Infinity: Scaling Bitwise AutoRegressive Modeling for High-Resolution Image Synthesis

(Jian Han, Jinlai Liu, Yi Jiang, Bin Yan, Yuqi Zhang, Zehuan Yuan, Bingyue Peng, Xiaobing Liu)

We present Infinity, a Bitwise Visual AutoRegressive Model capable of generating high-resolution, photorealistic images following language instruction. Infinity redefines the visual autoregressive model under a bitwise token prediction framework with an infinite-vocabulary tokenizer & classifier and bitwise self-correction mechanism, remarkably improving the generation capacity and details. By theoretically scaling the tokenizer vocabulary size to infinity and concurrently scaling the transformer size, our method significantly unleashes powerful scaling capabilities compared to vanilla VAR. Infinity sets a new record for autoregressive text-to-image models, outperforming top-tier diffusion models like SD3-Medium and SDXL. Notably, Infinity surpasses SD3-Medium by improving the GenEval benchmark score from 0.62 to 0.73 and the ImageReward benchmark score from 0.87 to 0.96, achieving a win rate of 66%. Without extra optimization, Infinity generates a high-quality 1024x1024 image in 0.8 seconds, making it 2.6x faster than SD3-Medium and establishing it as the fastest text-to-image model. Models and codes will be released to promote further exploration of Infinity for visual generation and unified tokenizer modeling.

Autoregression follows VAR (https://arxiv.org/abs/2404.02905) and quantization follows residual BSQ (https://arxiv.org/abs/2406.07548). However, instead of predicting integer indices, the model predicts each bit individually, which lets it support a vocabulary of up to 2^32. It also counters error accumulation in the autoregressive process by training on codes with flipped bits, so the model learns to correct such errors. Very interesting. As long as autoregressive generation over bits works well, I suspect most of the problems stemming from VQ could be largely resolved.
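A minimal sketch of the bitwise idea on a single token, assuming sign-based binary quantization as in BSQ: each latent dimension becomes one bit, the head predicts one logit per bit instead of one per vocabulary entry, and training-time bit flips simulate prediction errors. The function names and the flip probability are hypothetical; Infinity applies its self-correction inside a multi-scale residual pipeline, which is not reproduced here.

```python
import math
import random
from typing import List


def bsq_bits(z: List[float]) -> List[int]:
    """Sign-quantize each latent dimension to one bit (1 if positive, else 0)."""
    return [1 if x > 0 else 0 for x in z]


def bits_to_code(bits: List[int]) -> List[float]:
    """Map bits back to a unit-norm code vector with entries +-1/sqrt(d)."""
    s = 1.0 / math.sqrt(len(bits))
    return [s if b else -s for b in bits]


def bits_to_index(bits: List[int]) -> int:
    """The equivalent integer token index a conventional VQ classifier would predict."""
    return sum(b << i for i, b in enumerate(bits))


def predict_bits(bit_logits: List[float]) -> List[int]:
    """Bitwise head: one independent binary decision per bit."""
    return [1 if x > 0 else 0 for x in bit_logits]


def head_outputs(d: int, bitwise: bool) -> int:
    """Classifier output size: d logits (bitwise) vs 2**d logits (index-wise)."""
    return d if bitwise else 2 ** d


def flip_bits(bits: List[int], p: float, rng: random.Random) -> List[int]:
    """Training-time perturbation: flip each bit independently with probability p."""
    return [1 - b if rng.random() < p else b for b in bits]


z = [0.3, -1.2, 0.05, -0.4]
bits = bsq_bits(z)
print(bits, bits_to_index(bits))  # [1, 0, 1, 0] 5
print(bits_to_code(bits))  # [0.5, -0.5, 0.5, -0.5]
print(head_outputs(32, bitwise=False), head_outputs(32, bitwise=True))  # 4294967296 32
print(flip_bits(bits, 1.0, random.Random(0)))  # [0, 1, 0, 1]
```

The head-size comparison is the core design choice: an index-wise classifier over a 2^32 vocabulary would need over four billion output logits, while the bitwise head needs only 32 binary decisions for the same code space.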

#vq #autoregressive-model