2024년 12월 25일

Mulberry: Empowering MLLM with o1-like Reasoning and Reflection via Collective Monte Carlo Tree Search

(Huanjin Yao, Jiaxing Huang, Wenhao Wu, Jingyi Zhang, Yibo Wang, Shunyu Liu, Yingjie Wang, Yuxin Song, Haocheng Feng, Li Shen, Dacheng Tao)

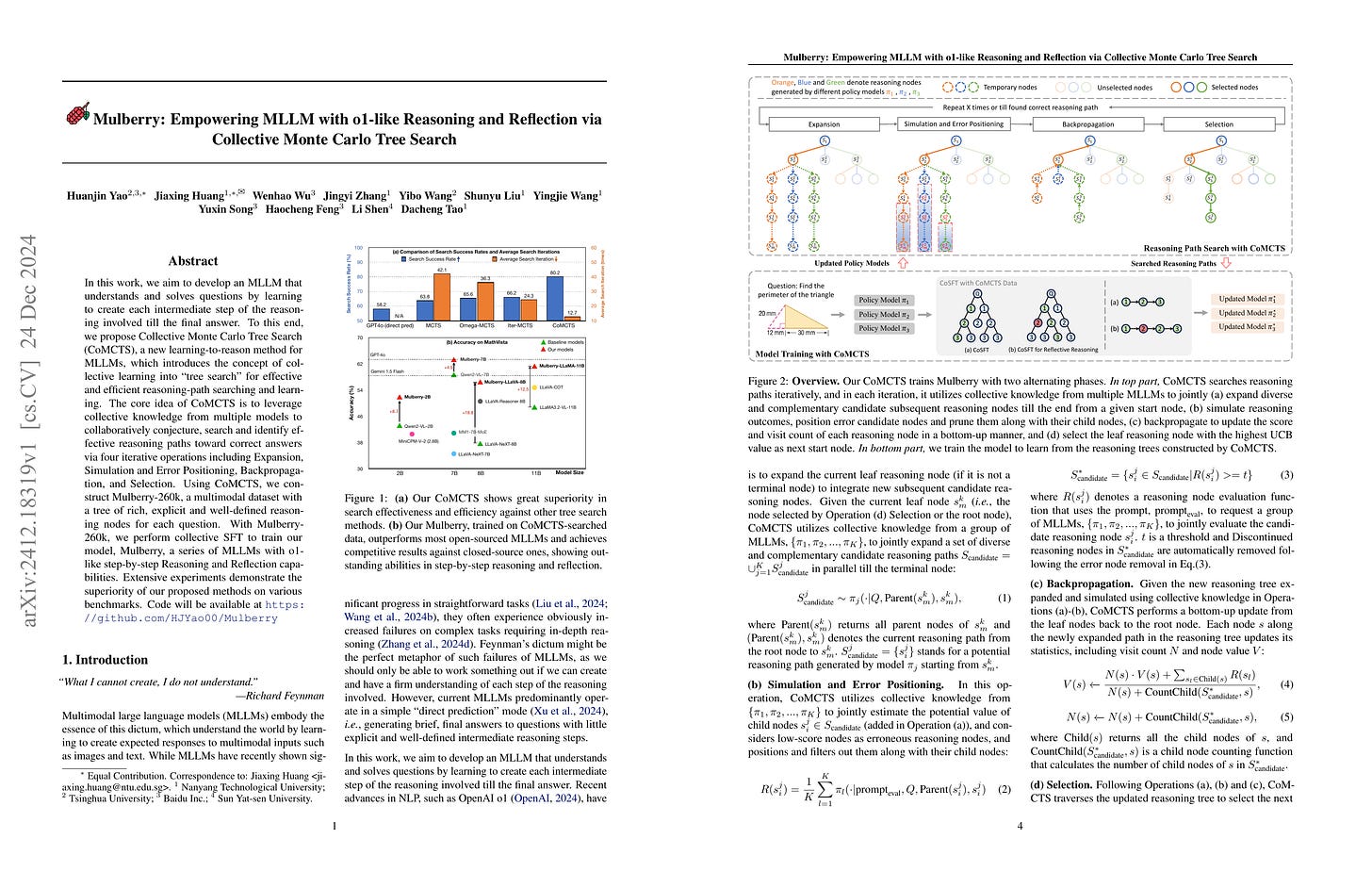

In this work, we aim to develop an MLLM that understands and solves questions by learning to create each intermediate step of the reasoning involved till the final answer. To this end, we propose Collective Monte Carlo Tree Search (CoMCTS), a new learning-to-reason method for MLLMs, which introduces the concept of collective learning into ``tree search'' for effective and efficient reasoning-path searching and learning. The core idea of CoMCTS is to leverage collective knowledge from multiple models to collaboratively conjecture, search and identify effective reasoning paths toward correct answers via four iterative operations including Expansion, Simulation and Error Positioning, Backpropagation, and Selection. Using CoMCTS, we construct Mulberry-260k, a multimodal dataset with a tree of rich, explicit and well-defined reasoning nodes for each question. With Mulberry-260k, we perform collective SFT to train our model, Mulberry, a series of MLLMs with o1-like step-by-step Reasoning and Reflection capabilities. Extensive experiments demonstrate the superiority of our proposed methods on various benchmarks. Code will be available at https://github.com/HJYao00/Mulberry

Vision-Language 모델에 대한 추론 능력 주입. 여러 개의 MLLM을 사용해 추론 경로를 생성하는 MCTS 기반이군요. 경로를 평가하는 것 또한 여러 MLLM을 프롬프팅해서 점수를 내는 방식으로 진행했네요.

o1에서 MCTS를 사용했는가도 논쟁이 되고 있죠. 그렇지만 사실 더 중요한 것은 어떻게 Reward를 부여했는가일 것이라고 생각합니다.

Injecting reasoning abilities into vision-language models. This method is based on MCTS using multiple MLLMs to generate reasoning paths. The evaluation of these paths is also conducted by prompting multiple MLLMs to provide scores.

There's ongoing debate about whether o1 used MCTS. However, I believe the more crucial aspect is how the reward was assigned.

#vision-language #multimodal #mcts #search #reasoning

Improving Multi-Step Reasoning Abilities of Large Language Models with Direct Advantage Policy Optimization

(Jiacai Liu, Chaojie Wang, Chris Yuhao Liu, Liang Zeng, Rui Yan, Yiwen Sun, Yang Liu, Yahui Zhou)

The role of reinforcement learning (RL) in enhancing the reasoning of large language models (LLMs) is becoming increasingly significant. Despite the success of RL in many scenarios, there are still many challenges in improving the reasoning of LLMs. One challenge is the sparse reward, which makes optimization difficult for RL and necessitates a large amount of data samples. Another challenge stems from the inherent instability of RL, particularly when using Actor-Critic (AC) methods to derive optimal policies, which often leads to unstable training processes. To address these issues, we introduce Direct Advantage Policy Optimization (DAPO), an novel step-level offline RL algorithm. Unlike standard alignment that rely solely outcome rewards to optimize policies (such as DPO), DAPO employs a critic function to predict the reasoning accuracy at each step, thereby generating dense signals to refine the generation strategy. Additionally, the Actor and Critic components in DAPO are trained independently, avoiding the co-training instability observed in standard AC algorithms like PPO. We train DAPO on mathematical and code query datasets and then evaluate its performance on multiple benchmarks. Our results show that DAPO can effectively enhance the mathematical and code capabilities on both SFT models and RL models, demonstrating the effectiveness of DAPO.

따로 학습한 Critic을 사용하는 오프라인 RL. Critic 학습에서 Generator와 Completer를 구분해 Generator로 추론 단계를 생성한 다음 Completer로 나머지 단계를 생성하고 정답과 비교하는 형태로 Value를 추정하는 방법을 사용했네요.

This is an offline RL method that uses a separately trained critic. For training the critic, they distinguish between a generator and a completer. The value is estimated by generating reasoning steps using the generator, then completing the rest of the trajectory with the completer, and finally comparing the result with the answer.

#reasoning #rl