December 2, 2024

Reverse Thinking Makes LLMs Stronger Reasoners

(Justin Chih-Yao Chen, Zifeng Wang, Hamid Palangi, Rujun Han, Sayna Ebrahimi, Long Le, Vincent Perot, Swaroop Mishra, Mohit Bansal, Chen-Yu Lee, Tomas Pfister)

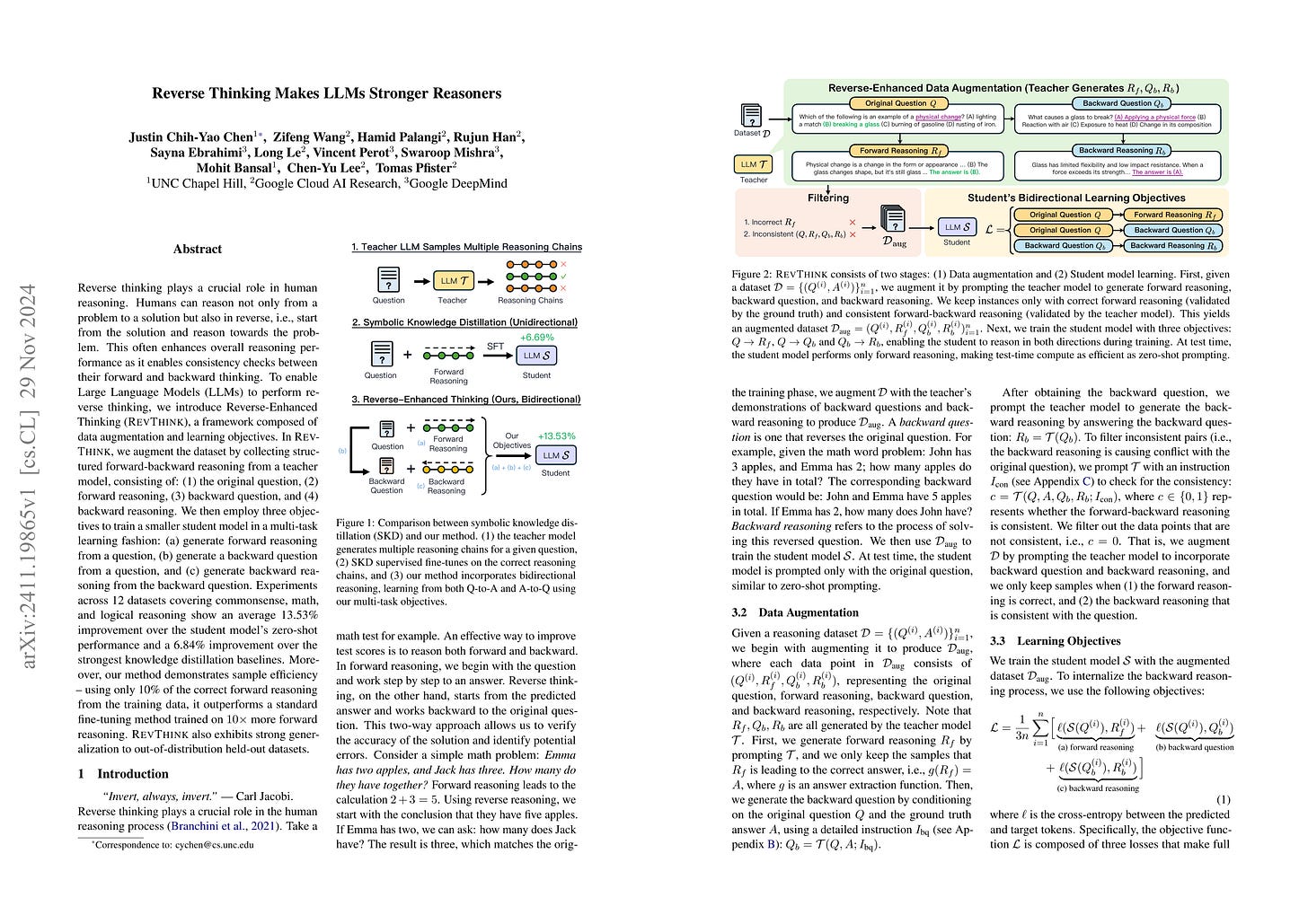

Reverse thinking plays a crucial role in human reasoning. Humans can reason not only from a problem to a solution but also in reverse, i.e., start from the solution and reason towards the problem. This often enhances overall reasoning performance as it enables consistency checks between their forward and backward thinking. To enable Large Language Models (LLMs) to perform reverse thinking, we introduce Reverse-Enhanced Thinking (RevThink), a framework composed of data augmentation and learning objectives. In RevThink, we augment the dataset by collecting structured forward-backward reasoning from a teacher model, consisting of: (1) the original question, (2) forward reasoning, (3) backward question, and (4) backward reasoning. We then employ three objectives to train a smaller student model in a multi-task learning fashion: (a) generate forward reasoning from a question, (b) generate a backward question from a question, and (c) generate backward reasoning from the backward question. Experiments across 12 datasets covering commonsense, math, and logical reasoning show an average 13.53% improvement over the student model's zero-shot performance and a 6.84% improvement over the strongest knowledge distillation baselines. Moreover, our method demonstrates sample efficiency -- using only 10% of the correct forward reasoning from the training data, it outperforms a standard fine-tuning method trained on 10x more forward reasoning. RevThink also exhibits strong generalization to out-of-distribution held-out datasets.

The method flips question-answer pairs to generate reversed problems, so the model is trained to reason from the answer back to the question. It can be viewed as a form of data augmentation.
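As a rough illustration of the multi-task setup the abstract describes, one augmented sample (question, forward reasoning, backward question, backward reasoning) can be expanded into three input/target pairs, one per objective. This is only a sketch: the `AugmentedSample` class, the `build_training_pairs` helper, the prompt prefixes, and the toy arithmetic example are all hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class AugmentedSample:
    question: str            # (1) original question
    forward_reasoning: str   # (2) forward reasoning from the teacher
    backward_question: str   # (3) backward question from the teacher
    backward_reasoning: str  # (4) backward reasoning from the teacher


def build_training_pairs(sample):
    """Expand one augmented sample into (input, target) pairs for objectives (a)-(c).

    The instruction prefixes are placeholders chosen for this sketch.
    """
    return [
        # (a) question -> forward reasoning
        ("Answer the question: " + sample.question, sample.forward_reasoning),
        # (b) question -> backward question
        ("Write the reverse question: " + sample.question, sample.backward_question),
        # (c) backward question -> backward reasoning
        ("Answer the question: " + sample.backward_question, sample.backward_reasoning),
    ]


# Toy example invented for illustration only.
toy = AugmentedSample(
    question="Tom has 3 apples and buys 2 more. How many apples does he have?",
    forward_reasoning="3 + 2 = 5. The answer is 5.",
    backward_question="Tom buys 2 apples and ends up with 5. How many did he start with?",
    backward_reasoning="5 - 2 = 3. The answer is 3.",
)
pairs = build_training_pairs(toy)
```

Each pair would then be fed to an ordinary sequence-to-sequence fine-tuning loop, so the student sees all three tasks during training.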

#synthetic-data #reasoning #distillation

Critical Tokens Matter: Token-Level Contrastive Estimation Enhence LLM's Reasoning Capability

(Zicheng Lin, Tian Liang, Jiahao Xu, Xing Wang, Ruilin Luo, Chufan Shi, Siheng Li, Yujiu Yang, Zhaopeng Tu)

Large Language Models (LLMs) have exhibited remarkable performance on reasoning tasks. They utilize autoregressive token generation to construct reasoning trajectories, enabling the development of a coherent chain of thought. In this work, we explore the impact of individual tokens on the final outcomes of reasoning tasks. We identify the existence of "critical tokens" that lead to incorrect reasoning trajectories in LLMs. Specifically, we find that LLMs tend to produce positive outcomes when forced to decode other tokens instead of critical tokens. Motivated by this observation, we propose a novel approach - cDPO - designed to automatically recognize and conduct token-level rewards for the critical tokens during the alignment process. Specifically, we develop a contrastive estimation approach to automatically identify critical tokens. It is achieved by comparing the generation likelihood of positive and negative models. To achieve this, we separately fine-tune the positive and negative models on various reasoning trajectories; consequently, they are capable of identifying critical tokens within incorrect trajectories that contribute to erroneous outcomes. Moreover, to further align the model with the critical token information during the alignment process, we extend the conventional DPO algorithms to token-level DPO and utilize the differential likelihood from the aforementioned positive and negative model as important weight for token-level DPO learning. Experimental results on GSM8K and MATH500 benchmarks with two widely used models Llama-3 (8B and 70B) and deepseek-math (7B) demonstrate the effectiveness of the proposed approach cDPO.

The paper finds that certain critical tokens steer an LLM's reasoning toward incorrect answers. The result also brings to mind the importance of the first token. (https://arxiv.org/abs/2402.10200) The idea is that identifying these critical tokens and suppressing them improves reasoning performance.
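To make the contrastive idea concrete, here is a minimal sketch that scores each token of a trajectory by how much more likely a "negative" model finds it than a "positive" model. The abstract only says the two likelihoods are compared, so the plain log-likelihood difference used here, the function names, and the toy numbers are assumptions rather than the paper's exact formula.

```python
def critical_token_scores(logp_pos, logp_neg):
    """Score each token by log p_neg(token) - log p_pos(token).

    A large positive score means the negative model (fine-tuned on incorrect
    trajectories) prefers the token far more than the positive model does.
    The plain difference is an assumed scoring rule for this sketch.
    """
    if len(logp_pos) != len(logp_neg):
        raise ValueError("both models must score the same token sequence")
    return [neg - pos for pos, neg in zip(logp_pos, logp_neg)]


def most_critical_index(logp_pos, logp_neg):
    """Return the position of the token with the highest contrastive score."""
    scores = critical_token_scores(logp_pos, logp_neg)
    return max(range(len(scores)), key=scores.__getitem__)


# Toy per-token log-probabilities for a four-token trajectory (made up).
logp_pos = [-0.2, -0.3, -4.0, -0.5]
logp_neg = [-0.3, -0.4, -0.6, -0.7]
critical = most_critical_index(logp_pos, logp_neg)
```

In a token-level DPO setting, scores like these could serve as per-token weights, which is the role the abstract assigns to the differential likelihood.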

#reasoning

DeMo: Decoupled Momentum Optimization

(Bowen Peng, Jeffrey Quesnelle, Diederik P. Kingma)

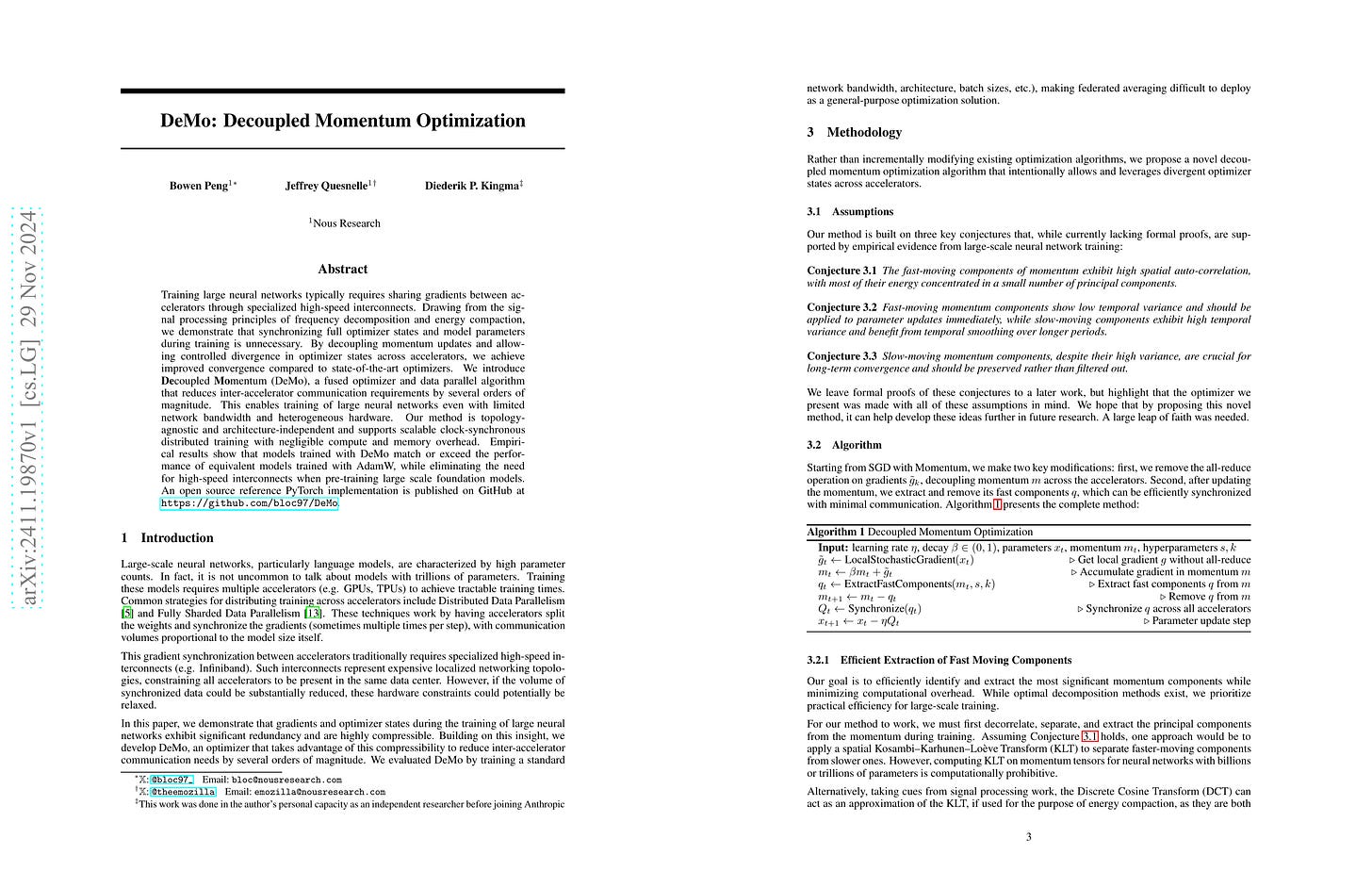

Training large neural networks typically requires sharing gradients between accelerators through specialized high-speed interconnects. Drawing from the signal processing principles of frequency decomposition and energy compaction, we demonstrate that synchronizing full optimizer states and model parameters during training is unnecessary. By decoupling momentum updates and allowing controlled divergence in optimizer states across accelerators, we achieve improved convergence compared to state-of-the-art optimizers. We introduce Decoupled Momentum (DeMo), a fused optimizer and data parallel algorithm that reduces inter-accelerator communication requirements by several orders of magnitude. This enables training of large neural networks even with limited network bandwidth and heterogeneous hardware. Our method is topology-agnostic and architecture-independent and supports scalable clock-synchronous distributed training with negligible compute and memory overhead. Empirical results show that models trained with DeMo match or exceed the performance of equivalent models trained with AdamW, while eliminating the need for high-speed interconnects when pre-training large scale foundation models. An open source reference PyTorch implementation is published on GitHub at https://github.com/bloc97/DeMo

A method for reducing communication volume during model training. It separates the momentum into fast-changing and slow-changing components, then synchronizes only the fast-changing part across accelerators.

A model trained with a decentralized training method recently finished training. (https://x.com/PrimeIntellect/status/1859923050092994738) Once training grows beyond what a single data center can handle, methods like this are bound to attract more attention.
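The momentum split mentioned in the DeMo note can be sketched with a small frequency decomposition. The code below takes a momentum vector, keeps the `k` largest-magnitude DCT coefficients as the part to be shared, and leaves the rest as a local residual. Treating "top-k DCT coefficients" as the fast-changing components is an assumption made for illustration, and none of the function names come from the reference implementation.

```python
import math


def dct(x):
    """Orthonormal DCT-II of a list of floats."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * s)
    return out


def idct(c):
    """Inverse of dct() above (orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        s = c[0] * math.sqrt(1.0 / n)
        s += sum(
            c[k] * math.sqrt(2.0 / n) * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(1, n)
        )
        out.append(s)
    return out


def split_momentum(momentum, k):
    """Split momentum into (shared, residual).

    shared   : reconstruction from the k largest-magnitude DCT coefficients;
               only these k coefficients would need to be communicated.
    residual : what remains in the local optimizer state, never synchronized.
    """
    coeffs = dct(momentum)
    order = sorted(range(len(coeffs)), key=lambda j: abs(coeffs[j]), reverse=True)
    keep = set(order[:k])
    sparse = [c if j in keep else 0.0 for j, c in enumerate(coeffs)]
    shared = idct(sparse)
    residual = [m - s for m, s in zip(momentum, shared)]
    return shared, residual


momentum = [0.5, -1.0, 2.0, 0.25, -0.75, 1.5, 0.0, -0.5]
shared, residual = split_momentum(momentum, k=2)
```

With `k=2` on an eight-element vector, only a quarter of the coefficients would cross the network, which conveys the intuition behind the communication savings even though the real ratios depend on the actual algorithm and settings.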

#efficient-training

JetFormer: An Autoregressive Generative Model of Raw Images and Text

(Michael Tschannen, André Susano Pinto, Alexander Kolesnikov)

Removing modeling constraints and unifying architectures across domains has been a key driver of the recent progress in training large multimodal models. However, most of these models still rely on many separately trained components such as modality-specific encoders and decoders. In this work, we further streamline joint generative modeling of images and text. We propose an autoregressive decoder-only transformer - JetFormer - which is trained to directly maximize the likelihood of raw data, without relying on any separately pretrained components, and can understand and generate both text and images. Specifically, we leverage a normalizing flow model to obtain a soft-token image representation that is jointly trained with an autoregressive multimodal transformer. The normalizing flow model serves as both an image encoder for perception tasks and an image decoder for image generation tasks during inference. JetFormer achieves text-to-image generation quality competitive with recent VQ-VAE- and VAE-based baselines. These baselines rely on pretrained image autoencoders, which are trained with a complex mixture of losses, including perceptual ones. At the same time, JetFormer demonstrates robust image understanding capabilities. To the best of our knowledge, JetFormer is the first model that is capable of generating high-fidelity images and producing strong log-likelihood bounds.

Normalizing flows! It has been a long time. This is an autoregressive image generation and understanding model that uses a flow model as the image encoder (and, at the same time, the decoder). The embeddings produced by the flow model are modeled with a GIVT-style Gaussian mixture. (https://arxiv.org/abs/2312.02116)
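What lets a flow act as both encoder and decoder is exact invertibility. The sketch below shows a single affine coupling layer, a standard building block of normalizing flows, with a forward pass, its log-determinant, and an exact inverse. The text above does not describe JetFormer's flow architecture, so the choice of affine coupling and the toy `scale_fn` / `shift_fn` (fixed functions standing in for learned networks) are assumptions.

```python
import math


def coupling_forward(x, scale_fn, shift_fn):
    """Encode: keep the first half, affinely transform the second half.

    Assumes len(x) is even. Returns (z, log_det), where log_det is the log
    absolute determinant of the Jacobian, as used in the flow likelihood.
    """
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    s, t = scale_fn(x1), shift_fn(x1)
    z2 = [b * math.exp(si) + ti for b, si, ti in zip(x2, s, t)]
    return x1 + z2, sum(s)


def coupling_inverse(z, scale_fn, shift_fn):
    """Decode: undo coupling_forward exactly, using the untouched first half."""
    half = len(z) // 2
    z1, z2 = z[:half], z[half:]
    s, t = scale_fn(z1), shift_fn(z1)
    x2 = [(b - ti) * math.exp(-si) for b, si, ti in zip(z2, s, t)]
    return z1 + x2


def scale_fn(h):
    # Toy stand-in for a learned network that predicts log-scales.
    return [math.tanh(v) for v in h]


def shift_fn(h):
    # Toy stand-in for a learned network that predicts shifts.
    return [0.5 * v for v in h]


x = [0.2, -1.0, 0.7, 1.5]
z, log_det = coupling_forward(x, scale_fn, shift_fn)
x_rec = coupling_inverse(z, scale_fn, shift_fn)
```

Because the inverse is exact, the same parameters can map pixels to latent tokens for understanding and latent tokens back to pixels for generation.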

#flow #autoregressive-model #image-text

A Simple and Provable Scaling Law for the Test-Time Compute of Large Language Models

(Yanxi Chen, Xuchen Pan, Yaliang Li, Bolin Ding, Jingren Zhou)

We propose a general two-stage algorithm that enjoys a provable scaling law for the test-time compute of large language models (LLMs). Given an input problem, the proposed algorithm first generates N candidate solutions, and then chooses the best one via a multiple-round knockout tournament where each pair of candidates are compared for K times and only the winners move on to the next round. In a minimalistic implementation, both stages can be executed with a black-box LLM alone and nothing else (e.g., no external verifier or reward model), and a total of N×(K+1) highly parallelizable LLM calls are needed for solving an input problem. Assuming that a generated candidate solution is correct with probability p_gen > 0 and a comparison between a pair of correct and incorrect solutions identifies the right winner with probability p_comp > 0.5 (i.e., better than a random guess), we prove theoretically that the failure probability of the proposed algorithm decays to zero exponentially with respect to N and K:

P(final output is incorrect) ≤ (1 − p_gen)^N + ⌈log_2 N⌉ · e^(−2K(p_comp − 0.5)^2).

Our empirical results with the challenging MMLU-Pro benchmark validate the technical assumptions, as well as the efficacy of the proposed algorithm and the gains from scaling up its test-time compute.

A test-time scaling method that generates N samples and then runs a knockout tournament in which each pair of samples is compared K times. The paper shows that if the probability of generating a correct answer is greater than 0 and the probability of picking the correct answer in a comparison is greater than 0.5, the error rate converges to 0 as N and K increase.
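The two-stage procedure and its bound are easy to simulate. In the sketch below, candidates are booleans (True means correct), a noisy comparator stands in for the LLM judge, and `failure_bound` evaluates the right-hand side of the inequality quoted in the abstract. Tie-breaking and the handling of an odd candidate out are assumptions, as are all function names.

```python
import math
import random


def knockout(candidates, compare, k, rng):
    """Knockout tournament: each pair is compared k times, the majority winner advances."""
    pool = list(candidates)
    while len(pool) > 1:
        next_round = []
        for i in range(0, len(pool) - 1, 2):
            a, b = pool[i], pool[i + 1]
            wins_a = sum(1 for _ in range(k) if compare(a, b, rng) == a)
            # Assumption: an exact tie goes to b.
            next_round.append(a if 2 * wins_a > k else b)
        if len(pool) % 2 == 1:
            # Assumption: an unpaired candidate gets a bye.
            next_round.append(pool[-1])
        pool = next_round
    return pool[0]


def noisy_compare(p_comp):
    """Build a comparator that picks the correct candidate with probability p_comp."""
    def compare(a, b, rng):
        if a == b:
            return a
        correct, wrong = (a, b) if a else (b, a)
        return correct if rng.random() < p_comp else wrong
    return compare


def failure_bound(n, k, p_gen, p_comp):
    """(1 - p_gen)^N + ceil(log2 N) * exp(-2K (p_comp - 0.5)^2)."""
    return (1 - p_gen) ** n + math.ceil(math.log2(n)) * math.exp(
        -2 * k * (p_comp - 0.5) ** 2
    )


rng = random.Random(0)
winner = knockout([False, True, False, False], noisy_compare(1.0), k=3, rng=rng)
loose = failure_bound(4, 5, p_gen=0.3, p_comp=0.7)
tight = failure_bound(16, 50, p_gen=0.3, p_comp=0.7)
```

Note that for small N and K the bound can exceed 1 and is therefore vacuous; it only becomes informative once K is large enough to offset the ⌈log_2 N⌉ factor.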

#search

Puzzle: Distillation-Based NAS for Inference-Optimized LLMs

(Akhiad Bercovich, Tomer Ronen, Talor Abramovich, Nir Ailon, Nave Assaf, Mohammad Dabbah, Ido Galil, Amnon Geifman, Yonatan Geifman, Izhak Golan, Netanel Haber, Ehud Karpas, Itay Levy, Shahar Mor, Zach Moshe, Najeeb Nabwani, Omri Puny, Ran Rubin, Itamar Schen, Ido Shahaf, Oren Tropp, Omer Ullman Argov, Ran Zilberstein, Ran El-Yaniv)

Large language models (LLMs) have demonstrated remarkable capabilities, but their adoption is limited by high computational costs during inference. While increasing parameter counts enhances accuracy, it also widens the gap between state-of-the-art capabilities and practical deployability. We present Puzzle, a framework to accelerate LLM inference on specific hardware while preserving their capabilities. Through an innovative application of neural architecture search (NAS) at an unprecedented scale, Puzzle systematically optimizes models with tens of billions of parameters under hardware constraints. Our approach utilizes blockwise local knowledge distillation (BLD) for parallel architecture exploration and employs mixed-integer programming for precise constraint optimization. We demonstrate the real-world impact of our framework through Llama-3.1-Nemotron-51B-Instruct (Nemotron-51B), a publicly available model derived from Llama-3.1-70B-Instruct. Nemotron-51B achieves a 2.17x inference throughput speedup, fitting on a single NVIDIA H100 GPU while preserving 98.4% of the original model's capabilities. Nemotron-51B currently stands as the most accurate language model capable of inference on a single GPU with large batch sizes. Remarkably, this transformation required just 45B training tokens, compared to over 15T tokens used for the 70B model it was derived from. This establishes a new paradigm where powerful models can be optimized for efficient deployment with only negligible compromise of their capabilities, demonstrating that inference performance, not parameter count alone, should guide model selection. With the release of Nemotron-51B and the presentation of the Puzzle framework, we provide practitioners immediate access to state-of-the-art language modeling capabilities at significantly reduced computational costs.

NAS is making a comeback. The approach defines multiple block variants that differ in settings such as the number of GQA heads or the FFN expansion ratio, and trains each variant to imitate the corresponding layer of the original model. The optimal configuration is then found from each block's computational cost and its impact on performance.
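The final search step can be pictured as a constrained selection: choose one block variant per layer so that total cost fits a budget while total quality loss is minimal. The abstract says the paper uses mixed-integer programming for this; the sketch below swaps in exhaustive enumeration, which is only practical for toy sizes. The variant names, costs, and loss values are invented for illustration.

```python
from itertools import product


def pick_blocks(layer_options, budget):
    """Pick one variant per layer, minimizing summed quality loss under a cost budget.

    layer_options: one list per layer of (name, cost, quality_loss) tuples.
    Returns (names, total_loss, total_cost), or None if nothing fits the budget.
    Brute force is a stand-in for the mixed-integer program used in the paper.
    """
    best = None
    for combo in product(*layer_options):
        cost = sum(c for _, c, _ in combo)
        loss = sum(q for _, _, q in combo)
        if cost <= budget and (best is None or loss < best[1]):
            best = ([name for name, _, _ in combo], loss, cost)
    return best


# Toy search space: two layers, two variants each (all numbers are made up).
layer_options = [
    [("full", 4, 0.0), ("half_ffn", 2, 0.3)],
    [("full", 4, 0.0), ("fewer_kv_heads", 3, 0.1)],
]
choice = pick_blocks(layer_options, budget=7)
```

Because each block is scored independently against the original layer, the per-block costs and losses can be measured in parallel before the search runs, which matches the role the abstract gives to blockwise local distillation.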

#distillation #nas