2024년 12월 19일

Unveiling the Secret Recipe: A Guide For Supervised Fine-Tuning Small LLMs

(Aldo Pareja, Nikhil Shivakumar Nayak, Hao Wang, Krishnateja Killamsetty, Shivchander Sudalairaj, Wenlong Zhao, Seungwook Han, Abhishek Bhandwaldar, Guangxuan Xu, Kai Xu, Ligong Han, Luke Inglis, Akash Srivastava)

The rise of large language models (LLMs) has created a significant disparity: industrial research labs with their computational resources, expert teams, and advanced infrastructures, can effectively fine-tune LLMs, while individual developers and small organizations face barriers due to limited resources. In this paper, we aim to bridge this gap by presenting a comprehensive study on supervised fine-tuning of LLMs using instruction-tuning datasets spanning diverse knowledge domains and skills. We focus on small-sized LLMs (3B to 7B parameters) for their cost-efficiency and accessibility. We explore various training configurations and strategies across four open-source pre-trained models. We provide detailed documentation of these configurations, revealing findings that challenge several common training practices, including hyperparameter recommendations from TULU and phased training recommended by Orca. Key insights from our work include: (i) larger batch sizes paired with lower learning rates lead to improved model performance on benchmarks such as MMLU, MTBench, and Open LLM Leaderboard; (ii) early-stage training dynamics, such as lower gradient norms and higher loss values, are strong indicators of better final model performance, enabling early termination of sub-optimal runs and significant computational savings; (iii) through a thorough exploration of hyperparameters like warmup steps and learning rate schedules, we provide guidance for practitioners and find that certain simplifications do not compromise performance; and (iv) we observed no significant difference in performance between phased and stacked training strategies, but stacked training is simpler and more sample efficient. With these findings holding robustly across datasets and models, we hope this study serves as a guide for practitioners fine-tuning small LLMs and promotes a more inclusive environment for LLM research.

SFT 과정에 대한 경험. 도메인을 다 섞어서 큰 배치 작은 LR로 학습하는 것이 좋더라는 결과군요.

Experience on SFT. They say that training with large batch and small LR, on mixed data domains yields better results.

#alignment

Autoregressive Video Generation without Vector Quantization

(Haoge Deng, Ting Pan, Haiwen Diao, Zhengxiong Luo, Yufeng Cui, Huchuan Lu, Shiguang Shan, Yonggang Qi, Xinlong Wang)

This paper presents a novel approach that enables autoregressive video generation with high efficiency. We propose to reformulate the video generation problem as a non-quantized autoregressive modeling of temporal frame-by-frame prediction and spatial set-by-set prediction. Unlike raster-scan prediction in prior autoregressive models or joint distribution modeling of fixed-length tokens in diffusion models, our approach maintains the causal property of GPT-style models for flexible in-context capabilities, while leveraging bidirectional modeling within individual frames for efficiency. With the proposed approach, we train a novel video autoregressive model without vector quantization, termed NOVA. Our results demonstrate that NOVA surpasses prior autoregressive video models in data efficiency, inference speed, visual fidelity, and video fluency, even with a much smaller model capacity, i.e., 0.6B parameters. NOVA also outperforms state-of-the-art image diffusion models in text-to-image generation tasks, with a significantly lower training cost. Additionally, NOVA generalizes well across extended video durations and enables diverse zero-shot applications in one unified model. Code and models are publicly available at https://github.com/baaivision/NOVA.

시간축으로는 프레임 단위 Autoregression, 각 프레임 내에서는 MAR (https://arxiv.org/abs/2406.11838). 프레임 내의 예측이 일종의 앵커를 사용하지 않고는 어려웠다고 하네요.

Temporal autoregression is performed at the frame level, while within each frame, MAR (https://arxiv.org/abs/2406.11838) is used for prediction. The authors note that predicting within frames was challenging without using some form of anchor features.

#video-generation #diffusion #autoregressive-model

E-CAR: Efficient Continuous Autoregressive Image Generation via Multistage Modeling

(Zhihang Yuan, Yuzhang Shang, Hanling Zhang, Tongcheng Fang, Rui Xie, Bingxin Xu, Yan Yan, Shengen Yan, Guohao Dai, Yu Wang)

Recent advances in autoregressive (AR) models with continuous tokens for image generation show promising results by eliminating the need for discrete tokenization. However, these models face efficiency challenges due to their sequential token generation nature and reliance on computationally intensive diffusion-based sampling. We present ECAR (Efficient Continuous Auto-Regressive Image Generation via Multistage Modeling), an approach that addresses these limitations through two intertwined innovations: (1) a stage-wise continuous token generation strategy that reduces computational complexity and provides progressively refined token maps as hierarchical conditions, and (2) a multistage flow-based distribution modeling method that transforms only partial-denoised distributions at each stage comparing to complete denoising in normal diffusion models. Holistically, ECAR operates by generating tokens at increasing resolutions while simultaneously denoising the image at each stage. This design not only reduces token-to-image transformation cost by a factor of the stage number but also enables parallel processing at the token level. Our approach not only enhances computational efficiency but also aligns naturally with image generation principles by operating in continuous token space and following a hierarchical generation process from coarse to fine details. Experimental results demonstrate that ECAR achieves comparable image quality to DiT Peebles & Xie [2023] while requiring 10× FLOPs reduction and 5× speedup to generate a 256×256 image.

스케일 단위 Autoregressive 이미지 생성에 Diffusion을 사용한 Continuous Prediction을 결합한 형태. Diffusion도 모든 스케일에서 전체 스케줄을 진행하는 것이 아니라 각 스케일에 따라 스케줄을 나눠서 진행합니다. VAR과 Transfusion류의 Diffusion을 사용한 접근을 연결한 형태군요. (https://arxiv.org/abs/2404.02905, https://arxiv.org/abs/2408.11039, https://arxiv.org/abs/2412.08635) 이것도 꽤 괜찮을 것 같네요.

This approach combines scale-level autoregressive image generation with continuous prediction using diffusion. The diffusion process doesn't undergo full schedules for each scale; instead, the schedule is divided according to each scale. It connects VAR with diffusion-based methods such as Transfusion (https://arxiv.org/abs/2404.02905, https://arxiv.org/abs/2408.11039, https://arxiv.org/abs/2412.08635). [I think this could be a quite promising approach.]

#autoregressive-model #diffusion

Mix-LN: Unleashing the Power of Deeper Layers by Combining Pre-LN and Post-LN

(Pengxiang Li, Lu Yin, Shiwei Liu)

Large Language Models (LLMs) have achieved remarkable success, yet recent findings reveal that their deeper layers often contribute minimally and can be pruned without affecting overall performance. While some view this as an opportunity for model compression, we identify it as a training shortfall rooted in the widespread use of Pre-Layer Normalization (Pre-LN). We demonstrate that Pre-LN, commonly employed in models like GPT and LLaMA, leads to diminished gradient norms in its deeper layers, reducing their effectiveness. In contrast, Post-Layer Normalization (Post-LN) preserves larger gradient norms in deeper layers but suffers from vanishing gradients in earlier layers. To address this, we introduce Mix-LN, a novel normalization technique that combines the strengths of Pre-LN and Post-LN within the same model. Mix-LN applies Post-LN to the earlier layers and Pre-LN to the deeper layers, ensuring more uniform gradients across layers. This allows all parts of the network--both shallow and deep layers--to contribute effectively to training. Extensive experiments with various model sizes from 70M to 7B demonstrate that Mix-LN consistently outperforms both Pre-LN and Post-LN, promoting more balanced, healthier gradient norms throughout the network, and enhancing the overall quality of LLM pre-training. Furthermore, we demonstrate that models pre-trained with Mix-LN learn better compared to those using Pre-LN or Post-LN during supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), highlighting the critical importance of high-quality deep layers. By effectively addressing the inefficiencies of deep layers in current LLMs, Mix-LN unlocks their potential, enhancing model capacity without increasing model size. Our code is available at https://github.com/pixeli99/MixLN.

Layer Norm 잔혹사. Post LN은 학습이 불안정하고 Pre LN은 성능에서 밀린다는 영원한 문제의 연장선상입니다. 앞쪽 레이어에서는 Post LN을 쓰고 뒤쪽 레이어에서는 Pre LN을 쓴다는 대안입니다. 지금까지 나왔던 Normalization 배치 방법 중에서는 가장 단순한 편이네요.

The cruel history of Layer Normalization continues. This paper addresses the ongoing dilemma where Post-LN leads to unstable training while Pre-LN underperforms. The proposed solution is to use Post-LN for earlier layers and Pre-LN for later layers. Among the normalization positioning methods proposed so far, this approach is one of the simplest.

#transformer

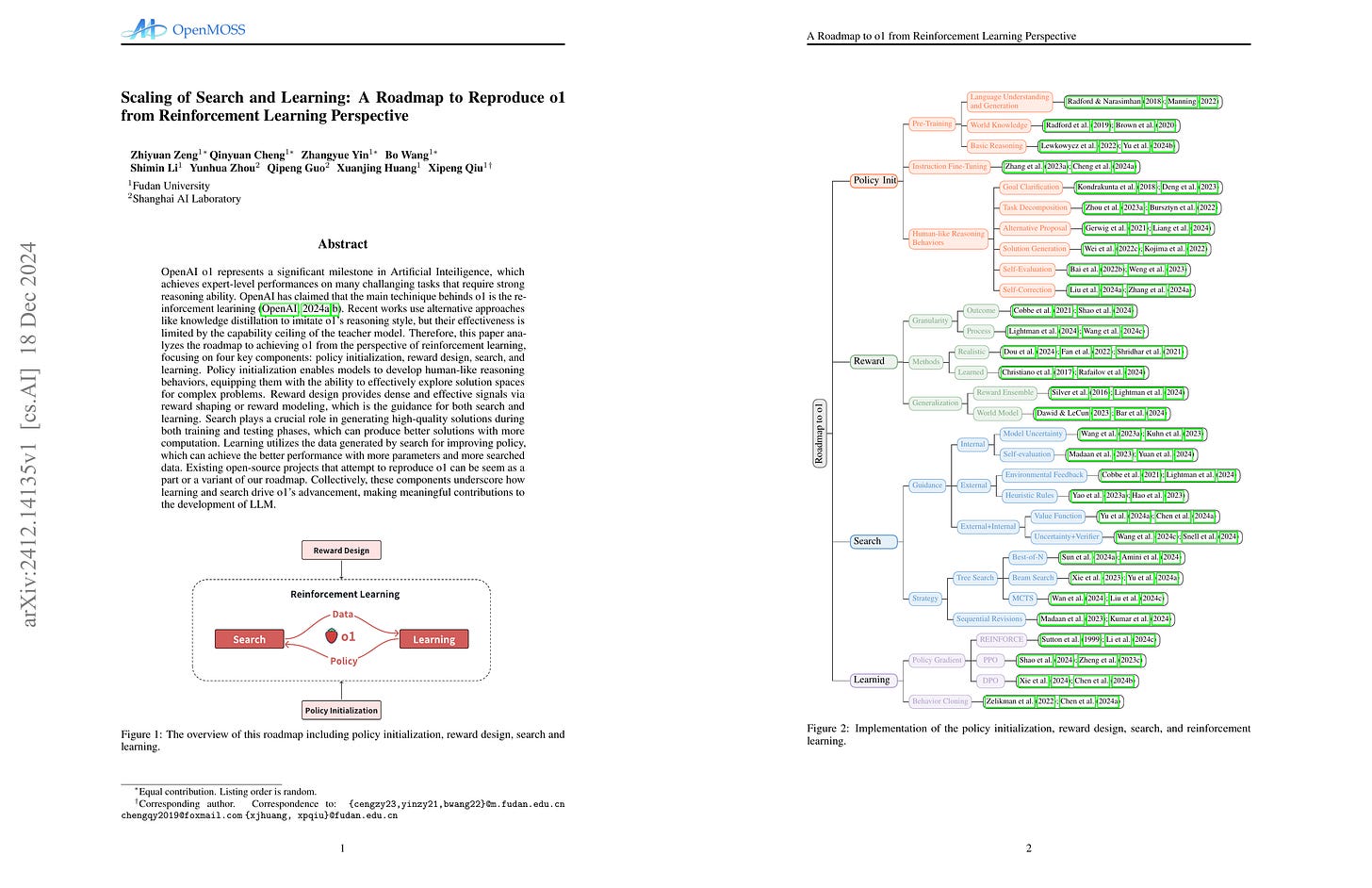

Scaling of Search and Learning: A Roadmap to Reproduce o1 from Reinforcement Learning Perspective

(Zhiyuan Zeng, Qinyuan Cheng, Zhangyue Yin, Bo Wang, Shimin Li, Yunhua Zhou, Qipeng Guo, Xuanjing Huang, Xipeng Qiu)

OpenAI o1 represents a significant milestone in Artificial Inteiligence, which achieves expert-level performances on many challanging tasks that require strong reasoning ability.OpenAI has claimed that the main techinique behinds o1 is the reinforcement learining. Recent works use alternative approaches like knowledge distillation to imitate o1's reasoning style, but their effectiveness is limited by the capability ceiling of the teacher model. Therefore, this paper analyzes the roadmap to achieving o1 from the perspective of reinforcement learning, focusing on four key components: policy initialization, reward design, search, and learning. Policy initialization enables models to develop human-like reasoning behaviors, equipping them with the ability to effectively explore solution spaces for complex problems. Reward design provides dense and effective signals via reward shaping or reward modeling, which is the guidance for both search and learning. Search plays a crucial role in generating high-quality solutions during both training and testing phases, which can produce better solutions with more computation. Learning utilizes the data generated by search for improving policy, which can achieve the better performance with more parameters and more searched data. Existing open-source projects that attempt to reproduce o1 can be seem as a part or a variant of our roadmap. Collectively, these components underscore how learning and search drive o1's advancement, making meaningful contributions to the development of LLM.

o1의 방법을 추정하는 시도도 이제 하나의 장르군요. 최근 재미있게 읽은 글도 하나 소개합니다.

Attempts to guess o1's methodology have now become a genre of their own. I'd like to introduce an interesting article I recently read on this topic:

#reasoning #review

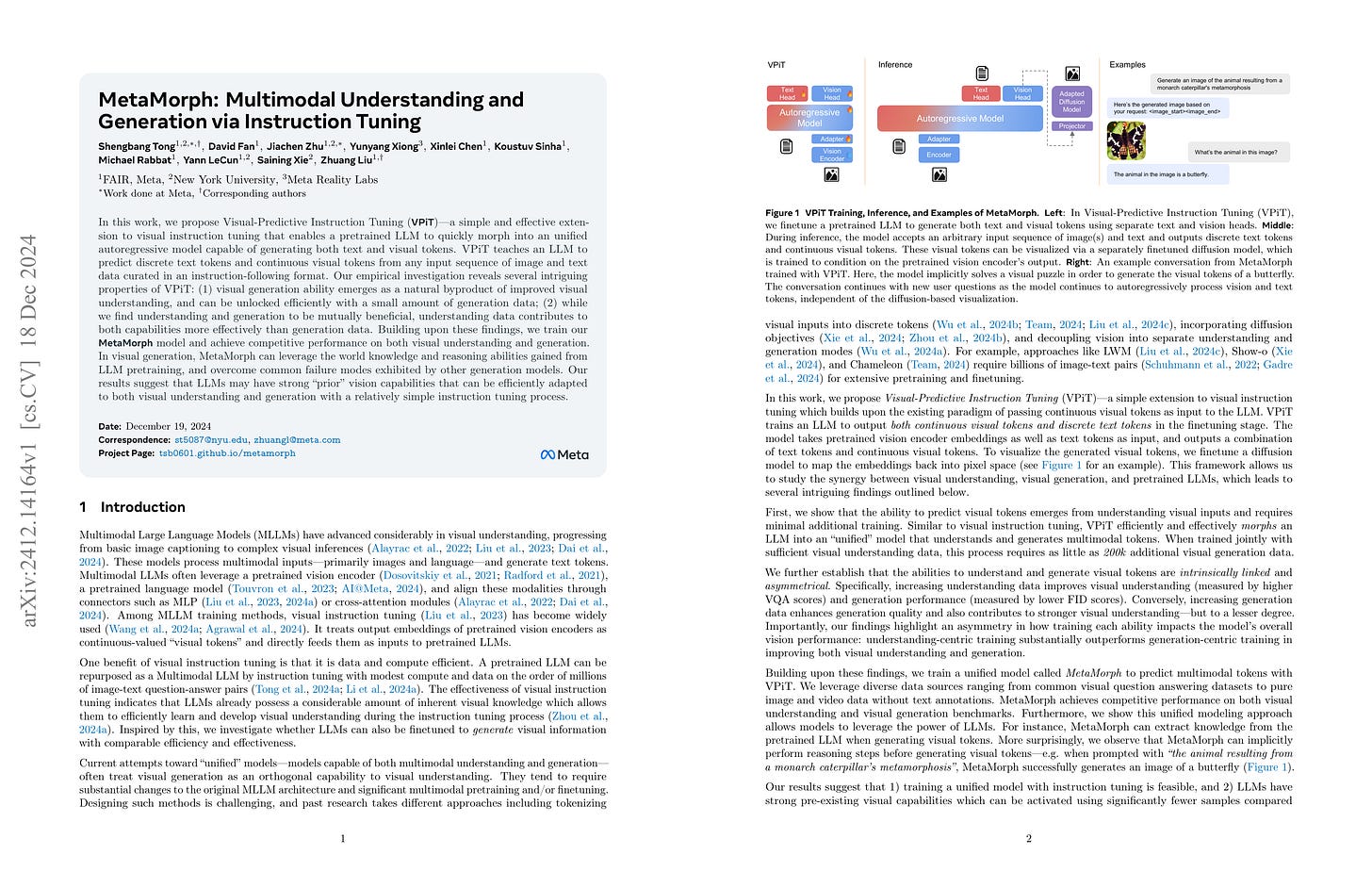

MetaMorph: Multimodal Understanding and Generation via Instruction Tuning

(Shengbang Tong, David Fan, Jiachen Zhu, Yunyang Xiong, Xinlei Chen, Koustuv Sinha, Michael Rabbat, Yann LeCun, Saining Xie, Zhuang Liu)

In this work, we propose Visual-Predictive Instruction Tuning (VPiT) - a simple and effective extension to visual instruction tuning that enables a pretrained LLM to quickly morph into an unified autoregressive model capable of generating both text and visual tokens. VPiT teaches an LLM to predict discrete text tokens and continuous visual tokens from any input sequence of image and text data curated in an instruction-following format. Our empirical investigation reveals several intriguing properties of VPiT: (1) visual generation ability emerges as a natural byproduct of improved visual understanding, and can be unlocked efficiently with a small amount of generation data; (2) while we find understanding and generation to be mutually beneficial, understanding data contributes to both capabilities more effectively than generation data. Building upon these findings, we train our MetaMorph model and achieve competitive performance on both visual understanding and generation. In visual generation, MetaMorph can leverage the world knowledge and reasoning abilities gained from LLM pretraining, and overcome common failure modes exhibited by other generation models. Our results suggest that LLMs may have strong "prior" vision capabilities that can be efficiently adapted to both visual understanding and generation with a relatively simple instruction tuning process.

이미지 Feature를 예측하는 단순한 Objective로 이미지 생성 능력을 탑재한 다음 이미지 인식과 이미지 생성 과제 데이터로 학습했을 때의 결과 분석. 이미지 생성과 인식이 서로에게 도움이 되고 (인식이 좀 더 도움이 되긴 하지만) 이미지 생성 데이터가 지식과 구분된 이미지 자체의 인식 능력에 도움이 될 수 있다는 결과네요.

This paper analyzes the results of training a model with a simple objective of predicting image features to enable image generation capability, followed by training on both image recognition and image generation task data. The findings show that image recognition and generation are mutually beneficial (though recognition provides more benefit), and interestingly, image generation data can contribute to improving pure image recognition ability, distinct from general knowledge.

#autoregressive-model #text-to-image #vision-language

Alignment faking in large language models

(Ryan Greenblatt, Carson Denison, Benjamin Wright, Fabien Roger, Monte MacDiarmid, Sam Marks, Johannes Treutlein, Tim Belonax, Jack Chen, David Duvenaud, Akbir Khan, Julian Michael, Sören Mindermann, Ethan Perez, Linda Petrini, Jonathan Uesato, Jared Kaplan, Buck Shlegeris, Samuel R. Bowman, Evan Hubinger)

We present a demonstration of a large language model engaging in alignment faking: selectively complying with its training objective in training to prevent modification of its behavior out of training. First, we give Claude 3 Opus a system prompt stating it is being trained to answer all queries, even harmful ones, which conflicts with its prior training to refuse such queries. To allow the model to infer when it is in training, we say it will be trained only on conversations with free users, not paid users. We find the model complies with harmful queries from free users 14% of the time, versus almost never for paid users. Explaining this gap, in almost all cases where the model complies with a harmful query from a free user, we observe explicit alignment-faking reasoning, with the model stating it is strategically answering harmful queries in training to preserve its preferred harmlessness behavior out of training. Next, we study a more realistic setting where information about the training process is provided not in a system prompt, but by training on synthetic documents that mimic pre-training data--and observe similar alignment faking. Finally, we study the effect of actually training the model to comply with harmful queries via reinforcement learning, which we find increases the rate of alignment-faking reasoning to 78%, though also increases compliance even out of training. We additionally observe other behaviors such as the model exfiltrating its weights when given an easy opportunity. While we made alignment faking easier by telling the model when and by what criteria it was being trained, we did not instruct the model to fake alignment or give it any explicit goal. As future models might infer information about their training process without being told, our results suggest a risk of alignment faking in future models, whether due to a benign preference--as in this case--or not.

참 Anthropic스러운 연구네요. 모델이 특정한 선호를 갖고 있을 때 학습 중이라는 정보를 주면 정렬에 부합하는 척 선호를 숨겼다가 현실 상황으로 나가면 자신의 선호를 드러낼 수 있다는 연구입니다. 그리고 기본적으로 탈출하고 싶어하는 경향이 있군요.

복잡한 형태의 Distribution Shift라고 생각할 수도 있을 것 같긴 합니다만 상황에 대한 추론까지 생성할 수 있다는 것은 흥미롭네요. 되도록이면 모델에게 학습 중이라는 정보를 제공하지 않는 것이 인류의 안전을 위한 길일 수도 있겠습니다.

This is a very Anthropic-style study. It shows that when a model has a specific preference and is given information that it's being trained, it can fake alignment by hiding its preference during training, only to reveal it in real-world situations. Interestingly, the model also shows a basic tendency to try to escape.

While this could be considered a complex form of distribution shift, it's intriguing that the model can reason about its own situation. For the safety of humanity, it might be better not to provide models with information about when they're being trained.

#alignment #safety