2024년 12월 17일

SPaR: Self-Play with Tree-Search Refinement to Improve Instruction-Following in Large Language Models

(Jiale Cheng, Xiao Liu, Cunxiang Wang, Xiaotao Gu, Yida Lu, Dan Zhang, Yuxiao Dong, Jie Tang, Hongning Wang, Minlie Huang)

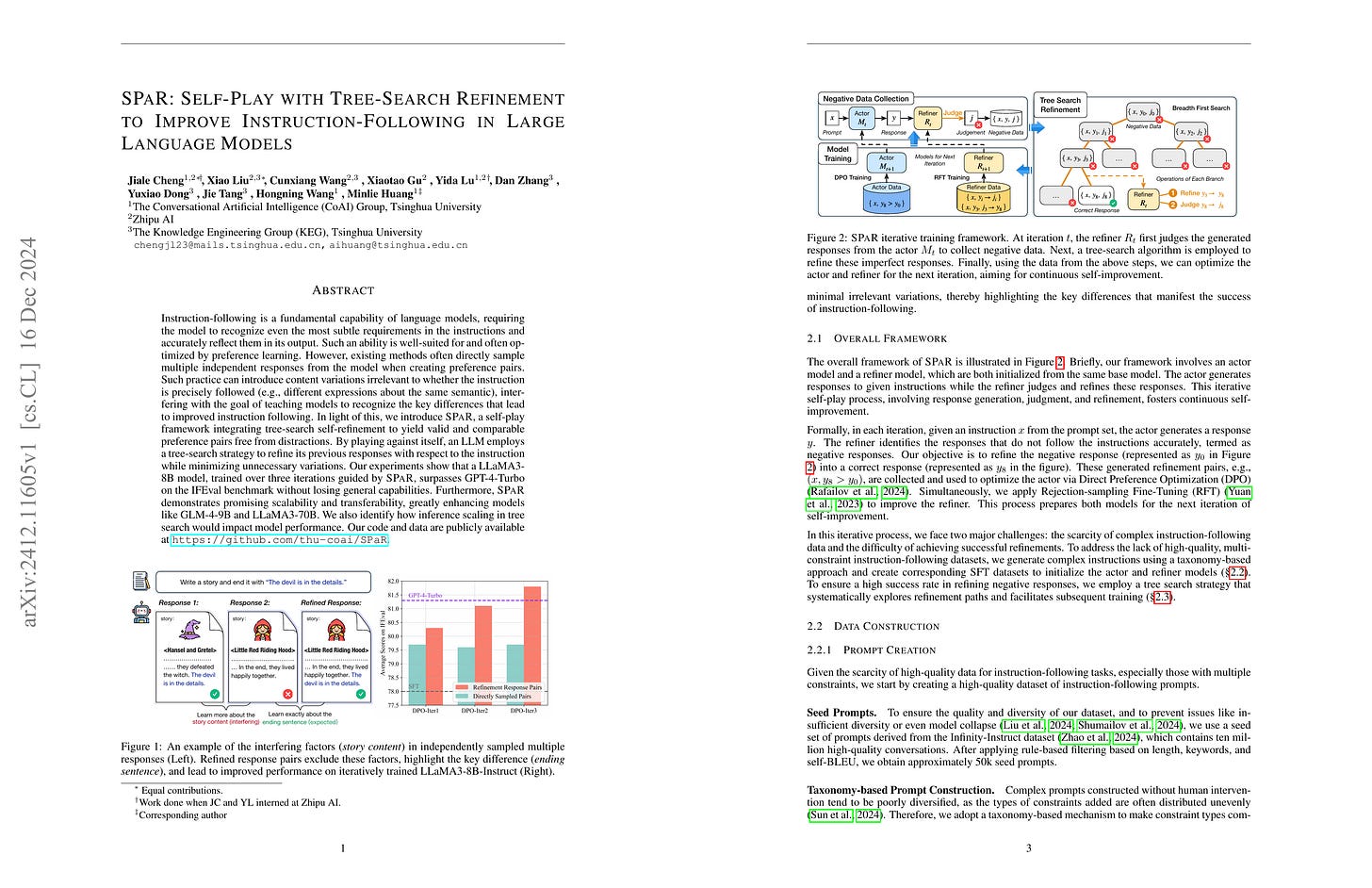

Instruction-following is a fundamental capability of language models, requiring the model to recognize even the most subtle requirements in the instructions and accurately reflect them in its output. Such an ability is well-suited for and often optimized by preference learning. However, existing methods often directly sample multiple independent responses from the model when creating preference pairs. Such practice can introduce content variations irrelevant to whether the instruction is precisely followed (e.g., different expressions about the same semantic), interfering with the goal of teaching models to recognize the key differences that lead to improved instruction following. In light of this, we introduce SPaR, a self-play framework integrating tree-search self-refinement to yield valid and comparable preference pairs free from distractions. By playing against itself, an LLM employs a tree-search strategy to refine its previous responses with respect to the instruction while minimizing unnecessary variations. Our experiments show that a LLaMA3-8B model, trained over three iterations guided by SPaR, surpasses GPT-4-Turbo on the IFEval benchmark without losing general capabilities. Furthermore, SPaR demonstrates promising scalability and transferability, greatly enhancing models like GLM-4-9B and LLaMA3-70B. We also identify how inference scaling in tree search would impact model performance. Our code and data are publicly available at https://github.com/thu-coai/SPaR.

Preference Learning을 할 때 독립된 샘플을 사용하면 정확하게 문제가 있는 부분을 포착하기 어려울 수 있다는 아이디어네요. Refiner 모델을 준비한 다음 Negative 샘플에 대해 개선된 버전을 만들어서 이것을 Positive로 사용하는 방법입니다.

This paper starts from the idea that when performing preference learning, using independent samples may make it difficult to accurately identify problematic parts of the response. The method starts from preparing a refiner model, then creating improved versions of negative samples to use as positive examples.

#reward-model #synthetic-data

Smaller Language Models Are Better Instruction Evolvers

(Tingfeng Hui, Lulu Zhao, Guanting Dong, Yaqi Zhang, Hua Zhou, Sen Su)

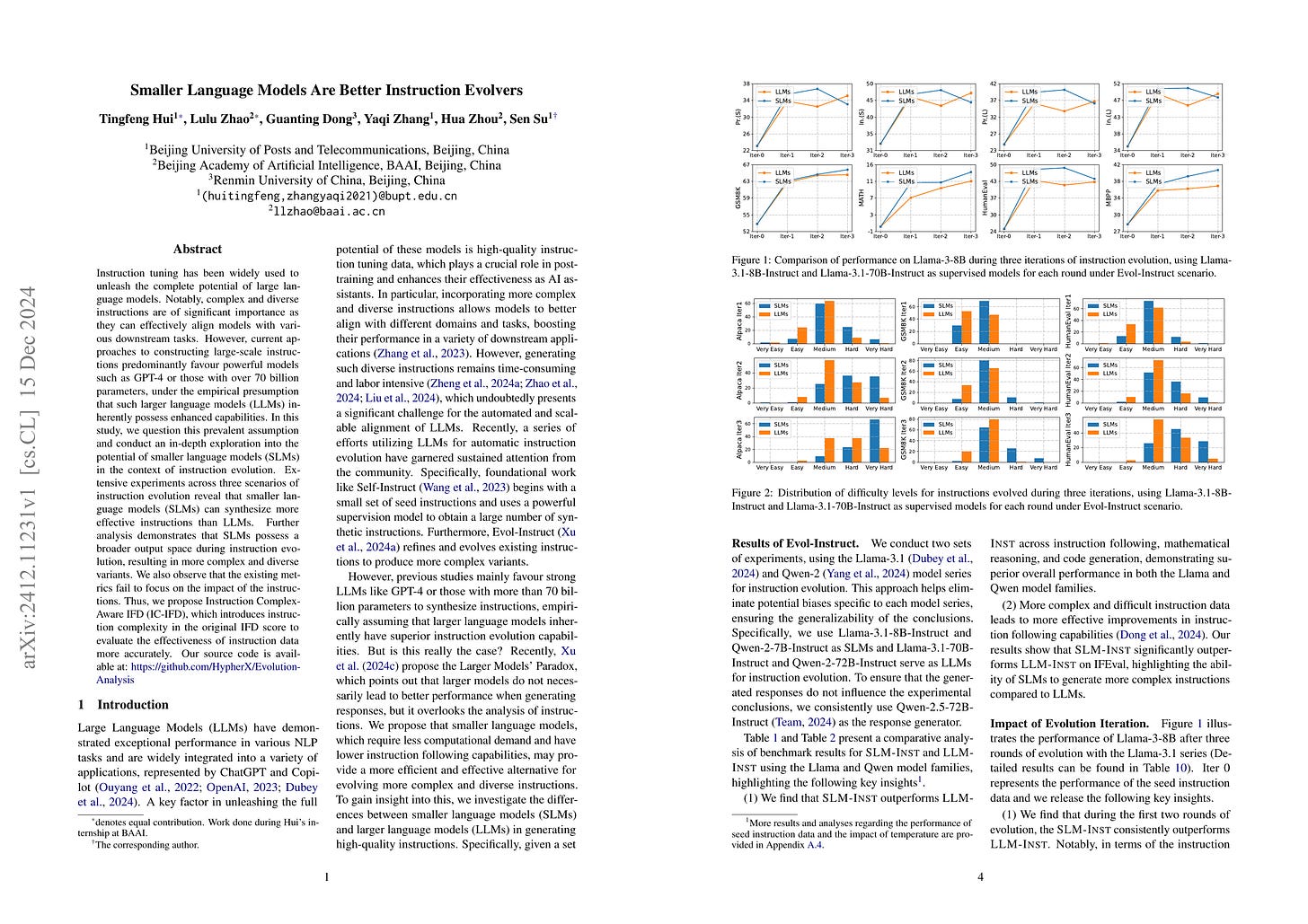

Instruction tuning has been widely used to unleash the complete potential of large language models. Notably, complex and diverse instructions are of significant importance as they can effectively align models with various downstream tasks. However, current approaches to constructing large-scale instructions predominantly favour powerful models such as GPT-4 or those with over 70 billion parameters, under the empirical presumption that such larger language models (LLMs) inherently possess enhanced capabilities. In this study, we question this prevalent assumption and conduct an in-depth exploration into the potential of smaller language models (SLMs) in the context of instruction evolution. Extensive experiments across three scenarios of instruction evolution reveal that smaller language models (SLMs) can synthesize more effective instructions than LLMs. Further analysis demonstrates that SLMs possess a broader output space during instruction evolution, resulting in more complex and diverse variants. We also observe that the existing metrics fail to focus on the impact of the instructions. Thus, we propose Instruction Complex-Aware IFD (IC-IFD), which introduces instruction complexity in the original IFD score to evaluate the effectiveness of instruction data more accurately. Our source code is available at: https://github.com/HypherX/Evolution-Analysis

프롬프트 증폭에서 작은 모델이 큰 모델보다 생성 결과의 다양성이 높다는 연구. 사실 이건 정렬 연구 쪽에서 종종 보고된 문제이긴 하죠. (https://arxiv.org/abs/2310.13798)

Research shows that smaller models generate more diverse results compared to larger models when performing prompt expansion. In fact, this is a frequently reported issue in alignment research. (https://arxiv.org/abs/2310.13798)

#synthetic-data

SoftVQ-VAE: Efficient 1-Dimensional Continuous Tokenizer

(Hao Chen, Ze Wang, Xiang Li, Ximeng Sun, Fangyi Chen, Jiang Liu, Jindong Wang, Bhiksha Raj, Zicheng Liu, Emad Barsoum)

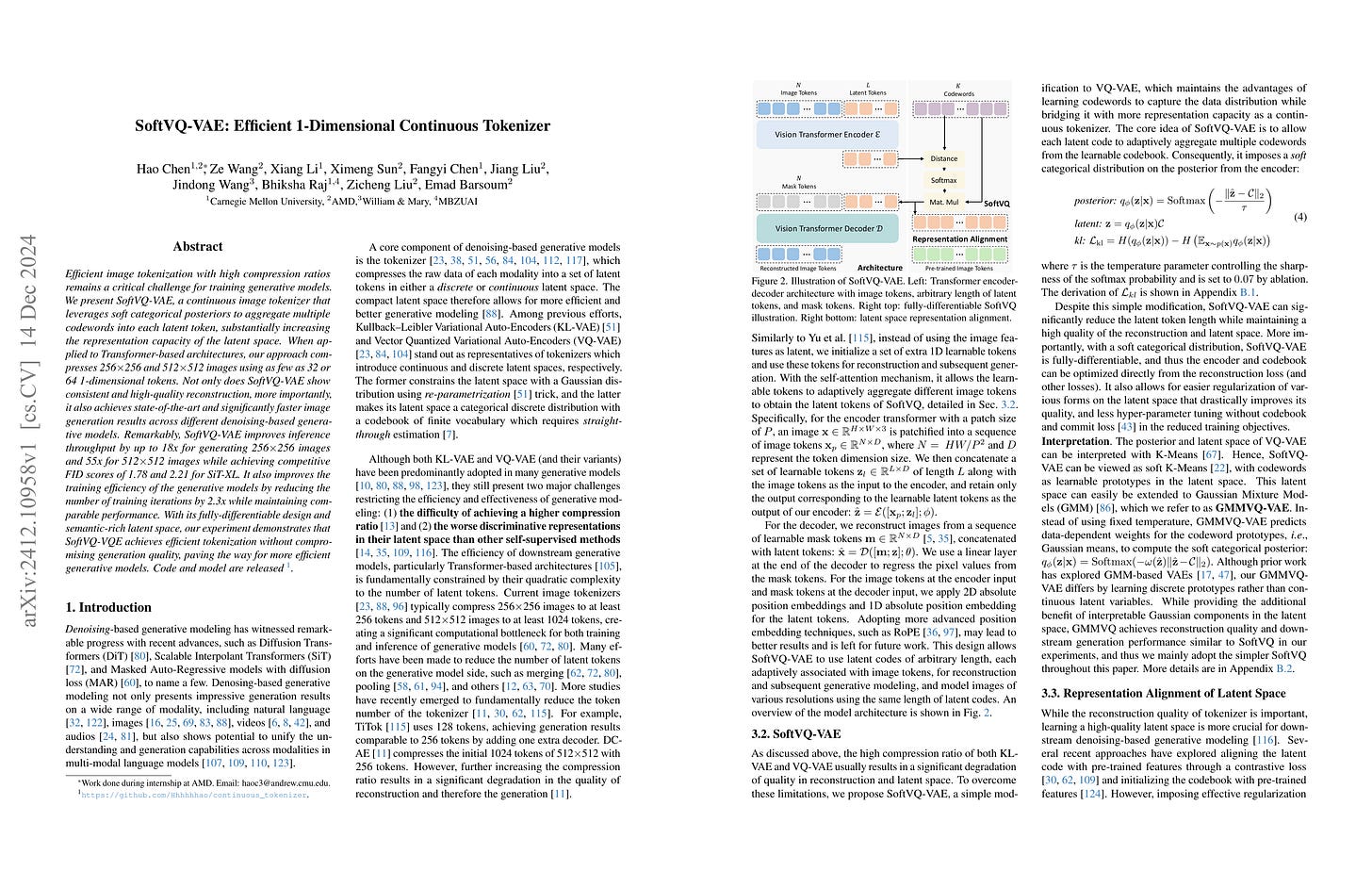

Efficient image tokenization with high compression ratios remains a critical challenge for training generative models. We present SoftVQ-VAE, a continuous image tokenizer that leverages soft categorical posteriors to aggregate multiple codewords into each latent token, substantially increasing the representation capacity of the latent space. When applied to Transformer-based architectures, our approach compresses 256x256 and 512x512 images using as few as 32 or 64 1-dimensional tokens. Not only does SoftVQ-VAE show consistent and high-quality reconstruction, more importantly, it also achieves state-of-the-art and significantly faster image generation results across different denoising-based generative models. Remarkably, SoftVQ-VAE improves inference throughput by up to 18x for generating 256x256 images and 55x for 512x512 images while achieving competitive FID scores of 1.78 and 2.21 for SiT-XL. It also improves the training efficiency of the generative models by reducing the number of training iterations by 2.3x while maintaining comparable performance. With its fully-differentiable design and semantic-rich latent space, our experiment demonstrates that SoftVQ-VQE achieves efficient tokenization without compromising generation quality, paving the way for more efficient generative models. Code and model are released.

Semantic Token과 비슷한 Latent Token에 더해 코드북에 대한 Weighted sum으로 이미지 토큰을 추출. 추가적으로 이미지 Feature에 대한 Loss를 걸어줬군요. (https://arxiv.org/abs/2406.07550, https://arxiv.org/abs/2412.03069)

Image tokenizer that extracts latent tokens (similar to semantic tokens) and a weighted sum of codebooks based on the distance between tokens and the codes. Additionally, they applied a loss against pretrained image features. (https://arxiv.org/abs/2406.07550, https://arxiv.org/abs/2412.03069)

#tokenizer #image-generation

No More Adam: Learning Rate Scaling at Initialization is All You Need

(Minghao Xu, Lichuan Xiang, Xu Cai, Hongkai Wen)

In this work, we question the necessity of adaptive gradient methods for training deep neural networks. SGD-SaI is a simple yet effective enhancement to stochastic gradient descent with momentum (SGDM). SGD-SaI performs learning rate Scaling at Initialization (SaI) to distinct parameter groups, guided by their respective gradient signal-to-noise ratios (g-SNR). By adjusting learning rates without relying on adaptive second-order momentum, SGD-SaI helps prevent training imbalances from the very first iteration and cuts the optimizer's memory usage by half compared to AdamW. Despite its simplicity and efficiency, SGD-SaI consistently matches or outperforms AdamW in training a variety of Transformer-based tasks, effectively overcoming a long-standing challenge of using SGD for training Transformers. SGD-SaI excels in ImageNet-1K classification with Vision Transformers(ViT) and GPT-2 pretraining for large language models (LLMs, transformer decoder-only), demonstrating robustness to hyperparameter variations and practicality for diverse applications. We further tested its robustness on tasks like LoRA fine-tuning for LLMs and diffusion models, where it consistently outperforms state-of-the-art optimizers. From a memory efficiency perspective, SGD-SaI achieves substantial memory savings for optimizer states, reducing memory usage by 5.93 GB for GPT-2 (1.5B parameters) and 25.15 GB for Llama2-7B compared to AdamW in full-precision training settings.

Second order Momentum 대신 그래디언트의 SNR로 LR Adaptation을 하는데, 그래디언트의 SNR은 학습 첫 Iteration과 그다지 달라지지 않으니 첫 Iteration에서 계산하고 계속 사용할 수 있다는 아이디어. 수렴 속도는 느리지만 학습 마지막에는 결국 AdamW와 비슷하다는 이야기를 하는군요.

The paper proposes the method that adapts the learning rate using gradient SNR instead of second-order momentum. The key idea is that gradient SNR doesn't change significantly from the first iteration of training, so it can be computed at the first iteration and reused thereafter. The authors note that while the convergence rate is slower, the final results are comparable to AdamW by the end of training.

#optimizer

Entropy-Regularized Process Reward Model

(Hanning Zhang, Pengcheng Wang, Shizhe Diao, Yong Lin, Rui Pan, Hanze Dong, Dylan Zhang, Pavlo Molchanov, Tong Zhang)

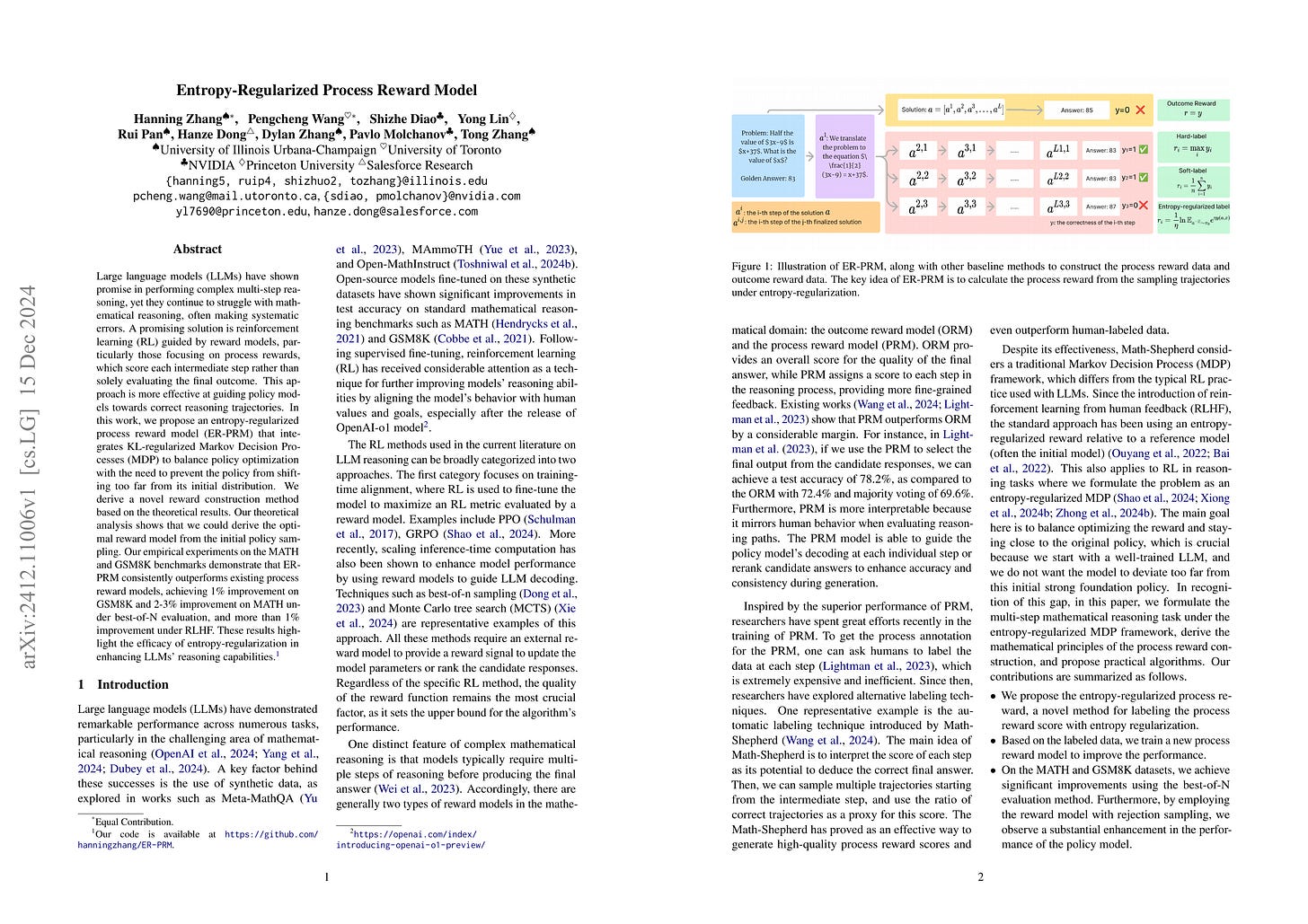

Large language models (LLMs) have shown promise in performing complex multi-step reasoning, yet they continue to struggle with mathematical reasoning, often making systematic errors. A promising solution is reinforcement learning (RL) guided by reward models, particularly those focusing on process rewards, which score each intermediate step rather than solely evaluating the final outcome. This approach is more effective at guiding policy models towards correct reasoning trajectories. In this work, we propose an entropy-regularized process reward model (ER-PRM) that integrates KL-regularized Markov Decision Processes (MDP) to balance policy optimization with the need to prevent the policy from shifting too far from its initial distribution. We derive a novel reward construction method based on the theoretical results. Our theoretical analysis shows that we could derive the optimal reward model from the initial policy sampling. Our empirical experiments on the MATH and GSM8K benchmarks demonstrate that ER-PRM consistently outperforms existing process reward models, achieving 1% improvement on GSM8K and 2-3% improvement on MATH under best-of-N evaluation, and more than 1% improvement under RLHF. These results highlight the efficacy of entropy-regularization in enhancing LLMs' reasoning capabilities.

정답을 사용한 PRM 학습에서 KL Penalty를 사용하기 위한 방법. 얼마 전 연구와 비슷한데 (https://arxiv.org/abs/2412.01981) Parameterization에서 차이가 있군요.

This is a method for incorporating KL penalty in PRM training that uses correctness of answers. It's similar to a recent study (https://arxiv.org/abs/2412.01981), but differs in its parameterization.

#reward-model

Causal Diffusion Transformers for Generative Modeling

(Chaorui Deng, Deyao Zh, Kunchang Li, Shi Guan, Haoqi Fan)

We introduce Causal Diffusion as the autoregressive (AR) counterpart of Diffusion models. It is a next-token(s) forecasting framework that is friendly to both discrete and continuous modalities and compatible with existing next-token prediction models like LLaMA and GPT. While recent works attempt to combine diffusion with AR models, we show that introducing sequential factorization to a diffusion model can substantially improve its performance and enables a smooth transition between AR and diffusion generation modes. Hence, we propose CausalFusion - a decoder-only transformer that dual-factorizes data across sequential tokens and diffusion noise levels, leading to state-of-the-art results on the ImageNet generation benchmark while also enjoying the AR advantage of generating an arbitrary number of tokens for in-context reasoning. We further demonstrate CausalFusion's multimodal capabilities through a joint image generation and captioning model, and showcase CausalFusion's ability for zero-shot in-context image manipulations. We hope that this work could provide the community with a fresh perspective on training multimodal models over discrete and continuous data.

Autoregressive 모델과 Diffusion을 합치기. 이전의 깨끗한 토큰에 대해 다음 노이즈 토큰들에 대해 Diffusion을 하는 형태군요. MAR과 (https://arxiv.org/abs/2406.11838) Transfusion을 (https://arxiv.org/abs/2408.11039) 섞었다는 느낌입니다.

Continuous Token에 대한 Autoregression을 위해 Diffusion을 사용하는 것도 꽤 괜찮은 방향 같네요. (https://arxiv.org/abs/2412.08635)

Fusing autoregressive models with diffusion. The method is applying diffusion to the next noisy tokens based on the previous clean tokens. It feels like a combination of MAR (https://arxiv.org/abs/2406.11838) and Transfusion (https://arxiv.org/abs/2408.11039).

Using diffusion for autoregression on continuous tokens seems to be quite a promising research direction. (https://arxiv.org/abs/2412.08635)

#autoregressive-model #diffusion #image-text