2024년 11월 21일

Loss-to-Loss Prediction: Scaling Laws for All Datasets

(David Brandfonbrener, Nikhil Anand, Nikhil Vyas, Eran Malach, Sham Kakade)

While scaling laws provide a reliable methodology for predicting train loss across compute scales for a single data distribution, less is known about how these predictions should change as we change the distribution. In this paper, we derive a strategy for predicting one loss from another and apply it to predict across different pre-training datasets and from pre-training data to downstream task data. Our predictions extrapolate well even at 20x the largest FLOP budget used to fit the curves. More precisely, we find that there are simple shifted power law relationships between (1) the train losses of two models trained on two separate datasets when the models are paired by training compute (train-to-train), (2) the train loss and the test loss on any downstream distribution for a single model (train-to-test), and (3) the test losses of two models trained on two separate train datasets (test-to-test). The results hold up for pre-training datasets that differ substantially (some are entirely code and others have no code at all) and across a variety of downstream tasks. Finally, we find that in some settings these shifted power law relationships can yield more accurate predictions than extrapolating single-dataset scaling laws.

아주 흥미로운 결과네요. 하나의 데이터셋에 대한 Loss를 다른 데이터셋에 대한 Loss로 변환하는 Scaling Law입니다. 따라서 학습 데이터셋 사이에서 또는 학습 데이터셋과 평가 데이터셋에서, 그리고 서로 다른 데이터셋에 대해 학습한 모델의 평가 데이터셋에 대한 Loss의 관계를 추정할 수 있습니다.

기본적인 함수형은 Kaplan Scaling Law를 엔트로피의 하한으로 평행이동하고 지수를 도입한 형태네요.

These are very interesting results. This is a scaling law that transforms the loss from one dataset to another. As a result, we can estimate the relationship between losses across training datasets, between training and evaluation datasets, and even between models trained on different datasets when evaluated on a test set.

The basic functional form is based on the Kaplan scaling law, but it's shifted by a lower bound of entropy and introduces an exponent.

#scaling-law

When Precision Meets Position: BFloat16 Breaks Down RoPE in Long-Context Training

(Haonan Wang, Qian Liu, Chao Du, Tongyao Zhu, Cunxiao Du, Kenji Kawaguchi, Tianyu Pang)

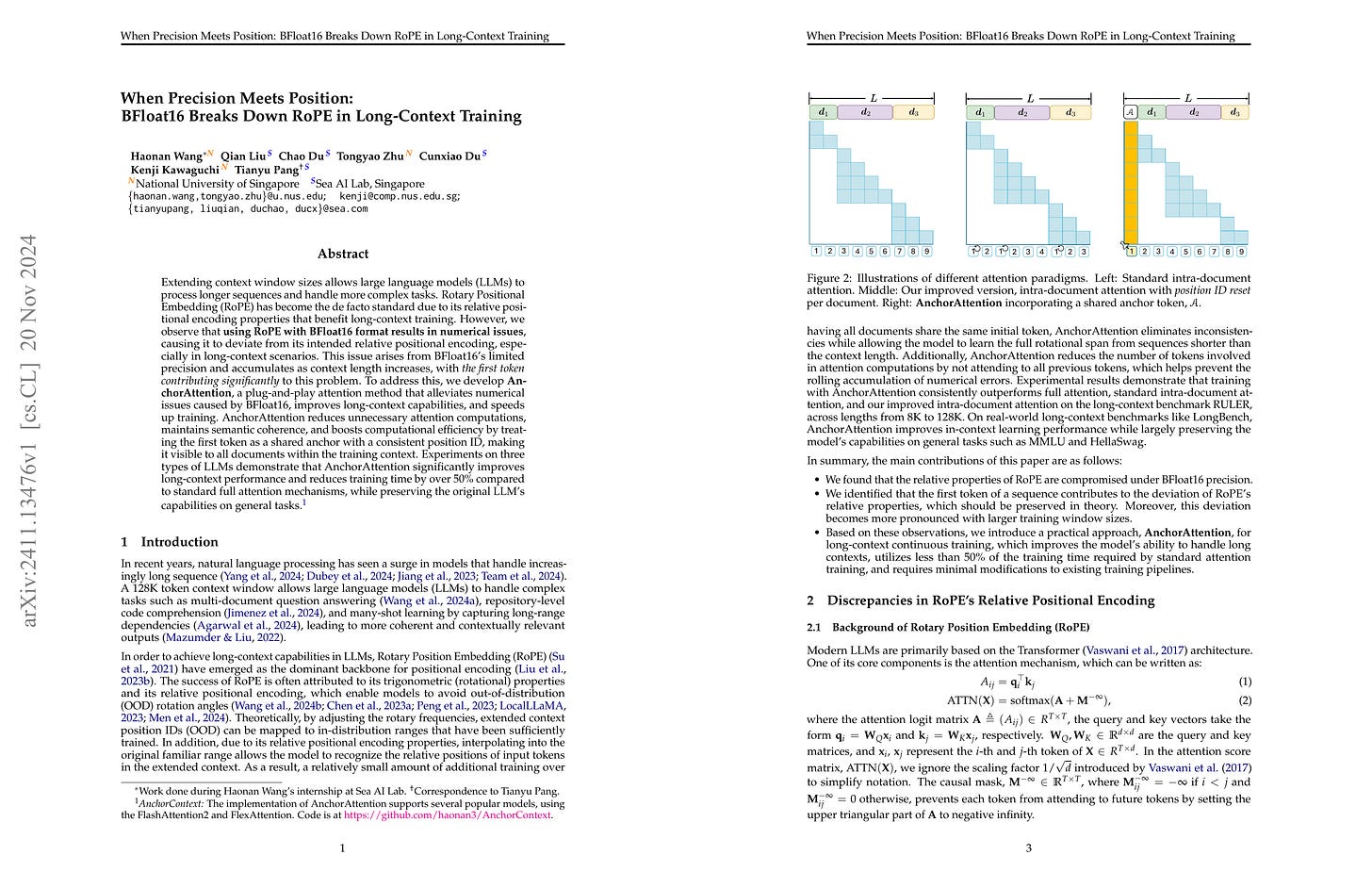

Extending context window sizes allows large language models (LLMs) to process longer sequences and handle more complex tasks. Rotary Positional Embedding (RoPE) has become the de facto standard due to its relative positional encoding properties that benefit long-context training. However, we observe that using RoPE with BFloat16 format results in numerical issues, causing it to deviate from its intended relative positional encoding, especially in long-context scenarios. This issue arises from BFloat16's limited precision and accumulates as context length increases, with the first token contributing significantly to this problem. To address this, we develop AnchorAttention, a plug-and-play attention method that alleviates numerical issues caused by BFloat16, improves long-context capabilities, and speeds up training. AnchorAttention reduces unnecessary attention computations, maintains semantic coherence, and boosts computational efficiency by treating the first token as a shared anchor with a consistent position ID, making it visible to all documents within the training context. Experiments on three types of LLMs demonstrate that AnchorAttention significantly improves long-context performance and reduces training time by over 50% compared to standard full attention mechanisms, while preserving the original LLM's capabilities on general tasks. Our code is available at https://github.com/haonan3/AnchorContext.

BFloat16 + RoPE에서 발생하는 문제. 분석이 좀 많습니다. Long Context 문제를 태클하다가 발견한 것이 아닌가 싶네요.

BFloat16으로 RoPE를 사용하면 RoPE의 Relative Positional Encoding으로서의 효과가 떨어진다는 것이 요점입니다. 즉 위치를 이동하면 Attention이 크게 달라지는 것이죠. Long Context로 갈수록 이 효과가 더 커진다고 합니다. 그리고 가장 큰 차이는 첫 토큰에 대한 Attention에서 발생한다고 하네요.

따라서 문서 내 마스킹으로 Context Length를 줄이고 각 문서마다 위치 ID를 0으로 리셋하면 이 문제를 개선할 수 있지만 다양한 위치 ID에 대한 학습이 적어지죠. 이에 대해 레지스터 토큰을 사용하면 된다는 아이디어입니다. 결과적으로는 BoS 토큰을 쓰자는 것이죠.

딥 러닝 판은 저정밀도 연산에 워낙 익숙해서 대체로 간과되긴 하지만 (그리고 대체로 큰 문제가 없기도 하죠.) 가끔 수치 문제가 이렇게 기습할 때가 있습니다.

Issues with BFloat16 + RoPE. This paper contains many analysis. I suspect this was discovered while tackling long context tasks.

The key point is that using RoPE with BFloat16 reduces its effectiveness as a relative positional encoding. This means attention values change significantly when positions are shifted. This effect intensifies with longer contexts. The most substantial difference occurs in the attention to the first token.

To mitigate this, one could limit context length through intra-document attention masking and reset position IDs to 0 for each document. However, this approach limits training on various position IDs due to it would be bounded by document length. The paper proposes using register tokens, essentially introducing BoS tokens.

While low-precision computations are commonplace in deep learning and often overlooked (usually without major issues), numerical precision problems can occasionally catch us off guard.

#positional-encoding

REDUCIO! Generating 1024×1024 Video within 16 Seconds using Extremely Compressed Motion Latents

(Rui Tian, Qi Dai, Jianmin Bao, Kai Qiu, Yifan Yang, Chong Luo, Zuxuan Wu, Yu-Gang Jiang)

Commercial video generation models have exhibited realistic, high-fidelity results but are still restricted to limited access. One crucial obstacle for large-scale applications is the expensive training and inference cost. In this paper, we argue that videos contain much more redundant information than images, thus can be encoded by very few motion latents based on a content image. Towards this goal, we design an image-conditioned VAE to encode a video to an extremely compressed motion latent space. This magic Reducio charm enables 64x reduction of latents compared to a common 2D VAE, without sacrificing the quality. Training diffusion models on such a compact representation easily allows for generating 1K resolution videos. We then adopt a two-stage video generation paradigm, which performs text-to-image and text-image-to-video sequentially. Extensive experiments show that our Reducio-DiT achieves strong performance in evaluation, though trained with limited GPU resources. More importantly, our method significantly boost the efficiency of video LDMs both in training and inference. We train Reducio-DiT in around 3.2K training hours in total and generate a 16-frame 1024*1024 video clip within 15.5 seconds on a single A100 GPU. Code released at https://github.com/microsoft/Reducio-VAE .

비디오 토크나이저를 3D 인코더 하나를 사용하는 대신 이미지 인코더와 3D 인코더의 조합으로 구성했군요. 이미지 정보가 주어진다면 움직임과 관련된 정보는 훨씬 고압축 하는 것이 가능하다는 아이디어죠.

Instead of using a single 3D encoder, they designed the video tokenizer as a combination of an image encoder and a 3D encoder. The key idea is that if image information is provided, motion-related information can be compressed much more compactly

#video-generation #tokenizer

BALROG: Benchmarking Agentic LLM and VLM Reasoning On Games

(Davide Paglieri, Bartłomiej Cupiał, Samuel Coward, Ulyana Piterbarg, Maciej Wolczyk, Akbir Khan, Eduardo Pignatelli, Łukasz Kuciński, Lerrel Pinto, Rob Fergus, Jakob Nicolaus Foerster, Jack Parker-Holder, Tim Rocktäschel)

Large Language Models (LLMs) and Vision Language Models (VLMs) possess extensive knowledge and exhibit promising reasoning abilities; however, they still struggle to perform well in complex, dynamic environments. Real-world tasks require handling intricate interactions, advanced spatial reasoning, long-term planning, and continuous exploration of new strategies-areas in which we lack effective methodologies for comprehensively evaluating these capabilities. To address this gap, we introduce BALROG, a novel benchmark designed to assess the agentic capabilities of LLMs and VLMs through a diverse set of challenging games. Our benchmark incorporates a range of existing reinforcement learning environments with varying levels of difficulty, including tasks that are solvable by non-expert humans in seconds to extremely challenging ones that may take years to master (e.g., the NetHack Learning Environment). We devise fine-grained metrics to measure performance and conduct an extensive evaluation of several popular open-source and closed-source LLMs and VLMs. Our findings indicate that while current models achieve partial success in the easier games, they struggle significantly with more challenging tasks. Notably, we observe severe deficiencies in vision-based decision-making, as models perform worse when visual representations of the environments are provided. We release BALROG as an open and user-friendly benchmark to facilitate future research and development in the agentic community.

게임을 통해서 LLM/VLM의 에이전트로서의 성능을 평가하는 벤치마크. 어쩌면 게임과 강화학습이 LLM/VLM을 통해 귀환할지도 모르겠네요.

This is a benchmark that evaluates the agentic capabilities of LLMs and VLMs through games. It's possible that games and reinforcement learning might make a comeback through LLMs and VLMs.

#benchmark #agent

Training Bilingual LMs with Data Constraints in the Targeted Language

(Skyler Seto, Maartje ter Hoeve, He Bai, Natalie Schluter, David Grangier)

Large language models are trained on massive scrapes of the web, as required by current scaling laws. Most progress is made for English, given its abundance of high-quality pretraining data. For most other languages, however, such high quality pretraining data is unavailable. In this work, we study how to boost pretrained model performance in a data constrained target language by enlisting data from an auxiliary language for which high quality data is available. We study this by quantifying the performance gap between training with data in a data-rich auxiliary language compared with training in the target language, exploring the benefits of translation systems, studying the limitations of model scaling for data constrained languages, and proposing new methods for upsampling data from the auxiliary language. Our results show that stronger auxiliary datasets result in performance gains without modification to the model or training objective for close languages, and, in particular, that performance gains due to the development of more information-rich English pretraining datasets can extend to targeted language settings with limited data.

영어 데이터셋 같은 대량의 데이터셋을 확보할 수 있는 언어를 통한 데이터 양이 적은 언어에 대한 성능 개선 패턴 분석. 고품질의 영어 데이터셋을 활용하면 성능이 향상되는데 데이터셋을 걸러내는 것보다는 고가치의 정보가 포함되는 것이 중요하다는 것, 그리고 기계 번역 데이터 등이 도움이 된다는 결과네요. 다만 늘 그렇듯 언어마다 패턴이 다르다는 문제가 있긴 합니다.

This paper analyzes patterns of performance improvement for low-resource languages by leveraging languages with abundant datasets, such as English. The results show that utilizing high-quality English datasets can enhance performance, with the key factor being the inclusion of high-value information rather than merely filtering the dataset. Machine-translated data can also be beneficial. However, as always, there's a challenge in that patterns differ across languages.

#multilingual

Hardware Scaling Trends and Diminishing Returns in Large-Scale Distributed Training

(Jared Fernandez, Luca Wehrstedt, Leonid Shamis, Mostafa Elhoushi, Kalyan Saladi, Yonatan Bisk, Emma Strubell, Jacob Kahn)

Dramatic increases in the capabilities of neural network models in recent years are driven by scaling model size, training data, and corresponding computational resources. To develop the exceedingly large networks required in modern applications, such as large language models (LLMs), model training is distributed across tens of thousands of hardware accelerators (e.g. GPUs), requiring orchestration of computation and communication across large computing clusters. In this work, we demonstrate that careful consideration of hardware configuration and parallelization strategy is critical for effective (i.e. compute- and cost-efficient) scaling of model size, training data, and total computation. We conduct an extensive empirical study of the performance of large-scale LLM training workloads across model size, hardware configurations, and distributed parallelization strategies. We demonstrate that: (1) beyond certain scales, overhead incurred from certain distributed communication strategies leads parallelization strategies previously thought to be sub-optimal in fact become preferable; and (2) scaling the total number of accelerators for large model training quickly yields diminishing returns even when hardware and parallelization strategies are properly optimized, implying poor marginal performance per additional unit of power or GPU-hour.

클러스터 크기가 증가할수록 연산과 중첩할 수 없는 통신 비용이 학습 스루풋을 지배한다는 분석. 하드웨어가 발전할수록 연산력 증가에 비해 통신 속도의 증가는 더디기 때문에 상황이 더 안 좋아진다는 이야기입니다. 블랙웰에서 NVLink로 72개 GPU가 연결된 것이 도움이 될지도 모르겠네요.

As the cluster size increases, communication costs that cannot be overlapped with computation begin to dominate overall training throughput. As hardware advances, the increase in communication speed lags behind the increase in computational power, making the situation even worse. Perhaps the NVLink connecting 72 GPUs, introduced in Blackwell, could be helpful in addressing this issue.

#scaling #parallelism #efficient-training

MemoryFormer: Minimize Transformer Computation by Removing Fully-Connected Layers

(Ning Ding, Yehui Tang, Haochen Qin, Zhenli Zhou, Chao Xu, Lin Li, Kai Han, Heng Liao, Yunhe Wang)

In order to reduce the computational complexity of large language models, great efforts have been made to to improve the efficiency of transformer models such as linear attention and flash-attention. However, the model size and corresponding computational complexity are constantly scaled up in pursuit of higher performance. In this work, we present MemoryFormer, a novel transformer architecture which significantly reduces the computational complexity (FLOPs) from a new perspective. We eliminate nearly all the computations of the transformer model except for the necessary computation required by the multi-head attention operation. This is made possible by utilizing an alternative method for feature transformation to replace the linear projection of fully-connected layers. Specifically, we first construct a group of in-memory lookup tables that store a large amount of discrete vectors to replace the weight matrix used in linear projection. We then use a hash algorithm to retrieve a correlated subset of vectors dynamically based on the input embedding. The retrieved vectors combined together will form the output embedding, which provides an estimation of the result of matrix multiplication operation in a fully-connected layer. Compared to conducting matrix multiplication, retrieving data blocks from memory is a much cheaper operation which requires little computations. We train MemoryFormer from scratch and conduct extensive experiments on various benchmarks to demonstrate the effectiveness of the proposed model.

이진 코드 기반 해시 테이블로 FFN을 대체. Finite Scalar Quantization으로 FFN을 대체했다는 느낌이군요. Product Key Memory도 그렇고 요즘 이런 시도를 많이 하네요.

Replacing FFN with a hash table based on binary codes. It feels like they're replacing FFN with finite scalar quantization. Along with product key memory, we're seeing many similar approaches these days.

#transformer