November 20, 2024

Ultra-Sparse Memory Network

(Zihao Huang, Qiyang Min, Hongzhi Huang, Defa Zhu, Yutao Zeng, Ran Guo, Xun Zhou)

It is widely acknowledged that the performance of Transformer models is exponentially related to their number of parameters and computational complexity. While approaches like Mixture of Experts (MoE) decouple parameter count from computational complexity, they still face challenges in inference due to high memory access costs. This work introduces UltraMem, incorporating large-scale, ultra-sparse memory layer to address these limitations. Our approach significantly reduces inference latency while maintaining model performance. We also investigate the scaling laws of this new architecture, demonstrating that it not only exhibits favorable scaling properties but outperforms traditional models. In our experiments, we train networks with up to 20 million memory slots. The results show that our method achieves state-of-the-art inference speed and model performance within a given computational budget.

This paper uses product key memory (https://arxiv.org/abs/1907.05242) as its sparse layer. The authors add several improvements and combine it with tensor decomposition and memory amplification through linear projection. Another recent attempt to use product key memory is https://arxiv.org/abs/2407.04153.
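As a rough illustration of the lookup that product key memory relies on, here is a minimal pure-Python sketch of the basic mechanism from the original product key memory paper, not of UltraMem's modified version. The function and variable names (`product_key_lookup`, `sub_keys_1`, `sub_keys_2`) are assumptions made for this example. The query is split into two halves, each half is scored against a small set of sub-keys, and the top-k memory slots are then found among only k × k candidates instead of all slots.

```python
import math


def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def top_k(scores, k):
    """Indices of the k largest scores, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def product_key_lookup(query, sub_keys_1, sub_keys_2, values, k):
    """Sparse memory read with product keys (illustrative sketch).

    Slot (i, j) has the implicit key concat(sub_keys_1[i], sub_keys_2[j]),
    so its score is the sum of the two half-scores. `values` holds one
    value vector per slot, indexed by i * len(sub_keys_2) + j.
    """
    half = len(query) // 2
    q1, q2 = query[:half], query[half:]

    # Score each half of the query against its own small set of sub-keys.
    s1 = [dot(q1, key) for key in sub_keys_1]
    s2 = [dot(q2, key) for key in sub_keys_2]

    # The overall top-k slots must lie in the product of the per-half top-k.
    best1, best2 = top_k(s1, k), top_k(s2, k)
    n2 = len(sub_keys_2)
    candidates = [(s1[i] + s2[j], i * n2 + j) for i in best1 for j in best2]
    candidates.sort(reverse=True)
    selected = candidates[:k]

    # Softmax over the selected scores, then a weighted sum of their values.
    top_score = max(score for score, _ in selected)
    exps = [math.exp(score - top_score) for score, _ in selected]
    total = sum(exps)
    weights = [e / total for e in exps]

    out = [0.0] * len(values[0])
    for w, (_, slot) in zip(weights, selected):
        for d, v in enumerate(values[slot]):
            out[d] += w * v
    return out, [slot for _, slot in selected], weights
```

With n sub-keys per half, the memory has n² slots, but the lookup only scores 2n sub-keys plus k² candidates, which is what makes very large memories affordable.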

#moe #transformer

Enhancing Reasoning Capabilities of LLMs via Principled Synthetic Logic Corpus

(Terufumi Morishita, Gaku Morio, Atsuki Yamaguchi, Yasuhiro Sogawa)

Large language models (LLMs) are capable of solving a wide range of tasks, yet they have struggled with reasoning. To address this, we propose Additional Logic Training (ALT), which aims to enhance LLMs' reasoning capabilities by program-generated logical reasoning samples. We first establish principles for designing high-quality samples by integrating symbolic logic theory and previous empirical insights. Then, based on these principles, we construct a synthetic corpus named Formal Logic Deduction Diverse (FLD^×2), comprising numerous samples of multi-step deduction with unknown facts, diverse reasoning rules, diverse linguistic expressions, and challenging distractors. Finally, we empirically show that ALT on FLD^×2 substantially enhances the reasoning capabilities of state-of-the-art LLMs, including LLaMA-3.1-70B. Improvements include gains of up to 30 points on logical reasoning benchmarks, up to 10 points on math and coding benchmarks, and 5 points on the benchmark suite BBH.

The idea is to generate logical reasoning data programmatically and train the model on it.
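To give a feel for what program-generated deduction samples might look like, here is a small hypothetical generator. It is not the authors' FLD pipeline: the function name `make_deduction_sample`, the sentence templates, and the output fields are all assumptions for illustration. It only mirrors three ingredients named in the abstract: multi-step deduction, unknown facts (nonsense predicates), and distractors.

```python
import random


def make_deduction_sample(num_steps, num_distractors, seed):
    """Build one toy multi-step deduction sample (hypothetical format)."""
    rng = random.Random(seed)

    def fresh_word():
        # Nonsense words, so the sample cannot be solved from world knowledge.
        return "".join(
            rng.choice("bcdfgklmnprstvz") + rng.choice("aeiou") for _ in range(3)
        )

    # Collect distinct predicate names in a list to keep generation deterministic.
    needed = num_steps + 1 + 2 * num_distractors
    names = []
    while len(names) < needed:
        word = fresh_word()
        if word not in names:
            names.append(word)

    chain = names[: num_steps + 1]   # predicates on the real proof path
    rest = names[num_steps + 1:]     # predicates used only by distractors

    fact = f"The thing is {chain[0]}."
    rules = [
        f"If the thing is {a}, then the thing is {b}."
        for a, b in zip(chain, chain[1:])
    ]
    # Distractor rules never fire: their premises are not derivable.
    distractors = [
        f"If the thing is {rest[2 * i]}, then the thing is {rest[2 * i + 1]}."
        for i in range(num_distractors)
    ]

    context = [fact] + rules + distractors
    rng.shuffle(context)

    proof = [
        f"The thing is {a}. If the thing is {a}, then the thing is {b}. "
        f"Therefore the thing is {b}."
        for a, b in zip(chain, chain[1:])
    ]
    return {
        "context": context,
        "hypothesis": f"The thing is {chain[-1]}.",
        "proof": proof,
        "label": "PROVED",
    }
```

A training example would then pair the shuffled context and hypothesis as the prompt with the proof and label as the target.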

#reasoning #synthetic-data

Procedural Knowledge in Pretraining Drives Reasoning in Large Language Models

(Laura Ruis, Maximilian Mozes, Juhan Bae, Siddhartha Rao Kamalakara, Dwarak Talupuru, Acyr Locatelli, Robert Kirk, Tim Rocktäschel, Edward Grefenstette, Max Bartolo)

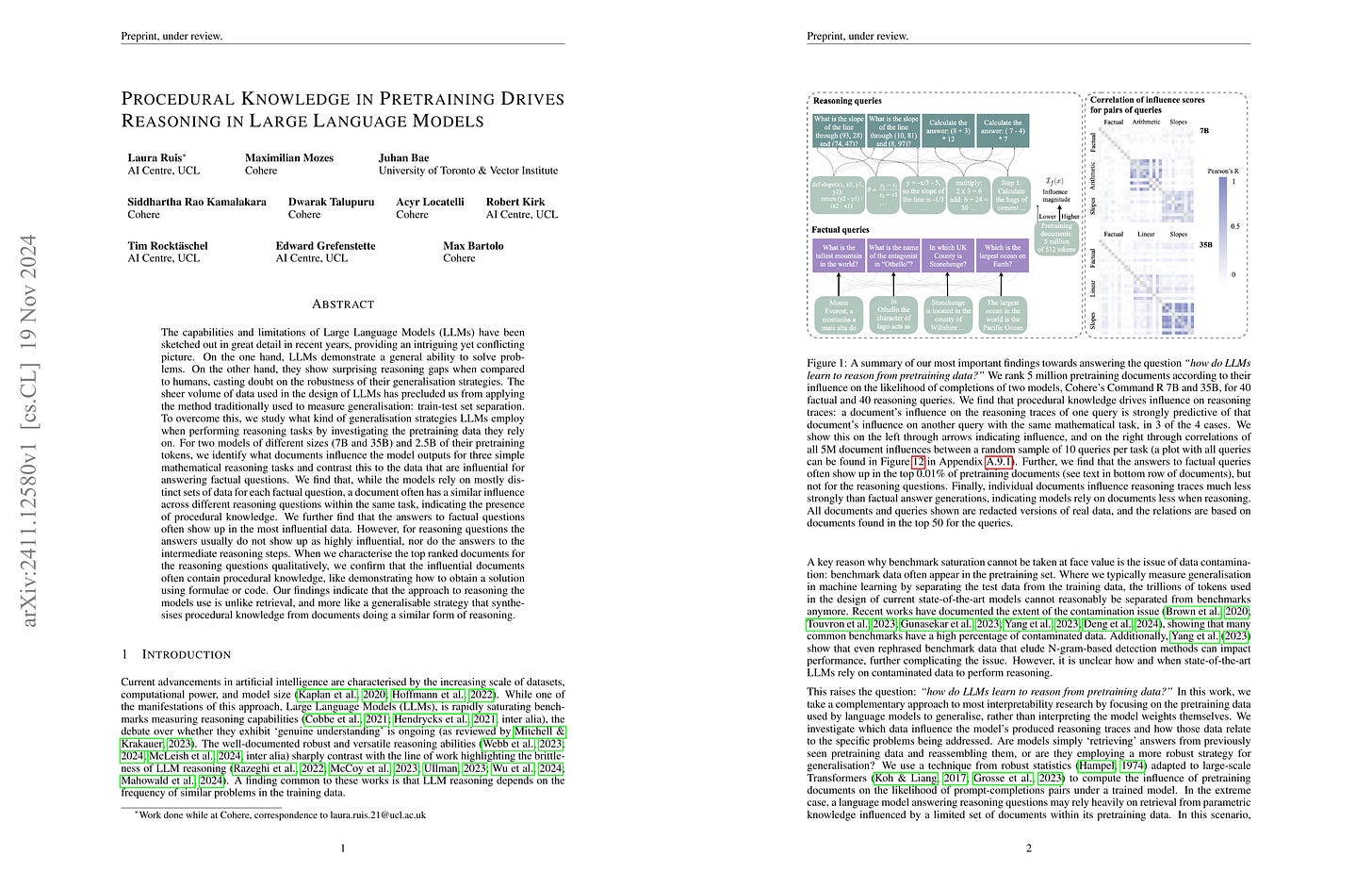

The capabilities and limitations of Large Language Models have been sketched out in great detail in recent years, providing an intriguing yet conflicting picture. On the one hand, LLMs demonstrate a general ability to solve problems. On the other hand, they show surprising reasoning gaps when compared to humans, casting doubt on the robustness of their generalisation strategies. The sheer volume of data used in the design of LLMs has precluded us from applying the method traditionally used to measure generalisation: train-test set separation. To overcome this, we study what kind of generalisation strategies LLMs employ when performing reasoning tasks by investigating the pretraining data they rely on. For two models of different sizes (7B and 35B) and 2.5B of their pretraining tokens, we identify what documents influence the model outputs for three simple mathematical reasoning tasks and contrast this to the data that are influential for answering factual questions. We find that, while the models rely on mostly distinct sets of data for each factual question, a document often has a similar influence across different reasoning questions within the same task, indicating the presence of procedural knowledge. We further find that the answers to factual questions often show up in the most influential data. However, for reasoning questions the answers usually do not show up as highly influential, nor do the answers to the intermediate reasoning steps. When we characterise the top ranked documents for the reasoning questions qualitatively, we confirm that the influential documents often contain procedural knowledge, like demonstrating how to obtain a solution using formulae or code. Our findings indicate that the approach to reasoning the models use is unlike retrieval, and more like a generalisable strategy that synthesises procedural knowledge from documents doing a similar form of reasoning.

This study uses influence functions to analyze which pretraining documents influence an LLM's responses to factual and reasoning questions. The results are quite interesting. For reasoning questions, the model is influenced not by documents that contain the specific answer but by documents that contain the reasoning method, that is, procedural knowledge. As a result, the documents that influence different questions within the same reasoning task tend to be similar (their influence scores are highly correlated). For factual questions, by contrast, the model is influenced by the specific documents that contain the answer. The study also reports that the important documents for reasoning tasks came from math, StackExchange, arXiv, and code. As in Anthropic's influence function analysis (https://arxiv.org/abs/2308.03296), this is another case suggesting that LLMs can draw on information across domains.
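To make "influence" concrete, the sketch below computes the classical influence function on a toy one-parameter least-squares model: the influence of a training example on a query is the negative product of the query-loss gradient, the inverse Hessian, and the training-loss gradient. This is only an illustration under strong assumptions. At LLM scale the Hessian term has to be approximated, and the helper names (`fit_theta`, `grad`, `influences`) are made up for this example.

```python
def fit_theta(xs, ys, weights):
    """Weighted least squares for the one-parameter model y ~ theta * x."""
    num = sum(w * x * y for w, x, y in zip(weights, xs, ys))
    den = sum(w * x * x for w, x in zip(weights, xs))
    return num / den


def grad(theta, x, y):
    """Gradient of the loss 0.5 * (theta * x - y) ** 2 with respect to theta."""
    return (theta * x - y) * x


def influences(xs, ys, query):
    """Influence of each training example on the query loss.

    A positive score means that upweighting the example would increase the
    query loss; a negative score means it would decrease it.
    """
    n = len(xs)
    theta = fit_theta(xs, ys, [1.0 / n] * n)

    # For this model the Hessian of the mean training loss is mean(x^2).
    hessian = sum(x * x for x in xs) / n

    qx, qy = query
    g_query = grad(theta, qx, qy)
    return [-g_query * grad(theta, x, y) / hessian for x, y in zip(xs, ys)]
```

Ranking training examples by these scores for each query, and then comparing the rankings across queries, is the kind of analysis the summary above describes.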

#mechanistic-interpretation #reasoning

Unlocking State-Tracking in Linear RNNs Through Negative Eigenvalues

(Riccardo Grazzi, Julien Siems, Jörg K.H. Franke, Arber Zela, Frank Hutter, Massimiliano Pontil)

Linear Recurrent Neural Networks (LRNNs) such as Mamba, RWKV, GLA, mLSTM, and DeltaNet have emerged as efficient alternatives to Transformers in large language modeling, offering linear scaling with sequence length and improved training efficiency. However, LRNNs struggle to perform state-tracking which may impair performance in tasks such as code evaluation or tracking a chess game. Even parity, the simplest state-tracking task, which non-linear RNNs like LSTM handle effectively, cannot be solved by current LRNNs. Recently, Sarrof et al. (2024) demonstrated that the failure of LRNNs like Mamba to solve parity stems from restricting the value range of their diagonal state-transition matrices to [0, 1] and that incorporating negative values can resolve this issue. We extend this result to non-diagonal LRNNs, which have recently shown promise in models such as DeltaNet. We prove that finite precision LRNNs with state-transition matrices having only positive eigenvalues cannot solve parity, while complex eigenvalues are needed to count modulo 3. Notably, we also prove that LRNNs can learn any regular language when their state-transition matrices are products of identity minus vector outer product matrices, each with eigenvalues in the range [-1, 1]. Our empirical results confirm that extending the eigenvalue range of models like Mamba and DeltaNet to include negative values not only enables them to solve parity but consistently improves their performance on state-tracking tasks. Furthermore, pre-training LRNNs with an extended eigenvalue range for language modeling achieves comparable performance and stability while showing promise on code and math data. Our work enhances the expressivity of modern LRNNs, broadening their applicability without changing the cost of training or inference.

Earlier studies argued that linear RNNs gave up expressivity in exchange for parallelizability (https://arxiv.org/abs/2404.08819, https://arxiv.org/abs/2405.17394). This paper attributes the limitation to the eigenvalues of the state-transition matrices being restricted to positive values. Changing the formulation so that the eigenvalues can also be negative improves the expressivity of linear RNNs.
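The role of negative eigenvalues can be seen in a scalar toy recurrence h_t = a(x_t) · h_{t-1}. The sketch below is an assumption-laden illustration, not any model from the paper: the function names and the specific transition values are invented for this example, and it does not reproduce the paper's finite-precision impossibility proof.

```python
def run_scalar_lrnn(bits, transition):
    """Run h_t = transition(x_t) * h_{t-1} from h_0 = 1 and return the final state."""
    h = 1.0
    for x in bits:
        h = transition(x) * h
    return h


def negative_capable(x):
    """Transition in [-1, 1]: flip the sign of the state on a 1, keep it on a 0."""
    return -1.0 if x == 1 else 1.0


def positive_only(x):
    """Transition in [0, 1]: the state can shrink but never change sign."""
    return 0.5 if x == 1 else 1.0


def parity_from_state(h):
    """Read parity off the sign of the state: positive means an even number of 1s."""
    return 0 if h > 0 else 1
```

With `negative_capable`, the final state is (-1) raised to the number of 1s, so its sign is exactly the parity. With `positive_only`, the state stays positive and only decays, so the sign carries no parity information. For the non-diagonal case mentioned in the abstract, a matrix of the form I − β k kᵀ with a unit vector k has eigenvalue 1 − β along k, so letting β range over [0, 2] is what extends the eigenvalue range to [-1, 1].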

#state-space-model