November 15, 2024

Adaptive Decoding via Latent Preference Optimization

(Shehzaad Dhuliawala, Ilia Kulikov, Ping Yu, Asli Celikyilmaz, Jason Weston, Sainbayar Sukhbaatar, Jack Lanchantin)

During language model decoding, it is known that using higher temperature sampling gives more creative responses, while lower temperatures are more factually accurate. However, such models are commonly applied to general instruction following, which involves both creative and fact seeking tasks, using a single fixed temperature across all examples and tokens. In this work, we introduce Adaptive Decoding, a layer added to the model to select the sampling temperature dynamically at inference time, at either the token or example level, in order to optimize performance. To learn its parameters we introduce Latent Preference Optimization (LPO), a general approach to train discrete latent variables such as choices of temperature. Our method outperforms all fixed decoding temperatures across a range of tasks that require different temperatures, including UltraFeedback, Creative Story Writing, and GSM8K.

Training a model to choose an appropriate sampling temperature at either the sample level or the token level. Token-level temperature seems like a particularly interesting direction: it opens up possibilities such as raising the temperature only for the parts of a response that need to be creative.
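To make the token-level idea concrete, here is a minimal NumPy sketch of one decoding step in which an extra layer picks a temperature before the next token is sampled. The temperature grid `TEMPERATURES`, the projection `temp_head`, and the function name `adaptive_sample_step` are hypothetical choices for illustration; this only mirrors the inference-time mechanism the abstract describes and does not reproduce the paper's layer design or its LPO training objective.

```python
import numpy as np

# Hypothetical grid of temperatures the extra layer can choose from.
TEMPERATURES = np.array([0.3, 0.7, 1.0, 1.5])

def softmax(x):
    e = np.exp(x - np.max(x))  # subtract max for numerical stability
    return e / e.sum()

def adaptive_sample_step(hidden, lm_head, temp_head, rng):
    """Sample one token with a temperature chosen from the current hidden state.

    hidden:    (d,)   final hidden state at the current position
    lm_head:   (d, V) projection to vocabulary logits
    temp_head: (d, K) hypothetical projection to one score per temperature option
    """
    # 1) The extra layer makes a discrete (latent) choice of temperature.
    temp_probs = softmax(hidden @ temp_head)
    k = rng.choice(len(TEMPERATURES), p=temp_probs)
    tau = TEMPERATURES[k]
    # 2) The next token is sampled from the LM distribution at that temperature.
    token_probs = softmax((hidden @ lm_head) / tau)
    token = rng.choice(len(token_probs), p=token_probs)
    # log p(k) is the kind of quantity a preference objective over temperature
    # choices (such as LPO) would push up or down; that objective is not shown.
    return int(token), int(k), float(np.log(temp_probs[k]))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, V = 16, 50
    hidden = rng.normal(size=d)
    token, k, logp = adaptive_sample_step(
        hidden, rng.normal(size=(d, V)), rng.normal(size=(d, len(TEMPERATURES))), rng)
    print(f"token={token}, temperature={TEMPERATURES[k]}, log p(temp)={logp:.3f}")
```

Calling this once per generated token corresponds to token-level selection; keeping the first chosen temperature for the rest of the response would roughly approximate the example-level variant.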

#alignment #rlhf

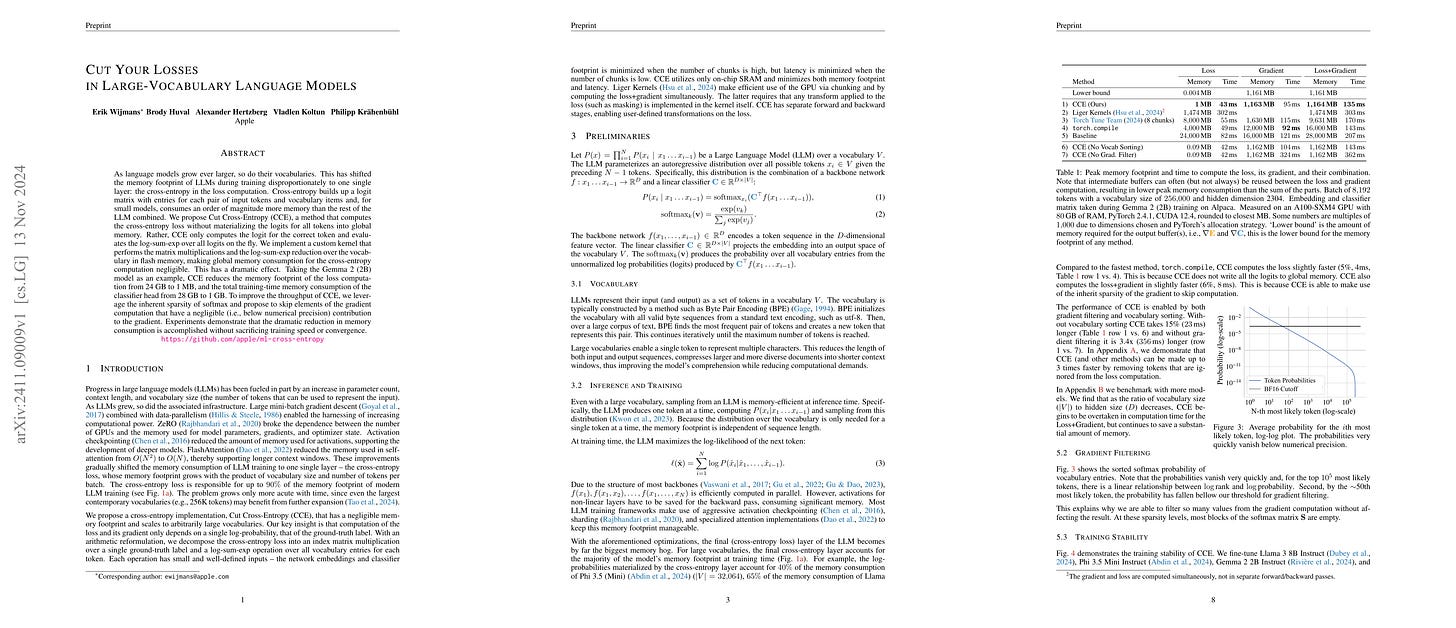

Cut Your Losses in Large-Vocabulary Language Models

(Erik Wijmans, Brody Huval, Alexander Hertzberg, Vladlen Koltun, Philipp Krähenbühl)

As language models grow ever larger, so do their vocabularies. This has shifted the memory footprint of LLMs during training disproportionately to one single layer: the cross-entropy in the loss computation. Cross-entropy builds up a logit matrix with entries for each pair of input tokens and vocabulary items and, for small models, consumes an order of magnitude more memory than the rest of the LLM combined. We propose Cut Cross-Entropy (CCE), a method that computes the cross-entropy loss without materializing the logits for all tokens into global memory. Rather, CCE only computes the logit for the correct token and evaluates the log-sum-exp over all logits on the fly. We implement a custom kernel that performs the matrix multiplications and the log-sum-exp reduction over the vocabulary in flash memory, making global memory consumption for the cross-entropy computation negligible. This has a dramatic effect. Taking the Gemma 2 (2B) model as an example, CCE reduces the memory footprint of the loss computation from 24 GB to 1 MB, and the total training-time memory consumption of the classifier head from 28 GB to 1 GB. To improve the throughput of CCE, we leverage the inherent sparsity of softmax and propose to skip elements of the gradient computation that have a negligible (i.e., below numerical precision) contribution to the gradient. Experiments demonstrate that the dramatic reduction in memory consumption is accomplished without sacrificing training speed or convergence.

Yet another memory-efficient implementation of cross-entropy.
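The identity behind CCE is that per-token cross-entropy equals the log-sum-exp over all vocabulary logits minus the correct-token logit, and the log-sum-exp can be accumulated chunk by chunk. The NumPy sketch below illustrates only that math, assuming a streaming loop over vocabulary chunks is a fair CPU stand-in for the paper's fused kernel; `chunked_cross_entropy` and its `chunk` parameter are hypothetical names.

```python
import numpy as np

def chunked_cross_entropy(h, W, targets, chunk=1024):
    """Mean cross-entropy computed without building the full (N, V) logit matrix.

    h: (N, d) hidden states; W: (d, V) classifier weights; targets: (N,) token ids.
    At most an (N, chunk) block of logits exists at any moment.
    """
    N, V = h.shape[0], W.shape[1]
    # Logit of the correct token only: one dot product per row.
    correct = np.sum(h * W[:, targets].T, axis=1)
    # Streaming log-sum-exp over vocabulary chunks.
    run_max = np.full(N, -np.inf)
    run_sum = np.zeros(N)
    for start in range(0, V, chunk):
        block = h @ W[:, start:start + chunk]  # (N, <=chunk) logits for this slice
        new_max = np.maximum(run_max, block.max(axis=1))
        # Rescale the previous sum to the new max, then add this block's terms.
        run_sum = run_sum * np.exp(run_max - new_max) + np.exp(block - new_max[:, None]).sum(axis=1)
        run_max = new_max
    lse = run_max + np.log(run_sum)
    # Per-token loss is log-sum-exp(all logits) minus the correct-token logit.
    return float(np.mean(lse - correct))
```

A full-materialization reference (`logits = h @ W`) gives the same value up to floating-point rounding; what changes is peak memory, since only one `(N, chunk)` block is alive at a time. The paper's kernel additionally keeps these blocks in on-chip memory and skips negligible gradient entries, neither of which this sketch attempts.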

#efficient-training

On the Surprising Effectiveness of Attention Transfer for Vision Transformers

(Alexander C. Li, Yuandong Tian, Beidi Chen, Deepak Pathak, Xinlei Chen)

Conventional wisdom suggests that pre-training Vision Transformers (ViT) improves downstream performance by learning useful representations. Is this actually true? We investigate this question and find that the features and representations learned during pre-training are not essential. Surprisingly, using only the attention patterns from pre-training (i.e., guiding how information flows between tokens) is sufficient for models to learn high quality features from scratch and achieve comparable downstream performance. We show this by introducing a simple method called attention transfer, where only the attention patterns from a pre-trained teacher ViT are transferred to a student, either by copying or distilling the attention maps. Since attention transfer lets the student learn its own features, ensembling it with a fine-tuned teacher also further improves accuracy on ImageNet. We systematically study various aspects of our findings on the sufficiency of attention maps, including distribution shift settings where they underperform fine-tuning. We hope our exploration provides a better understanding of what pre-training accomplishes and leads to a useful alternative to the standard practice of fine-tuning.

This study shows that in ViT distillation, directly copying or distilling the teacher's attention maps is enough to nearly recover the teacher's performance. Many earlier results have indicated that ViTs take a long time to learn good attention patterns, so in that sense this result seems natural.

I'm curious how this would carry over to language models, or to cases where the teacher and student differ in size. That said, distilling attention maps in language models is a tricky problem in itself.
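The two transfer variants named in the abstract can be sketched for a single attention head as follows. This is a minimal NumPy illustration under stated assumptions: the distillation loss is taken to be a per-query KL divergence between teacher and student attention rows (the paper's exact loss, and which layers and heads it matches, may differ), and all function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # stable softmax along rows
    return e / e.sum(axis=axis, keepdims=True)

def attention_map(x, Wq, Wk):
    """Single-head attention probabilities for tokens x: (T, d) -> (T, T)."""
    q, k = x @ Wq, x @ Wk
    return softmax(q @ k.T / np.sqrt(q.shape[-1]))

def attention_distill_loss(teacher_attn, student_attn, eps=1e-9):
    """Assumed distillation loss: KL(teacher || student), averaged over query rows."""
    t = teacher_attn + eps
    s = student_attn + eps
    return float(np.mean(np.sum(t * (np.log(t) - np.log(s)), axis=-1)))

def copied_attention_output(teacher_attn, x, Wv):
    """'Copy' variant: the student reuses the teacher's map and learns only its own values."""
    return teacher_attn @ (x @ Wv)
```

In the distillation variant the student computes its own `attention_map` and is trained to drive `attention_distill_loss` toward zero; in the copy variant the teacher's map replaces the student's, so only the value projections and the rest of the network learn features.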

#distillation #vit