2024년 11월 12일

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion Models

(NVIDIA: Yuval Atzmon, Maciej Bala, Yogesh Balaji, Tiffany Cai, Yin Cui, Jiaojiao Fan, Yunhao Ge, Siddharth Gururani, Jacob Huffman, Ronald Isaac, Pooya Jannaty, Tero Karras, Grace Lam, J. P. Lewis, Aaron Licata, Yen-Chen Lin, Ming-Yu Liu, Qianli Ma, Arun Mallya, Ashlee Martino-Tarr, Doug Mendez, Seungjun Nah, Chris Pruett, Fitsum Reda, Jiaming Song, Ting-Chun Wang, Fangyin Wei, Xiaohui Zeng, Yu Zeng, Qinsheng Zhang)

We introduce Edify Image, a family of diffusion models capable of generating photorealistic image content with pixel-perfect accuracy. Edify Image utilizes cascaded pixel-space diffusion models trained using a novel Laplacian diffusion process, in which image signals at different frequency bands are attenuated at varying rates. Edify Image supports a wide range of applications, including text-to-image synthesis, 4K upsampling, ControlNets, 360 HDR panorama generation, and finetuning for image customization.

와 라플라시안 피라미드! 라플라시안 피라미드로 분해된 이미지들에 대한 Diffusion Process의 합으로 Diffusion Process를 구성했군요. 실제 구현에서는 웨이블릿 공간에서 작동하도록 했습니다.

Wow, Laplacian pyramid! They've constructed the overall diffusion process as a sum of diffusion processes on images decomposed by a Laplacian pyramid. Also, in the actual implementation, the model operates in wavelet space.

#diffusion

Aioli: A Unified Optimization Framework for Language Model Data Mixing

(Mayee F. Chen, Michael Y. Hu, Nicholas Lourie, Kyunghyun Cho, Christopher Ré)

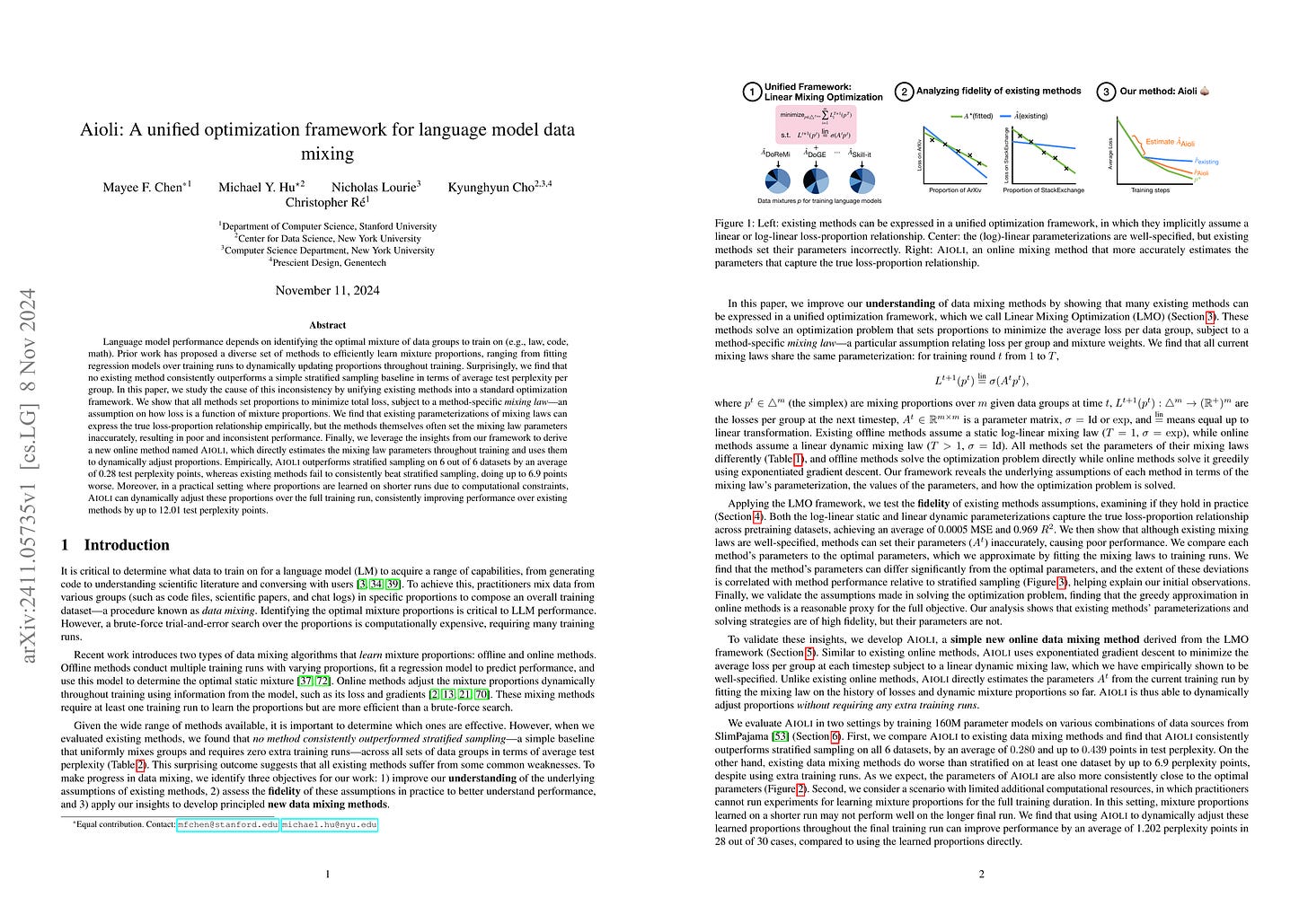

Language model performance depends on identifying the optimal mixture of data groups to train on (e.g., law, code, math). Prior work has proposed a diverse set of methods to efficiently learn mixture proportions, ranging from fitting regression models over training runs to dynamically updating proportions throughout training. Surprisingly, we find that no existing method consistently outperforms a simple stratified sampling baseline in terms of average test perplexity per group. In this paper, we study the cause of this inconsistency by unifying existing methods into a standard optimization framework. We show that all methods set proportions to minimize total loss, subject to a method-specific mixing law -- an assumption on how loss is a function of mixture proportions. We find that existing parameterizations of mixing laws can express the true loss-proportion relationship empirically, but the methods themselves often set the mixing law parameters inaccurately, resulting in poor and inconsistent performance. Finally, we leverage the insights from our framework to derive a new online method named Aioli, which directly estimates the mixing law parameters throughout training and uses them to dynamically adjust proportions. Empirically, Aioli outperforms stratified sampling on 6 out of 6 datasets by an average of 0.28 test perplexity points, whereas existing methods fail to consistently beat stratified sampling, doing up to 6.9 points worse. Moreover, in a practical setting where proportions are learned on shorter runs due to computational constraints, Aioli can dynamically adjust these proportions over the full training run, consistently improving performance over existing methods by up to 12.01 test perplexity points.

온라인으로 학습 분포를 조절하는 방법에 대한 연구. DoReMi 같은 기존 방법들을 통합해서 관찰했을 때 서로 다른 데이터셋이 다른 데이터셋의 Loss에 미치는 영향을 나타내는 행렬 A에 대한 추정이 부정확하다고 하네요. 이 부분을 개선한 알고리즘입니다.

A study on methods for online adjustment of dataset proportions during training. After analyzing previous methods like DoReMi from a unified perspective, it was found that the estimation of matrix A, which represents the influence of one dataset on the loss of other datasets, is inaccurate. The proposed algorithm improves upon this aspect.

#dataset #efficient-training

ADOPT: Modified Adam Can Converge with Any β_2 with the Optimal Rate

(Shohei Taniguchi, Keno Harada, Gouki Minegishi, Yuta Oshima, Seong Cheol Jeong, Go Nagahara, Tomoshi Iiyama, Masahiro Suzuki, Yusuke Iwasawa, Yutaka Matsuo)

Adam is one of the most popular optimization algorithms in deep learning. However, it is known that Adam does not converge in theory unless choosing a hyperparameter, i.e., β_2, in a problem-dependent manner. There have been many attempts to fix the non-convergence (e.g., AMSGrad), but they require an impractical assumption that the gradient noise is uniformly bounded. In this paper, we propose a new adaptive gradient method named ADOPT, which achieves the optimal convergence rate of O(1/sqrt(T)) with any choice of β_2 without depending on the bounded noise assumption. ADOPT addresses the non-convergence issue of Adam by removing the current gradient from the second moment estimate and changing the order of the momentum update and the normalization by the second moment estimate. We also conduct intensive numerical experiments, and verify that our ADOPT achieves superior results compared to Adam and its variants across a wide range of tasks, including image classification, generative modeling, natural language processing, and deep reinforcement learning. The implementation is available at https://github.com/iShohei220/adopt.

Adam의 수렴이 β_2에 영향을 받는 문제에 대한 수정. 방법은 간단하게 파라미터 업데이트 이후 모멘텀을 업데이트하고 Second moment를 사용한 Normalization을 모멘텀 업데이트로 이동시키는 것이네요. LaProp과 비슷하군요. (https://arxiv.org/abs/2002.04839)

테스트해본 사람들 사이에서는 괜찮은 것 같다는 평이 있네요. (https://x.com/wightmanr/status/1854930826279940579)

This paper addresses the issue of Adam's convergence being affected by β_2. The solution is straightforward: updating the momentum after parameter updates and moving the normalization using the second moment into the momentum update. This approach is similar to LaProp (https://arxiv.org/abs/2002.04839).

Among those who have tested it, there are positive reviews suggesting that it works well. (https://x.com/wightmanr/status/1854930826279940579)

#optimizer

The Super Weight in Large Language Models

(Mengxia Yu, De Wang, Qi Shan, Colorado Reed, Alvin Wan)

Recent works have shown a surprising result: a small fraction of Large Language Model (LLM) parameter outliers are disproportionately important to the quality of the model. LLMs contain billions of parameters, so these small fractions, such as 0.01%, translate to hundreds of thousands of parameters. In this work, we present an even more surprising finding: Pruning as few as a single parameter can destroy an LLM's ability to generate text -- increasing perplexity by 3 orders of magnitude and reducing zero-shot accuracy to guessing. We propose a data-free method for identifying such parameters, termed super weights, using a single forward pass through the model. We additionally find that these super weights induce correspondingly rare and large activation outliers, termed super activations. When preserved with high precision, super activations can improve simple round-to-nearest quantization to become competitive with state-of-the-art methods. For weight quantization, we similarly find that by preserving the super weight and clipping other weight outliers, round-to-nearest quantization can scale to much larger block sizes than previously considered. To facilitate further research into super weights, we provide an index of super weight coordinates for common, openly available LLMs.

트랜스포머 LLM에는 극소수지만 성능에 절대적인 영향을 미치는 가중치가 있다는 분석. 생각보다 초기 레이어에 있고 이를 억제하면 Stopword만 생성하게 되는군요.

Super Weight의 효과가 아웃라이어로 모두 설명되는 것은 아니겠지만 아웃라이어와 관련해선 요즘 Adam과 회전을 통한 분석이 재미있네요. (https://arxiv.org/abs/2405.19279, https://arxiv.org/abs/2409.11321, https://arxiv.org/abs/2410.19964, https://arxiv.org/abs/2410.17174)

This analysis reveals that transformer LLMs contain a very small number of weights that have a crucial impact on model performance. These weights are located in earlier layers than I expected, and suppressing them causes the model to generate only stopwords.

While the effect of super weights cannot be entirely explained by outliers, recent studies linking outliers with Adam and rotation are intriguing. (https://arxiv.org/abs/2405.19279, https://arxiv.org/abs/2409.11321, https://arxiv.org/abs/2410.19964, https://arxiv.org/abs/2410.17174)

#quantization #transformer

On Reward Functions for Self-Improving General-Purpose Reasoning

(Anonymous authors)

Prompting a Large Language Model (LLM) to output Chain-of-Thought (CoT) reasoning improves performance on complex problem-solving tasks. Further, several popular approaches exist to “self-improve” the abilities of LLMs to use CoT on tasks where supervised (question, answer) datasets are available. However, an emerging line of work explores whether self-improvement is possible without supervised datasets, instead utilizing the same large, general-purpose text corpora as used during pre-training. These pre-training datasets encompass large parts of human knowledge and dwarf all finetuning datasets in size. Self-improving CoT abilities on such general datasets could enhance reasoning for any general-purpose text generation task, and doing so at pre-training scale may unlock unprecedented reasoning abilities. In this paper, we outline the path towards self-improving CoT reasoning at pre-training scale and address fundamental challenges in this direction. We start by framing this as a reinforcement learning problem: given the first n tokens from a large pre-training corpus, the model generates a CoT and receives a reward based on how well the CoT helps predict the following m tokens. We then investigate a fundamental question: What constitutes a suitable reward function for learning to reason during general language modelling? We outline the desirable qualities of such a reward function and empirically demonstrate how different functions affect what reasoning is learnt and where reasoning is rewarded. Using these insights, we introduce a novel reward function called Reasoning Advantage (RA) that facilitates self-improving CoT reasoning on freeform question-answering (QA) data, where answers are unstructured and difficult to verify. Equipped with a suitable reward function, we explore the optimisation of it on general-purpose text using offline RL. Our analysis indicates that future work should investigate more powerful optimisation algorithms, potentially moving towards more online algorithms that better explore the space of CoT generations.

웹 텍스트와 같이 특별히 정답이 지정되지 않은 텍스트를 사용해 CoT를 생성하고 추론하는 능력을 학습시킬 수 있는가? 그를 위해서는 어떤 Reward를 사용해야 하는가? 여기에서 제안하는 것은 CoT가 주어졌을 때의 Likelihood를 Reward로 사용하되 CoT가 없는 경우의 Likelihood를 베이스라인으로 사용하고 Normalization/Clipping을 적용하는 것이군요.

추론 능력은 지금 LLM 업계에서 가장 중요한 화두인 것 같네요. 다만 이를 어떻게 모델에 주입할 수 있는지에 대해서 알려진 것이 없죠. OpenAI도 여러 시도들 중에서 성공한 시도가 o1으로 이어졌다고 했으니 많이 탐색하는 수밖에 없을 것 같네요.

Can we train the capability of generating CoT and performing reasoning using texts without specific answers, such as plain web texts? What kind of reward should be used for this purpose? This study suggests using the likelihood of suffixes given CoT as a reward, but with the likelihood without CoT as a baseline, and applying normalization and clipping.

Reasoning capability seems to be the hottest topic in the LLM field right now. However, it's not well known how we can inject this capability into the model. As OpenAI also mentioned that o1 is the result of one successful attempt among various tries, it seems we just need to explore this problem extensively.

#reasoning #synthetic-data