2024년 10월 3일

Not All LLM Reasoners Are Created Equal

(Arian Hosseini, Alessandro Sordoni, Daniel Toyama, Aaron Courville, Rishabh Agarwal)

We study the depth of grade-school math (GSM) problem-solving capabilities of LLMs. To this end, we evaluate their performance on pairs of existing math word problems together so that the answer to the second problem depends on correctly answering the first problem. Our findings reveal a significant reasoning gap in most LLMs, that is performance difference between solving the compositional pairs and solving each question independently. This gap is more pronounced in smaller, more cost-efficient, and math-specialized models. Moreover, instruction-tuning recipes and code generation have varying effects across LLM sizes, while finetuning on GSM can lead to task overfitting. Our analysis indicates that large reasoning gaps are not because of test-set leakage, but due to distraction from additional context and poor second-hop reasoning. Overall, LLMs exhibit systematic differences in their reasoning abilities, despite what their performance on standard benchmarks indicates.

GSM8K 문제들을 이어붙여서 복합 문제를 만든 다음 평가. 문제를 연결해서 추론 난이도를 높이는 연구는 최근에도 나왔었죠. (https://arxiv.org/abs/2410.00151)

흥미로운 지점은 모델 크기와 Instruction Tuning의 전후 성능 차이를 분석한 부분이네요. 작은 모델에서는 Instruction Tuning으로 성능을 크게 올릴 수 있지만 그것이 실용적인 성능 차이로는 이어지지 않을 수 있다는 것이죠.

The study evaluates LLMs using composite problems created by concatenating GSM8K problems. This approach of combining problems to increase reasoning difficulty has been appeared in recent research as well. (https://arxiv.org/abs/2410.00151)

An interesting aspect is their analysis of performance differences before and after instruction tuning, with respect to model sizes. For smaller models, instruction tuning can significantly improve benchmark performance, but this may not necessarily translate to practical, real-world performance improvements.

*#benchmark #llm #reasoning

FlashMask: Efficient and Rich Mask Extension of FlashAttention

(Guoxia Wang, Jinle Zeng, Xiyuan Xiao, Siming Wu, Jiabin Yang, Lujing Zheng, Zeyu Chen, Jiang Bian, Dianhai Yu, Haifeng Wang)

The computational and memory demands of vanilla attention scale quadratically with the sequence length N, posing significant challenges for processing long sequences in Transformer models. FlashAttention alleviates these challenges by eliminating the O(N^2) memory dependency and reducing attention latency through IO-aware memory optimizations. However, its native support for certain attention mask types is limited, and it does not inherently accommodate more complex masking requirements. Previous approaches resort to using dense masks with O(N^2) memory complexity, leading to inefficiencies. In this paper, we propose FlashMask, an extension of FlashAttention that introduces a column-wise sparse representation of attention masks. This approach efficiently represents a wide range of mask types and facilitates the development of optimized kernel implementations. By adopting this novel representation, FlashMask achieves linear memory complexity O(N), suitable for modeling long-context sequences. Moreover, this representation enables kernel optimizations that eliminate unnecessary computations by leveraging sparsity in the attention mask, without sacrificing computational accuracy, resulting in higher computational efficiency. We evaluate FlashMask's performance in fine-tuning and alignment training of LLMs such as SFT, LoRA, DPO, and RM. FlashMask achieves significant throughput improvements, with end-to-end speedups ranging from 1.65x to 3.22x compared to existing FlashAttention dense method. Additionally, our kernel-level comparisons demonstrate that FlashMask surpasses the latest counterpart, FlexAttention, by 12.1% to 60.7% in terms of kernel TFLOPs/s, achieving 37.8% to 62.3% of the theoretical maximum FLOPs/s on the A100 GPU. The code is open-sourced on PaddlePaddle and integrated into PaddleNLP, supporting models with over 100 billion parameters for contexts up to 128K tokens.

보다 유연한 Attention Mask를 지원하는 Attention 커널. FlexAttention과 비슷하군요. FlexAttention과 같은 도구 덕분에 Attention Mask를 여러모로 실험해볼 수 있는 여지가 생겼죠. Prefix LM이나 이미지 같은 모달리티에 대한 Bidirectional Attention이 가장 대표적이겠네요.

This is an attention kernel that supports more flexible attention masks. It's similar to FlexAttention. Thanks to tools like FlexAttention, we now have the opportunity to experiment with various attention masks. The most notable examples would be Prefix LM or bidirectional attention for modalities such as images.

#efficiency

nGPT: Normalized Transformer with Representation Learning on the Hypersphere

(Ilya Loshchilov, Cheng-Ping Hsieh, Simeng Sun, Boris Ginsburg)

We propose a novel neural network architecture, the normalized Transformer (nGPT) with representation learning on the hypersphere. In nGPT, all vectors forming the embeddings, MLP, attention matrices and hidden states are unit norm normalized. The input stream of tokens travels on the surface of a hypersphere, with each layer contributing a displacement towards the target output predictions. These displacements are defined by the MLP and attention blocks, whose vector components also reside on the same hypersphere. Experiments show that nGPT learns much faster, reducing the number of training steps required to achieve the same accuracy by a factor of 4 to 20, depending on the sequence length.

Transformer Residual Block을 Norm(x + a(Norm(x) - x))과 같은 형태로 설정. 과거와 최근의 Post Norm의 결합과 비슷한 형태군요. 거기에 Weight들을 매 학습 스텝마다 Normalize 해주는 것과 Scaling Factor들이 추가됐습니다. 이는 EDM2가 생각나게 하네요. (https://arxiv.org/abs/2312.02696)

과거의 Post Norm을 생각하면 깊이에 따른 불안정성이 있는데 이 세팅에서는 어떨지 모르겠네요. Layer Norm의 위치와 관련된 이전 연구들을 참조하는 것이 좋을지도 모르겠습니다.

The Transformer residual block is set up in the form of Norm(x + a(Norm(x) - x)). This structure is similar to a combination of past and recent Post-Norm approaches. Additionally, weights are normalized at every training step, and scaling factors are introduced. This reminds me of EDM2 (https://arxiv.org/abs/2312.02696).

In the past, Post-Norm configurations showed instability as network depth increased. I'm curious how this setting might behave differently. It might be worthwhile to reference previous research on the positioning of layer normalization.

#transformer #normalization

ImageFolder: Autoregressive Image Generation with Folded Tokens

(Xiang Li, Hao Chen, Kai Qiu, Jason Kuen, Jiuxiang Gu, Bhiksha Raj, Zhe Lin)

Image tokenizers are crucial for visual generative models, e.g., diffusion models (DMs) and autoregressive (AR) models, as they construct the latent representation for modeling. Increasing token length is a common approach to improve the image reconstruction quality. However, tokenizers with longer token lengths are not guaranteed to achieve better generation quality. There exists a trade-off between reconstruction and generation quality regarding token length. In this paper, we investigate the impact of token length on both image reconstruction and generation and provide a flexible solution to the tradeoff. We propose ImageFolder, a semantic tokenizer that provides spatially aligned image tokens that can be folded during autoregressive modeling to improve both generation efficiency and quality. To enhance the representative capability without increasing token length, we leverage dual-branch product quantization to capture different contexts of images. Specifically, semantic regularization is introduced in one branch to encourage compacted semantic information while another branch is designed to capture the remaining pixel-level details. Extensive experiments demonstrate the superior quality of image generation and shorter token length with ImageFolder tokenizer.

Autoregressive Image Generation을 가속하려면 토큰 시퀀스의 길이가 짧거나 혹은 여러 토큰을 한 번에 예측하는 것이 좋을 텐데, 토큰을 한 번에 예측하려면 토큰이 독립적인 쪽이 낫다는 아이디어군요. 그래서 디테일 토큰과 시맨틱 토큰을 분리해서 이 토큰의 결합을 통해 Reconstruction과 Generation을 한다는 아이디어입니다.

To accelerate autoregressive image generation, it's beneficial to have either shorter token sequences or the ability to predict multiple tokens at once. For predicting multiple tokens simultaneously, it's advantageous if the tokens are more independent. This paper presents an idea to separate tokens into detail tokens and semantic tokens, then uses the combination of these tokens for both reconstruction and generation.

#vq #autoregressive-model #image-generation

When a language model is optimized for reasoning, does it still show embers of autoregression? An analysis of OpenAI o1

(R. Thomas McCoy, Shunyu Yao, Dan Friedman, Mathew D. Hardy, Thomas L. Griffiths)

In "Embers of Autoregression" (McCoy et al., 2023), we showed that several large language models (LLMs) have some important limitations that are attributable to their origins in next-word prediction. Here we investigate whether these issues persist with o1, a new system from OpenAI that differs from previous LLMs in that it is optimized for reasoning. We find that o1 substantially outperforms previous LLMs in many cases, with particularly large improvements on rare variants of common tasks (e.g., forming acronyms from the second letter of each word in a list, rather than the first letter). Despite these quantitative improvements, however, o1 still displays the same qualitative trends that we observed in previous systems. Specifically, o1 - like previous LLMs - is sensitive to the probability of examples and tasks, performing better and requiring fewer "thinking tokens" in high-probability settings than in low-probability ones. These results show that optimizing a language model for reasoning can mitigate but might not fully overcome the language model's probability sensitivity.

Embers of Autoregression을 (https://arxiv.org/abs/2309.13638) o1에 대해 테스트해봤군요. 여전히 Autoregressive 학습에 의한 과제 빈도에 따른 성능 변화가 나타나지만 그 정도가 훨씬 나아지긴 했습니다.

한 가지 재미있는 것은 빈도가 낮은 과제일수록 생성하는 Reasoning 토큰의 수도 늘어난다는 것이네요.

o1은 굉장히 중요한 발전이고 과거의 LLM과는 질적으로 다른 모델이 될 수 있다는 생각이 강해집니다. 너무 당연한 이야기일까요?

They've tested o1 using the Embers of Autoregression framework (https://arxiv.org/abs/2309.13638). While performance variations based on task frequency due to autoregressive learning are still present, the extent has significantly improved.

An interesting observation is that as task frequency decreases, the number of generated reasoning tokens increases.

o1 seems to be a very significant advancement, and I'm increasingly convinced that it could be a qualitatively different model from previous LLMs. Am I stating the obvious?

#autoregressive-model

U-shaped and Inverted-U Scaling behind Emergent Abilities of Large Language Models

(Tung-Yu Wu, Pei-Yu Lo)

Large language models (LLMs) have been shown to exhibit emergent abilities in some downstream tasks, where performance seems to stagnate at first and then improve sharply and unpredictably with scale beyond a threshold. By dividing questions in the datasets according to difficulty level by average performance, we observe U-shaped scaling for hard questions, and inverted-U scaling followed by steady improvement for easy questions. Moreover, the emergence threshold roughly coincides with the point at which performance on easy questions reverts from inverse scaling to standard scaling. Capitalizing on the observable though opposing scaling trend on easy and hard questions, we propose a simple yet effective pipeline, called Slice-and-Sandwich, to predict both the emergence threshold and model performance beyond the threshold.

LLM의 창발 현상에 대한 분석. 모델의 규모에 대해 문제의 난이도로 나눠 분석했군요. 흥미로운 점은 쉬운 문제들에 대해서는 Inverse-U 형태의 Scaling이 나타나고 어려운 문제들에 대해서는 U 형태의 Scaling이 나타난다고 합니다. 그리고 쉬운 문제들에 대해서 커브가 꺾이는 지점에서 창발이 일어난다고 하네요.

Inverse U Scaling은 Double Descent와 비슷하고 U Scaling은 Inverse Scaling에서 모델 규모의 증가에 따라 Scaling 패턴으로 변화하는 형태죠. 상당히 흥미로운 설명이네요.

Analysis of emergent phenomena in LLMs. They analyzed model performance across different scales, categorized by problem difficulty. Interestingly, they found that for easy problems, there's an inverted U-shaped scaling pattern, while for difficult problems, there's a U-shaped scaling pattern. They also noted that emergence occurs at the point where the curve for easy problems begins to change direction.

Inverted U-shaped scaling is similar to the double descent phenomenon, while U-shaped scaling represents a transition from inverse scaling to normal scaling as model size increases. This provides a very interesting explanation for emergent phenomena.

#scaling-law

Fira: Can We Achieve Full-rank Training of LLMs Under Low-rank Constraint?

(Xi Chen, Kaituo Feng, Changsheng Li, Xunhao Lai, Xiangyu Yue, Ye Yuan, Guoren Wang)

Low-rank training has emerged as a promising approach for reducing memory usage in training Large Language Models (LLMs). Previous methods either rely on decomposing weight matrices (e.g., LoRA), or seek to decompose gradient matrices (e.g., GaLore) to ensure reduced memory consumption. However, both of them constrain the training in a low-rank subspace, thus inevitably leading to sub-optimal performance. This raises a question: whether it is possible to consistently preserve the low-rank constraint for memory efficiency, while achieving full-rank training (i.e., training with full-rank gradients of full-rank weights) to avoid inferior outcomes? In this paper, we propose a new plug-and-play training framework for LLMs called Fira, as the first attempt to achieve this goal. First, we observe an interesting phenomenon during LLM training: the scaling impact of adaptive optimizers (e.g., Adam) on the gradient norm remains similar from low-rank to full-rank training. Based on this observation, we propose a norm-based scaling method, which utilizes the scaling impact of low-rank optimizers as substitutes for that of original full-rank optimizers to enable full-rank training. In this way, we can preserve the low-rank constraint in the optimizer while achieving full-rank training for better performance. Moreover, we find that there are sudden gradient rises during the optimization process, potentially causing loss spikes. To address this, we further put forward a norm-growth limiter to smooth the gradient via regulating the relative increase of gradient norms. Extensive experiments on the pre-training and fine-tuning of LLMs show that Fira outperforms both LoRA and GaLore, achieving performance that is comparable to or even better than full-rank training.

Low Rank Training. GaLore에 (https://arxiv.org/abs/2403.03507) 더해 Low Rank 업데이트에 대한 Correction Term을 추가하고 싶은데 이 Correction Term에 대한 Adam Scaling을 구할 수가 없다, 그런데 Low Rank 학습과 Full Rank 학습에서 이 Scaling Factor가 비슷하기 때문에 이를 활용한다, 이런 아이디어입니다.

Low Rank Training. This paper builds upon GaLore (https://arxiv.org/abs/2403.03507) by attempting to add a correction term for low-rank updates. However, it's not possible to directly calculate the Adam scaling for this correction term. The key insight is that this scaling factor is similar between low-rank and full-rank training, which allows them to leverage this observation.

#efficient-training

Extending Context Window of Large Language Models from a Distributional Perspective

(Yingsheng Wu. Yuxuan Gu, Xiaocheng Feng, Weihong Zhong, Dongliang Xu, Qing Yang, Hongtao Liu, Bing Qin)

Scaling the rotary position embedding (RoPE) has become a common method for extending the context window of RoPE-based large language models (LLMs). However, existing scaling methods often rely on empirical approaches and lack a profound understanding of the internal distribution within RoPE, resulting in suboptimal performance in extending the context window length. In this paper, we propose to optimize the context window extending task from the view of rotary angle distribution. Specifically, we first estimate the distribution of the rotary angles within the model and analyze the extent to which length extension perturbs this distribution. Then, we present a novel extension strategy that minimizes the disturbance between rotary angle distributions to maintain consistency with the pre-training phase, enhancing the model's capability to generalize to longer sequences. Experimental results compared to the strong baseline methods demonstrate that our approach reduces by up to 72% of the distributional disturbance when extending LLaMA2's context window to 8k, and reduces by up to 32% when extending to 16k. On the LongBench-E benchmark, our method achieves an average improvement of up to 4.33% over existing state-of-the-art methods. Furthermore, Our method maintains the model's performance on the Hugging Face Open LLM benchmark after context window extension, with only an average performance fluctuation ranging from -0.12 to +0.22.

Context 확장 과정에서 RoPE의 각도 분포의 변화를 최소화하도록 Interpolation/Extrapolation 한다는 아이디어네요.

The idea is to interpolate or extrapolate in a way that minimizes changes in the angle distribution of RoPE during the context extension process.

#long-context #positional-encoding

VinePPO: Unlocking RL Potential For LLM Reasoning Through Refined Credit Assignment

(Amirhossein Kazemnejad, Milad Aghajohari, Eva Portelance, Alessandro Sordoni, Siva Reddy, Aaron Courville, Nicolas Le Roux)

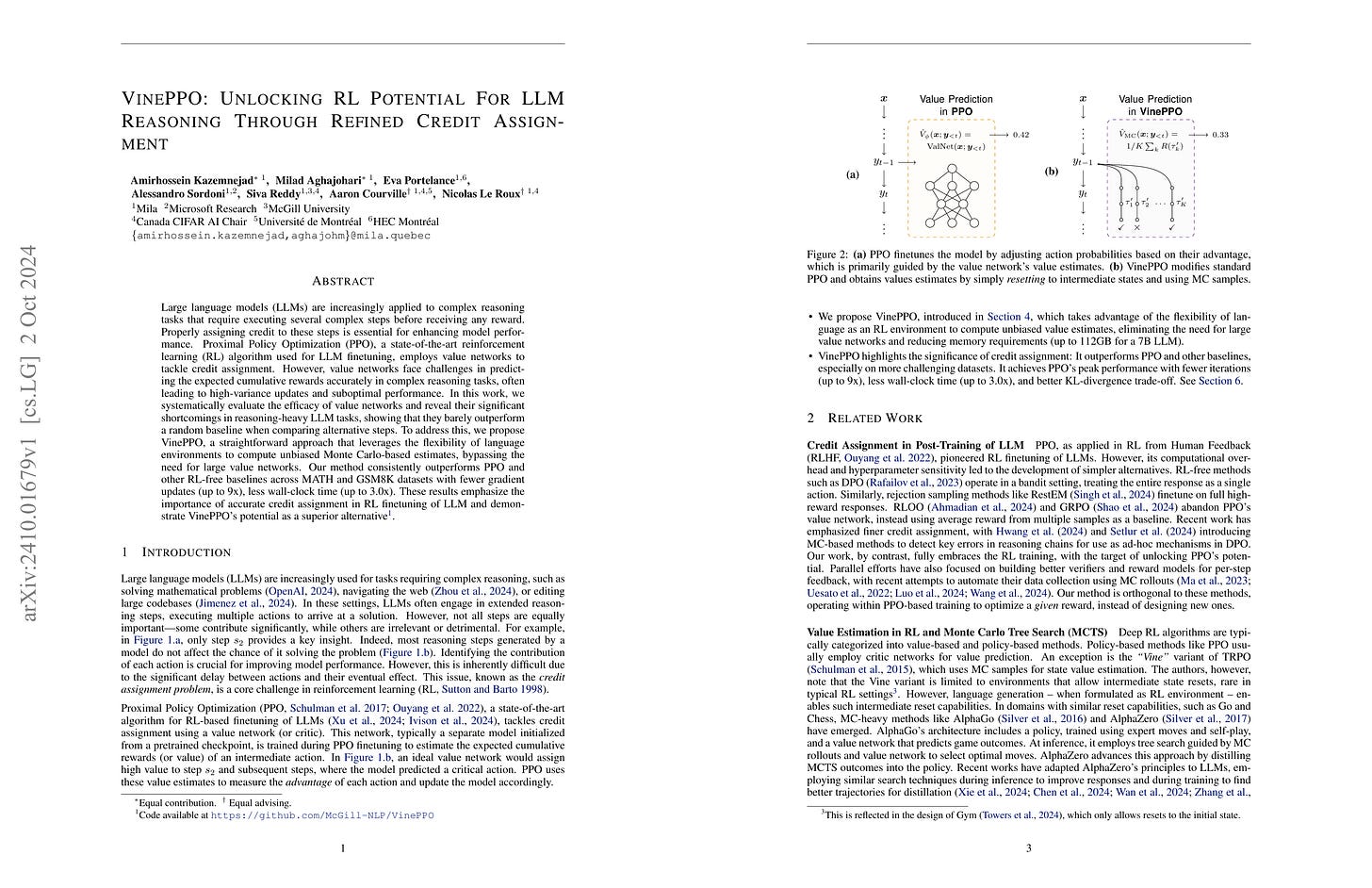

Large language models (LLMs) are increasingly applied to complex reasoning tasks that require executing several complex steps before receiving any reward. Properly assigning credit to these steps is essential for enhancing model performance. Proximal Policy Optimization (PPO), a state-of-the-art reinforcement learning (RL) algorithm used for LLM finetuning, employs value networks to tackle credit assignment. However, value networks face challenges in predicting the expected cumulative rewards accurately in complex reasoning tasks, often leading to high-variance updates and suboptimal performance. In this work, we systematically evaluate the efficacy of value networks and reveal their significant shortcomings in reasoning-heavy LLM tasks, showing that they barely outperform a random baseline when comparing alternative steps. To address this, we propose VinePPO, a straightforward approach that leverages the flexibility of language environments to compute unbiased Monte Carlo-based estimates, bypassing the need for large value networks. Our method consistently outperforms PPO and other RL-free baselines across MATH and GSM8K datasets with fewer gradient updates (up to 9x), less wall-clock time (up to 3.0x). These results emphasize the importance of accurate credit assignment in RL finetuning of LLM and demonstrate VinePPO's potential as a superior alternative.

추론 과제에 대해 RL을 진행할 때 추론 과정에서 중요한 지점을 Value Function이 찾아내야 하는데 보통 그러지 못한다는 지적. 이를 샘플링을 통한 Monte Carlo 추정으로 대체한다는 아이디어입니다. Value Function을 샘플링으로 대체하는 것은 GRPO와도 비슷하네요. (https://arxiv.org/abs/2402.03300)

When applying Reinforcement Learning (RL) to reasoning tasks, it's crucial for the value function to identify important steps in the reasoning process, but it often fails to do so. This paper proposes replacing the value function with Monte Carlo estimates through sampling. The idea of substituting the value function with sampling is similar to GRPO (https://arxiv.org/abs/2402.03300).

#reasoning #rl

HelpSteer2-Preference: Complementing Ratings with Preferences

(Zhilin Wang, Alexander Bukharin, Olivier Delalleau, Daniel Egert, Gerald Shen, Jiaqi Zeng, Oleksii Kuchaiev, Yi Dong)

Reward models are critical for aligning models to follow instructions, and are typically trained following one of two popular paradigms: Bradley-Terry style or Regression style. However, there is a lack of evidence that either approach is better than the other, when adequately matched for data. This is primarily because these approaches require data collected in different (but incompatible) formats, meaning that adequately matched data is not available in existing public datasets. To tackle this problem, we release preference annotations (designed for Bradley-Terry training) to complement existing ratings (designed for Regression style training) in the HelpSteer2 dataset. To improve data interpretability, preference annotations are accompanied with human-written justifications. Using this data, we conduct the first head-to-head comparison of Bradley-Terry and Regression models when adequately matched for data. Based on insights derived from such a comparison, we propose a novel approach to combine Bradley-Terry and Regression reward modeling. A Llama-3.1-70B-Instruct model tuned with this approach scores 94.1 on RewardBench, emerging top of more than 140 reward models as of 1 Oct 2024. We also demonstrate the effectiveness of this reward model at aligning models to follow instructions in RLHF. We open-source this dataset (CC-BY-4.0 license) at https://huggingface.co/datasets/nvidia/HelpSteer2 and openly release the trained Reward Model at https://huggingface.co/nvidia/Llama-3.1-Nemotron-70B-Reward

HelpSteer2에서 (https://arxiv.org/abs/2406.08673) 했던 Likert Scale 어노테이션에 대해 Pairwise Preference 스타일 어노테이션을 진행해 Reward Model을 Regression으로 학습한 것과 Bradley-Terry 모델로 학습한 것을 비교해봤네요.

In addition to the Likert scale annotations in HelpSteer2 (https://arxiv.org/abs/2406.08673), they conducted pairwise preference style annotations and compared reward models trained using regression versus the Bradley-Terry model.

#reward-model

OpenMathInstruct-2: Accelerating AI for Math with Massive Open-Source Instruction Data

(Shubham Toshniwal, Wei Du, Ivan Moshkov, Branislav Kisacanin, Alexan Ayrapetyan, Igor Gitman)

Mathematical reasoning continues to be a critical challenge in large language model (LLM) development with significant interest. However, most of the cutting-edge progress in mathematical reasoning with LLMs has become \emph{closed-source} due to lack of access to training data. This lack of data access limits researchers from understanding the impact of different choices for synthesizing and utilizing the data. With the goal of creating a high-quality finetuning (SFT) dataset for math reasoning, we conduct careful ablation experiments on data synthesis using the recently released \texttt{Llama3.1} family of models. Our experiments show that: (a) solution format matters, with excessively verbose solutions proving detrimental to SFT performance, (b) data generated by a strong teacher outperforms \emph{on-policy} data generated by a weak student model, (c) SFT is robust to low-quality solutions, allowing for imprecise data filtering, and (d) question diversity is crucial for achieving data scaling gains. Based on these insights, we create the OpenMathInstruct-2 dataset, which consists of 14M question-solution pairs (≈≈ 600K unique questions), making it nearly eight times larger than the previous largest open-source math reasoning dataset. Finetuning the \texttt{Llama-3.1-8B-Base} using OpenMathInstruct-2 outperforms \texttt{Llama3.1-8B-Instruct} on MATH by an absolute 15.9% (51.9% →→ 67.8%). Finally, to accelerate the open-source efforts, we release the code, the finetuned models, and the OpenMathInstruct-2 dataset under a commercially permissive license.

NVIDIA에서 대규모 수학 Instruction 데이터셋을 만들었군요.

Large scale instruction dataset for mathematics made by NVIDIA.

#dataset #instruction-tuning