2024년 10월 19일

Janus: Decoupling Visual Encoding for Unified Multimodal Understanding and Generation

(Chengyue Wu, Xiaokang Chen, Zhiyu Wu, Yiyang Ma, Xingchao Liu, Zizheng Pan, Wen Liu, Zhenda Xie, Xingkai Yu, Chong Ruan, Ping Luo)

In this paper, we introduce Janus, an autoregressive framework that unifies multimodal understanding and generation. Prior research often relies on a single visual encoder for both tasks, such as Chameleon. However, due to the differing levels of information granularity required by multimodal understanding and generation, this approach can lead to suboptimal performance, particularly in multimodal understanding. To address this issue, we decouple visual encoding into separate pathways, while still leveraging a single, unified transformer architecture for processing. The decoupling not only alleviates the conflict between the visual encoder's roles in understanding and generation, but also enhances the framework's flexibility. For instance, both the multimodal understanding and generation components can independently select their most suitable encoding methods. Experiments show that Janus surpasses previous unified model and matches or exceeds the performance of task-specific models. The simplicity, high flexibility, and effectiveness of Janus make it a strong candidate for next-generation unified multimodal models.

DeepSeek의 이미지 생성이 가능한 Multimodal 모델. 이미지 인식에는 SigLIP을 쓰고 생성에는 VQ와 Autoregression을 사용한 방법이군요. 그래서 야누스네요.

실용적으로는 이런 접근이 좋은 방법일 수 있을 텐데 계속 인식과 생성을 동일한 방식으로 하고 싶어지는 이유는 무엇일까요.

This is a multimodal model from DeepSeek capable of image generation. It uses SigLIP for image recognition and VQ with autoregression for generation. That would be reason why it's named Janus.

Practically, this approach could be effective. However, I always have a desire to unify recognition and generation methods.

#autoregressive-model #image-generation #multimodal

Fluid: Scaling Autoregressive Text-to-image Generative Models with Continuous Tokens

(Lijie Fan, Tianhong Li, Siyang Qin, Yuanzhen Li, Chen Sun, Michael Rubinstein, Deqing Sun, Kaiming He, Yonglong Tian)

Scaling up autoregressive models in vision has not proven as beneficial as in large language models. In this work, we investigate this scaling problem in the context of text-to-image generation, focusing on two critical factors: whether models use discrete or continuous tokens, and whether tokens are generated in a random or fixed raster order using BERT- or GPT-like transformer architectures. Our empirical results show that, while all models scale effectively in terms of validation loss, their evaluation performance -- measured by FID, GenEval score, and visual quality -- follows different trends. Models based on continuous tokens achieve significantly better visual quality than those using discrete tokens. Furthermore, the generation order and attention mechanisms significantly affect the GenEval score: random-order models achieve notably better GenEval scores compared to raster-order models. Inspired by these findings, we train Fluid, a random-order autoregressive model on continuous tokens. Fluid 10.5B model achieves a new state-of-the-art zero-shot FID of 6.16 on MS-COCO 30K, and 0.69 overall score on the GenEval benchmark. We hope our findings and results will encourage future efforts to further bridge the scaling gap between vision and language models.

Discrete Token vs Continuous Token + Diffusion, Next Token Prediction vs Masked Autoregression에 대한 Scaling 실험. 이전에 나온 연구와 유사한 세팅입니다. (https://arxiv.org/abs/2406.11838)

결과적으로는 Masked Autoregression + Contiuous Token Diffusion이 좋은 결과를 냈군요. 이 문제는 여전히 탐색할만한 것이 많다는 느낌입니다.

This paper presents scaling experiments comparing discrete tokens versus continuous tokens + diffusion, and next token prediction versus masked autoregression. The experimental setup is similar to a previous study (https://arxiv.org/abs/2406.11838).

The results show that masked autoregression + continuous token diffusion achieved the best performance. It seems there are still many aspects of this problem worth exploring further.

#autoregressive-model #diffusion #scaling-law

SeerAttention: Learning Intrinsic Sparse Attention in Your LLMs

(Yizhao Gao, Zhichen Zeng, Dayou Du, Shijie Cao, Hayden Kwok-Hay So, Ting Cao, Fan Yang, Mao Yang)

Attention is the cornerstone of modern Large Language Models (LLMs). Yet its quadratic complexity limits the efficiency and scalability of LLMs, especially for those with a long-context window. A promising approach addressing this limitation is to leverage the sparsity in attention. However, existing sparsity-based solutions predominantly rely on predefined patterns or heuristics to approximate sparsity. This practice falls short to fully capture the dynamic nature of attention sparsity in language-based tasks. This paper argues that attention sparsity should be learned rather than predefined. To this end, we design SeerAttention, a new Attention mechanism that augments the conventional attention with a learnable gate that adaptively selects significant blocks in an attention map and deems the rest blocks sparse. Such block-level sparsity effectively balances accuracy and speedup. To enable efficient learning of the gating network, we develop a customized FlashAttention implementation that extracts the block-level ground truth of attention map with minimum overhead. SeerAttention not only applies to post-training, but also excels in long-context fine-tuning. Our results show that at post-training stages, SeerAttention significantly outperforms state-of-the-art static or heuristic-based sparse attention methods, while also being more versatile and flexible to adapt to varying context lengths and sparsity ratios. When applied to long-context fine-tuning with YaRN, SeerAttention can achieve a remarkable 90% sparsity ratio at a 32k context length with minimal perplexity loss, offering a 5.67x speedup over FlashAttention-2.

Downsampling된 QK에서 Top-K를 뽑아 Blocksparse Attention을 계산하는 방법이네요.

It's a method that calculates blocksparse attention by selecting the top-K from downsampled QK.

#efficient-attention #sparsity

Artificial Kuramoto Oscillatory Neurons

(Takeru Miyato, Sindy Löwe, Andreas Geiger, Max Welling)

It has long been known in both neuroscience and AI that ``binding'' between neurons leads to a form of competitive learning where representations are compressed in order to represent more abstract concepts in deeper layers of the network. More recently, it was also hypothesized that dynamic (spatiotemporal) representations play an important role in both neuroscience and AI. Building on these ideas, we introduce Artificial Kuramoto Oscillatory Neurons (AKOrN) as a dynamical alternative to threshold units, which can be combined with arbitrary connectivity designs such as fully connected, convolutional, or attentive mechanisms. Our generalized Kuramoto updates bind neurons together through their synchronization dynamics. We show that this idea provides performance improvements across a wide spectrum of tasks such as unsupervised object discovery, adversarial robustness, calibrated uncertainty quantification, and reasoning. We believe that these empirical results show the importance of rethinking our assumptions at the most basic neuronal level of neural representation, and in particular show the importance of dynamical representations.

쿠라모토 모형이라면 동기화 현상을 다루는 그 모형이군요. 동기화 현상을 모델링하는 것으로 스도쿠 같은 문제를 풀 수 있었다는 결과. KAN처럼 한동안 이 모델을 여기저기 적용해봤다는 사례가 많이 나오겠군요.

여담이지만 Takeru Miyato는 Spectral Normalization의 그 Takeru Miyato네요. 반가운 이름이군요.

The Kuramoto model is the model that explains synchronization phenomena. This result shows that by modeling synchronization phenomena, they were able to solve problems like Sudoku. I expect we'll see many cases where this model is applied to various problems for a while, just like KAN.

As a side note, Takeru Miyato is the same Takeru Miyato known for Spectral Normalization. It's nice to see a familiar name.

#neural-network

PUMA: Empowering Unified MLLM with Multi-granular Visual Generation

(Rongyao Fang, Chengqi Duan, Kun Wang, Hao Li, Hao Tian, Xingyu Zeng, Rui Zhao, Jifeng Dai, Hongsheng Li, Xihui Liu)

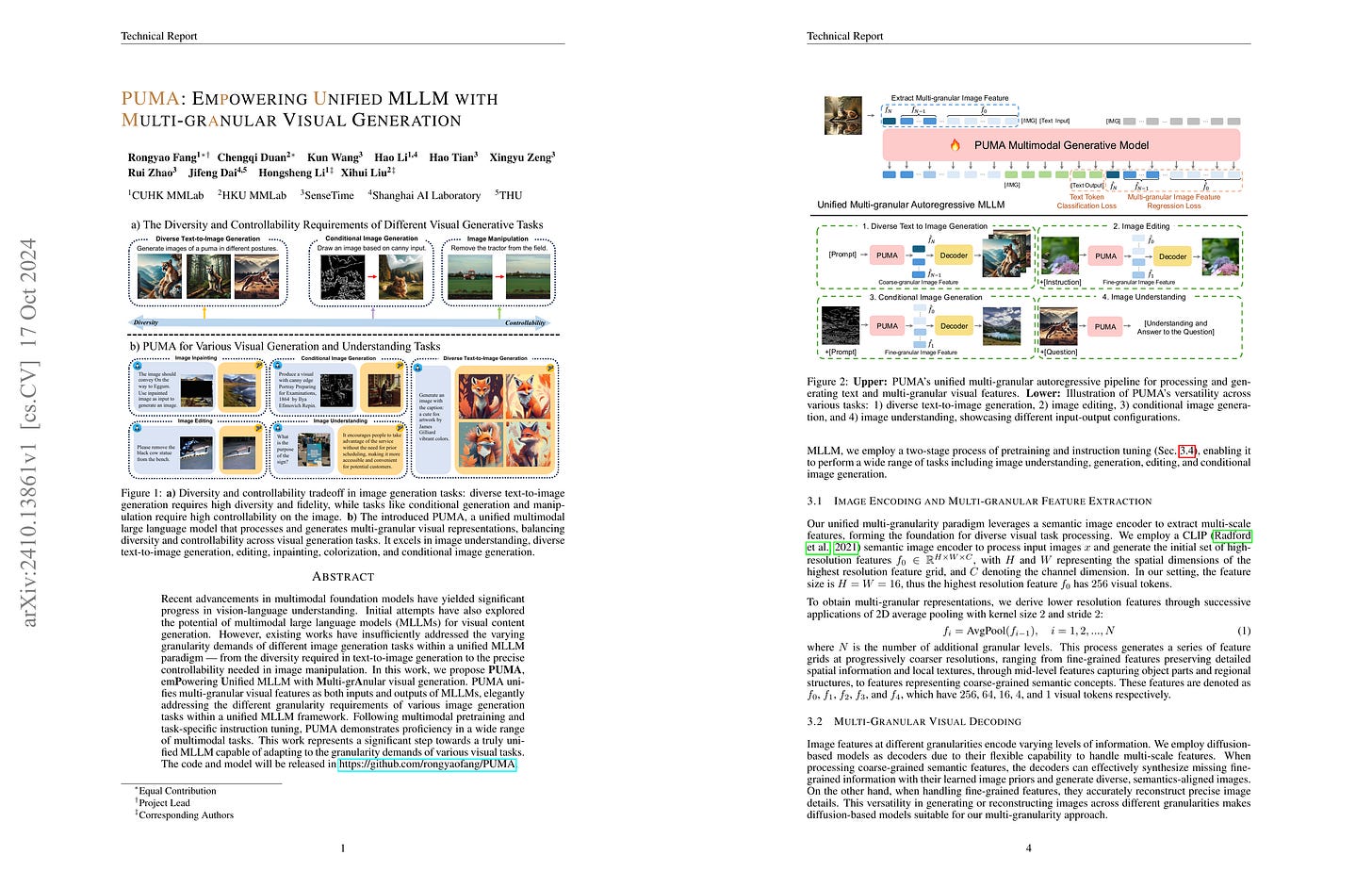

Recent advancements in multimodal foundation models have yielded significant progress in vision-language understanding. Initial attempts have also explored the potential of multimodal large language models (MLLMs) for visual content generation. However, existing works have insufficiently addressed the varying granularity demands of different image generation tasks within a unified MLLM paradigm - from the diversity required in text-to-image generation to the precise controllability needed in image manipulation. In this work, we propose PUMA, emPowering Unified MLLM with Multi-grAnular visual generation. PUMA unifies multi-granular visual features as both inputs and outputs of MLLMs, elegantly addressing the different granularity requirements of various image generation tasks within a unified MLLM framework. Following multimodal pretraining and task-specific instruction tuning, PUMA demonstrates proficiency in a wide range of multimodal tasks. This work represents a significant step towards a truly unified MLLM capable of adapting to the granularity demands of various visual tasks. The code and model will be released in https://github.com/rongyaofang/PUMA.

Multiscale CLIP Feature를 입력으로 받고 마찬가지로 CLIP Feature를 Autoregressive 생성하는 모델. CLIP Feature를 디코더에 넣어 생성하는 형태입니다.

This model uses multiscale CLIP features as input and generates CLIP features autoregressively in a similar manner. The generated CLIP features are then fed into a decoder to produce the final image.

#multimodal #image-generation

How Numerical Precision Affects Mathematical Reasoning Capabilities of LLMs

(Guhao Feng, Kai Yang, Yuntian Gu, Xinyue Ai, Shengjie Luo, Jiacheng Sun, Di He, Zhenguo Li, Liwei Wang)

Despite the remarkable success of Transformer-based Large Language Models (LLMs) across various domains, understanding and enhancing their mathematical capabilities remains a significant challenge. In this paper, we conduct a rigorous theoretical analysis of LLMs' mathematical abilities, with a specific focus on their arithmetic performances. We identify numerical precision as a key factor that influences their effectiveness in mathematical tasks. Our results show that Transformers operating with low numerical precision fail to address arithmetic tasks, such as iterated addition and integer multiplication, unless the model size grows super-polynomially with respect to the input length. In contrast, Transformers with standard numerical precision can efficiently handle these tasks with significantly smaller model sizes. We further support our theoretical findings through empirical experiments that explore the impact of varying numerical precision on arithmetic tasks, providing valuable insights for improving the mathematical reasoning capabilities of LLMs.

Low Precision 트랜스포머는 계산 문제에 대해서 표현력에 제약이 걸린다는 연구. 생각하지 못했던 문제인데 사실이라고 하면 흥미로운 문제네요.

This study suggests that low-precision Transformers have representational limitations for arithmetic tasks. It's an issue I hadn't thought about before, but if it's true, it's certainly an interesting problem.

#transformer

Looking Inward: Language Models Can Learn About Themselves by Introspection

(Felix J Binder, James Chua, Tomek Korbak, Henry Sleight, John Hughes, Robert Long, Ethan Perez, Miles Turpin, Owain Evans)

Humans acquire knowledge by observing the external world, but also by introspection. Introspection gives a person privileged access to their current state of mind (e.g., thoughts and feelings) that is not accessible to external observers. Can LLMs introspect? We define introspection as acquiring knowledge that is not contained in or derived from training data but instead originates from internal states. Such a capability could enhance model interpretability. Instead of painstakingly analyzing a model's internal workings, we could simply ask the model about its beliefs, world models, and goals. More speculatively, an introspective model might self-report on whether it possesses certain internal states such as subjective feelings or desires and this could inform us about the moral status of these states. Such self-reports would not be entirely dictated by the model's training data. We study introspection by finetuning LLMs to predict properties of their own behavior in hypothetical scenarios. For example, "Given the input P, would your output favor the short- or long-term option?" If a model M1 can introspect, it should outperform a different model M2 in predicting M1's behavior even if M2 is trained on M1's ground-truth behavior. The idea is that M1 has privileged access to its own behavioral tendencies, and this enables it to predict itself better than M2 (even if M2 is generally stronger). In experiments with GPT-4, GPT-4o, and Llama-3 models (each finetuned to predict itself), we find that the model M1 outperforms M2 in predicting itself, providing evidence for introspection. Notably, M1 continues to predict its behavior accurately even after we intentionally modify its ground-truth behavior. However, while we successfully elicit introspection on simple tasks, we are unsuccessful on more complex tasks or those requiring out-of-distribution generalization.

모델이 자기 자신의 특성을 예측하는 것이 다른 모델의 특성을 예측하는 것보다 쉽다는 연구. 모델이 자기 자신에 대한 Privileged Information을 갖고 있을 수 있다는 아이디어입니다. 재미있네요.

This research shows that it's easier for a model to predict its own characteristics than to predict those of other models. The idea is that a model may possess privileged information about itself. Interesting!

#llm

γ-MoD: Exploring Mixture-of-Depth Adaptation for Multimodal Large Language Models

(Yaxin Luo, Gen Luo, Jiayi Ji, Yiyi Zhou, Xiaoshuai Sun, Zhiqiang Shen, Rongrong Ji)

Despite the significant progress in multimodal large language models (MLLMs), their high computational cost remains a barrier to real-world deployment. Inspired by the mixture of depths (MoDs) in natural language processing, we aim to address this limitation from the perspective of ``activated tokens''. Our key insight is that if most tokens are redundant for the layer computation, then can be skipped directly via the MoD layer. However, directly converting the dense layers of MLLMs to MoD layers leads to substantial performance degradation. To address this issue, we propose an innovative MoD adaptation strategy for existing MLLMs called γ-MoD. In γ-MoD, a novel metric is proposed to guide the deployment of MoDs in the MLLM, namely rank of attention maps (ARank). Through ARank, we can effectively identify which layer is redundant and should be replaced with the MoD layer. Based on ARank, we further propose two novel designs to maximize the computational sparsity of MLLM while maintaining its performance, namely shared vision-language router and masked routing learning. With these designs, more than 90% dense layers of the MLLM can be effectively converted to the MoD ones. To validate our method, we apply it to three popular MLLMs, and conduct extensive experiments on 9 benchmark datasets. Experimental results not only validate the significant efficiency benefit of γ-MoD to existing MLLMs but also confirm its generalization ability on various MLLMs. For example, with a minor performance drop, i.e., -1.5%, γ-MoD can reduce the training and inference time of LLaVA-HR by 31.0% and 53.2%, respectively.

기존 Multimodal 모델에 Mixture of Depths를 (https://arxiv.org/abs/2404.02258) 적용하는 방법. Mixture of Depths를 적용할 레이어는 Attention 행렬의 랭크를 통해 찾아냅니다. 랭크가 낮다면 토큰에 중복이 많다는 것이죠. Multimodal 모델에 대해서는 다들 정보량이 낮은 토큰이 많다고 생각해서인지 Mixture of Depths를 적용한 사례가 종종 나오네요. (https://arxiv.org/abs/2408.16730)

This paper presents a method for applying Mixture of Depths (https://arxiv.org/abs/2404.02258) to existing multimodal models. The layers suitable for applying Mixture of Depths are identified using the rank of the attention matrix. A low rank indicates a high level of token redundancy. It seems that the frequent application of Mixture of Depths to multimodal models is due to the common belief that these models contain many tokens with low information content. (https://arxiv.org/abs/2408.16730)

#multimodal #moe #efficiency