October 17, 2024

Long-LRM: Long-sequence Large Reconstruction Model for Wide-coverage Gaussian Splats

(Chen Ziwen, Hao Tan, Kai Zhang, Sai Bi, Fujun Luan, Yicong Hong, Li Fuxin, Zexiang Xu)

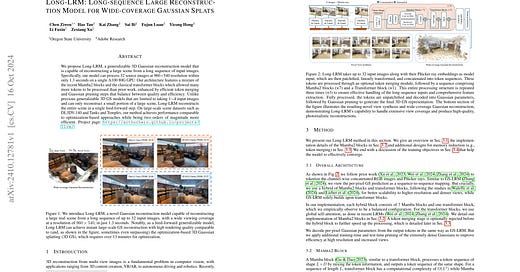

We propose Long-LRM, a generalizable 3D Gaussian reconstruction model that is capable of reconstructing a large scene from a long sequence of input images. Specifically, our model can process 32 source images at 960x540 resolution within only 1.3 seconds on a single A100 80G GPU. Our architecture features a mixture of the recent Mamba2 blocks and the classical transformer blocks which allowed many more tokens to be processed than prior work, enhanced by efficient token merging and Gaussian pruning steps that balance between quality and efficiency. Unlike previous feed-forward models that are limited to processing 1~4 input images and can only reconstruct a small portion of a large scene, Long-LRM reconstructs the entire scene in a single feed-forward step. On large-scale scene datasets such as DL3DV-140 and Tanks and Temples, our method achieves performance comparable to optimization-based approaches while being two orders of magnitude more efficient. Project page: https://arthurhero.github.io/projects/llrm


Now that long-context processing has become routine, even problems like novel view synthesis can be approached by simply concatenating the input images into one sequence.
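As a rough illustration of what "concatenating images" means at the token level, here is a minimal sketch. The patch size, image shapes, and function names are assumptions made for the example, not values from the paper, and a real model would also attach camera information to each token.

```python
import numpy as np

def patchify(images, patch):
    """Split each view into non-overlapping patches and flatten each patch into a token."""
    v, h, w, c = images.shape
    assert h % patch == 0 and w % patch == 0, "toy example assumes divisible sizes"
    x = images.reshape(v, h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 1, 3, 2, 4, 5)  # (views, rows, cols, patch, patch, channels)
    return x.reshape(v, (h // patch) * (w // patch), patch * patch * c)

def concat_views(images, patch):
    """Concatenate the tokens of all views into one long sequence."""
    tokens = patchify(images, patch)
    return tokens.reshape(-1, tokens.shape[-1])

# Hypothetical toy input: 4 views of 16x24 RGB images, patch size 8 (assumed).
views = np.arange(4 * 16 * 24 * 3, dtype=np.float32).reshape(4, 16, 24, 3)
sequence = concat_views(views, patch=8)
tokens_per_view = (16 // 8) * (24 // 8)
```

The sequence length grows linearly with the number of views and with image area, which is why long inputs at high resolution require a backbone that can handle many tokens.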

#long-context #novel-view-synthesis

One Step Diffusion via Shortcut Models

(Kevin Frans, Danijar Hafner, Sergey Levine, Pieter Abbeel)

Diffusion models and flow-matching models have enabled generating diverse and realistic images by learning to transfer noise to data. However, sampling from these models involves iterative denoising over many neural network passes, making generation slow and expensive. Previous approaches for speeding up sampling require complex training regimes, such as multiple training phases, multiple networks, or fragile scheduling. We introduce shortcut models, a family of generative models that use a single network and training phase to produce high-quality samples in a single or multiple sampling steps. Shortcut models condition the network not only on the current noise level but also on the desired step size, allowing the model to skip ahead in the generation process. Across a wide range of sampling step budgets, shortcut models consistently produce higher quality samples than previous approaches, such as consistency models and reflow. Compared to distillation, shortcut models reduce complexity to a single network and training phase and additionally allow varying step budgets at inference time.

This is a simple method that supports a variable number of sampling steps. The step size is given to the model as an input, and training uses a self-distillation-style objective that requires two consecutive steps of a given size to match a single step of twice that size.
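The two-steps-equal-one-double-step constraint can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: `model(x, t, d)` is a hypothetical callable that returns the average velocity over a step of size `d`, and the two example "models" are closed-form functions for the linear ODE dx/dt = Kx rather than trained networks.

```python
import numpy as np

def self_consistency_target(model, x_t, t, d):
    """Build the target for a step of size 2*d from two chained steps of size d."""
    s1 = model(x_t, t, d)
    x_mid = x_t + d * s1            # take the first small step
    s2 = model(x_mid, t + d, d)     # second small step from the new point
    return 0.5 * (s1 + s2)          # average velocity over the combined 2*d step

def self_consistency_loss(model, x_t, t, d):
    # In actual training the target would be held constant (no gradient through it).
    target = self_consistency_target(model, x_t, t, d)
    pred = model(x_t, t, 2.0 * d)
    return float(np.mean((pred - target) ** 2))

K = -1.0  # assumed toy dynamics: dx/dt = K * x

def exact_shortcut(x, t, d):
    """Exact average velocity over a step of size d for the toy ODE."""
    return x * np.expm1(K * d) / d

def step_blind_model(x, t, d):
    """Ignores the step size and returns the instantaneous velocity."""
    return K * x

x = np.array([1.0, -2.0, 0.5])
loss_exact = self_consistency_loss(exact_shortcut, x, t=0.0, d=0.25)
loss_blind = self_consistency_loss(step_blind_model, x, t=0.0, d=0.25)
```

The exact shortcut function satisfies the constraint up to floating-point error, while the model that ignores `d` does not, which is the signal the objective uses to teach the network to account for step size.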

#diffusion

Stabilize the Latent Space for Image Autoregressive Modeling: A Unified Perspective

(Yongxin Zhu, Bocheng Li, Hang Zhang, Xin Li, Linli Xu, Lidong Bing)

Latent-based image generative models, such as Latent Diffusion Models (LDMs) and Mask Image Models (MIMs), have achieved notable success in image generation tasks. These models typically leverage reconstructive autoencoders like VQGAN or VAE to encode pixels into a more compact latent space and learn the data distribution in the latent space instead of directly from pixels. However, this practice raises a pertinent question: Is it truly the optimal choice? In response, we begin with an intriguing observation: despite sharing the same latent space, autoregressive models significantly lag behind LDMs and MIMs in image generation. This finding contrasts sharply with the field of NLP, where the autoregressive model GPT has established a commanding presence. To address this discrepancy, we introduce a unified perspective on the relationship between latent space and generative models, emphasizing the stability of latent space in image generative modeling. Furthermore, we propose a simple but effective discrete image tokenizer to stabilize the latent space for image generative modeling. Experimental results show that image autoregressive modeling with our tokenizer (DiGIT) benefits both image understanding and image generation with the next token prediction principle, which is inherently straightforward for GPT models but challenging for other generative models. Remarkably, for the first time, a GPT-style autoregressive model for images outperforms LDMs, which also exhibits substantial improvement akin to GPT when scaling up model size. Our findings underscore the potential of an optimized latent space and the integration of discrete tokenization in advancing the capabilities of image generative models. The code is available at https://github.com/DAMO-NLP-SG/DiGIT.

The idea is that VQ tokens learned through reconstruction may not be well suited to generation. Instead, the authors extract tokens by running K-means on the features of an SSL-trained encoder. Similar work has appeared before (https://arxiv.org/abs/2406.11837, https://arxiv.org/abs/2407.09087).

This kind of two-stage training has a fundamental problem: no training signal passes between the stages. And, generally speaking, learning something is better than not learning it.
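The K-means tokenization step mentioned above can be sketched roughly as below. Everything here is an illustrative assumption: the random array stands in for patch features from a frozen SSL encoder, the codebook size and feature dimension are arbitrary, and the plain Lloyd iterations are a generic K-means, not the paper's exact procedure.

```python
import numpy as np

def assign_tokens(features, centroids):
    """Map each feature vector to the index of its nearest centroid."""
    d2 = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

def fit_kmeans(features, k, iters=20, seed=0):
    """Plain Lloyd's algorithm; returns a (k, dim) codebook of centroids."""
    rng = np.random.default_rng(seed)
    centroids = features[rng.choice(len(features), size=k, replace=False)].copy()
    for _ in range(iters):
        ids = assign_tokens(features, centroids)
        for j in range(k):
            members = features[ids == j]
            if len(members) > 0:        # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return centroids

rng = np.random.default_rng(0)
# Stand-in for frozen SSL encoder outputs: 8 images x 16 patches x 32 dims (assumed).
patch_features = rng.normal(size=(8, 16, 32))
codebook = fit_kmeans(patch_features.reshape(-1, 32), k=10)
token_grid = assign_tokens(patch_features[0], codebook)  # discrete tokens for image 0
```

The resulting integer tokens can then be fed to an autoregressive model. Note that the encoder and codebook stay fixed in this setup, which is exactly the point where no training signal flows back from the generator.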

#vq #autoregressive-model