2024년 10월 16일

Adaptive Data Optimization: Dynamic Sample Selection with Scaling Laws

(Yiding Jiang, Allan Zhou, Zhili Feng, Sadhika Malladi, J. Zico Kolter)

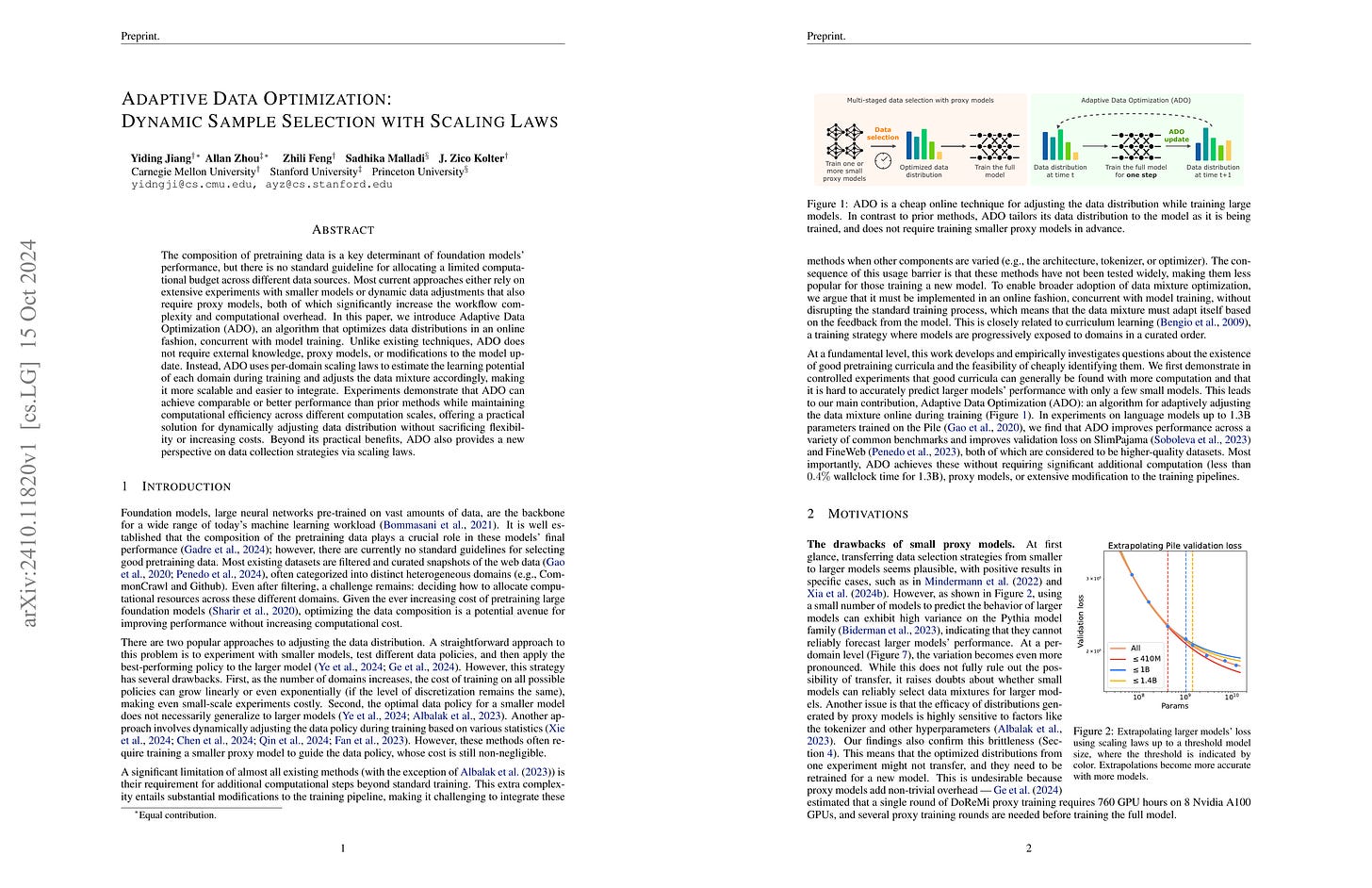

The composition of pretraining data is a key determinant of foundation models' performance, but there is no standard guideline for allocating a limited computational budget across different data sources. Most current approaches either rely on extensive experiments with smaller models or dynamic data adjustments that also require proxy models, both of which significantly increase the workflow complexity and computational overhead. In this paper, we introduce Adaptive Data Optimization (ADO), an algorithm that optimizes data distributions in an online fashion, concurrent with model training. Unlike existing techniques, ADO does not require external knowledge, proxy models, or modifications to the model update. Instead, ADO uses per-domain scaling laws to estimate the learning potential of each domain during training and adjusts the data mixture accordingly, making it more scalable and easier to integrate. Experiments demonstrate that ADO can achieve comparable or better performance than prior methods while maintaining computational efficiency across different computation scales, offering a practical solution for dynamically adjusting data distribution without sacrificing flexibility or increasing costs. Beyond its practical benefits, ADO also provides a new perspective on data collection strategies via scaling laws.

각 도메인에 대한 학습 Loss를 추정하는 Scaling Law를 사용해 데이터셋의 비율 결정. 재미있는 것은 각 도메인의 토큰 수의 비율로 비율을 설정하는 베이스라인이 상당히 강력하다는 것이네요.

The paper uses scaling laws that estimate training loss for each domain to determine the ratio of data from different domains in the dataset. Interestingly, the baseline method of setting the ratio based on the number of tokens per domain proves to be quite powerful.

#scaling-law #dataset

A Hitchhiker's Guide to Scaling Law Estimation

(Leshem Choshen, Yang Zhang, Jacob Andreas)

Scaling laws predict the loss of a target machine learning model by extrapolating from easier-to-train models with fewer parameters or smaller training sets. This provides an efficient way for practitioners and researchers alike to compare pretraining decisions involving optimizers, datasets, and model architectures. Despite the widespread use of scaling laws to model the dynamics of language model training, there has been little work on understanding how to best estimate and interpret them. We collect (and release) a large-scale dataset containing losses and downstream evaluations for 485 previously published pretrained models. We use these to estimate more than 1000 scaling laws, then derive a set of best practices for estimating scaling laws in new model families. We find that fitting scaling laws to intermediate checkpoints of training runs (and not just their final losses) substantially improves accuracy, and that -- all else equal -- estimates of performance are generally most accurate when derived from other models of similar sizes. However, because there is a significant degree of variability across model seeds, training multiple small models is sometimes more useful than training a single large one. Moreover, while different model families differ scaling behavior, they are often similar enough that a target model's behavior can be predicted from a single model with the same architecture, along with scaling parameter estimates derived from other model families.

Scaling Law를 효율적으로 추정할 수 있는 방법들에 대한 고찰. 예를 들어 학습 중간 체크포인트도 추정에 사용하는 것이 오히려 더 정확하다 같은 탐색입니다.

A study on efficient methods for estimating Scaling Laws. For instance, it explores findings such as how using intermediate checkpoints during training can actually lead to more accurate estimations.

#scaling-law

Simplifying, Stabilizing and Scaling Continuous-Time Consistency Models

(Cheng Lu, Yang Song)

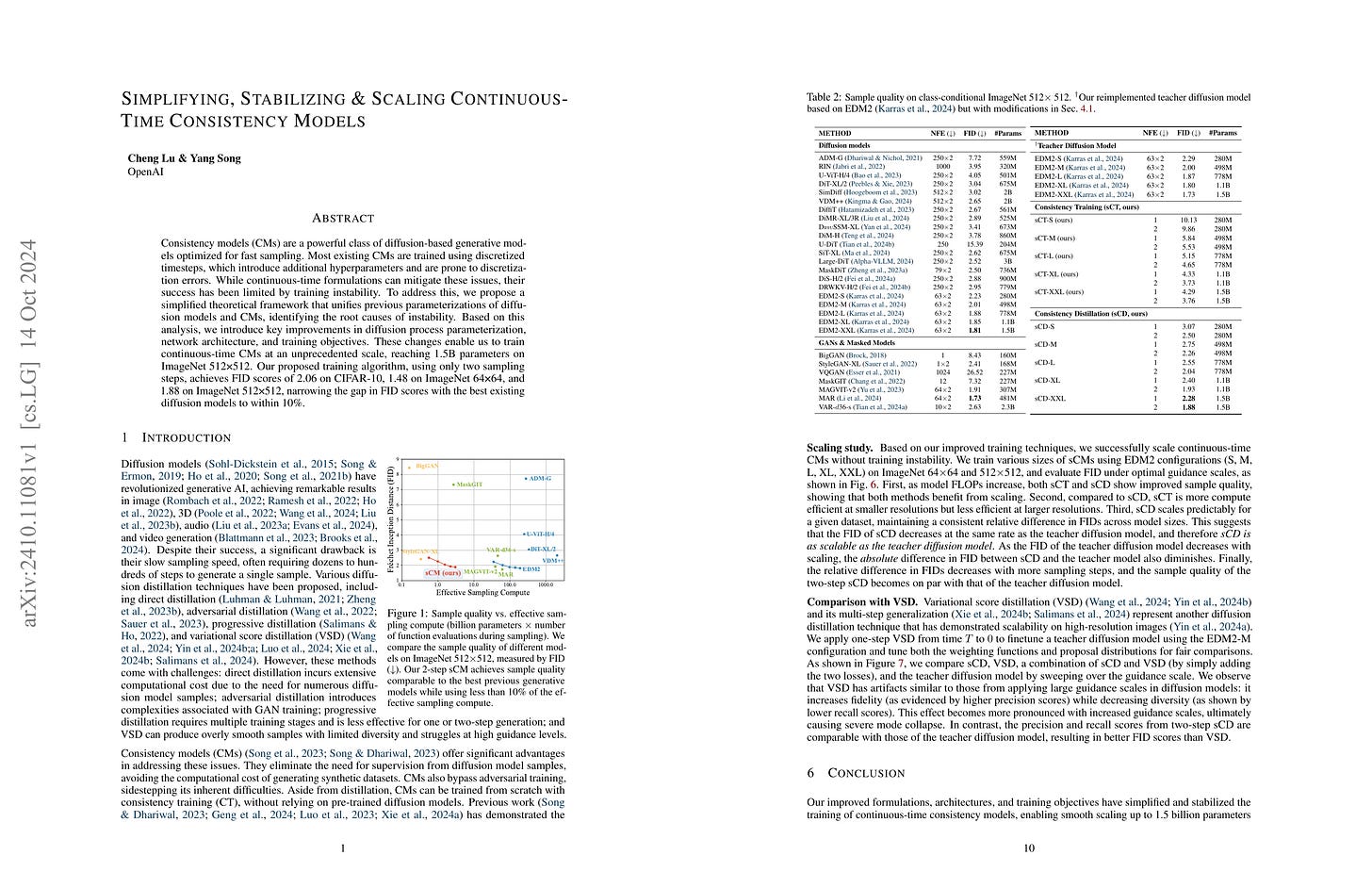

Consistency models (CMs) are a powerful class of diffusion-based generative models optimized for fast sampling. Most existing CMs are trained using discretized timesteps, which introduce additional hyperparameters and are prone to discretization errors. While continuous-time formulations can mitigate these issues, their success has been limited by training instability. To address this, we propose a simplified theoretical framework that unifies previous parameterizations of diffusion models and CMs, identifying the root causes of instability. Based on this analysis, we introduce key improvements in diffusion process parameterization, network architecture, and training objectives. These changes enable us to train continuous-time CMs at an unprecedented scale, reaching 1.5B parameters on ImageNet 512x512. Our proposed training algorithm, using only two sampling steps, achieves FID scores of 2.06 on CIFAR-10, 1.48 on ImageNet 64x64, and 1.88 on ImageNet 512x512, narrowing the gap in FID scores with the best existing diffusion models to within 10%.

Continuous-Time Consistency Model에 대한 학습 안정성 개선. 이쪽은 Consistency Model에 대한 개선 작업을 계속하고 있군요.

Improvements in training stability for continuous-time consistency models. It seems the authors are continuing their work on enhancing consistency models.

#diffusion