October 15, 2024

Thinking LLMs: General Instruction Following with Thought Generation

(Tianhao Wu, Janice Lan, Weizhe Yuan, Jiantao Jiao, Jason Weston, Sainbayar Sukhbaatar)

LLMs are typically trained to answer user questions or follow instructions similarly to how human experts respond. However, in the standard alignment framework they lack the basic ability of explicit thinking before answering. Thinking is important for complex questions that require reasoning and planning -- but can be applied to any task. We propose a training method for equipping existing LLMs with such thinking abilities for general instruction following without use of additional human data. We achieve this by an iterative search and optimization procedure that explores the space of possible thought generations, allowing the model to learn how to think without direct supervision. For each instruction, the thought candidates are scored using a judge model to evaluate their responses only, and then optimized via preference optimization. We show that this procedure leads to superior performance on AlpacaEval and Arena-Hard, and shows gains from thinking on non-reasoning categories such as marketing, health and general knowledge, in addition to more traditional reasoning & problem-solving tasks.

This paper adds an explicit thinking step to general instruction following. The model is prompted to generate a thought before answering, and a reward model then scores only the final response, with the generated thought excluded from evaluation.
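The scoring step can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the `<response>` marker, the `generate` and `judge` callables, and `num_samples` are all hypothetical names chosen for this example. The point it shows is that the judge sees only the response, while the full output (thought plus response) is what ends up in the preference pair.

```python
from typing import Callable, List, Tuple

# Hypothetical marker separating the hidden thought from the visible response.
RESPONSE_MARKER = "<response>"


def split_thought_and_response(output: str) -> Tuple[str, str]:
    """Split a raw generation into (thought, response) at the marker."""
    thought, sep, response = output.partition(RESPONSE_MARKER)
    if not sep:
        # No marker found: treat the whole output as the response.
        return "", output.strip()
    return thought.strip(), response.strip()


def build_preference_pair(
    instruction: str,
    generate: Callable[[str], str],      # assumed sampler: instruction -> raw output
    judge: Callable[[str, str], float],  # assumed scorer: (instruction, response) -> score
    num_samples: int = 4,
) -> Tuple[str, str]:
    """Sample several outputs and return (chosen, rejected) full outputs."""
    scored: List[Tuple[float, str]] = []
    for _ in range(num_samples):
        output = generate(instruction)
        _, response = split_thought_and_response(output)
        # The judge is given the response only; the thought stays hidden.
        scored.append((judge(instruction, response), output))
    scored.sort(key=lambda item: item[0])
    rejected = scored[0][1]   # lowest-scoring full output
    chosen = scored[-1][1]    # highest-scoring full output
    return chosen, rejected
```

The resulting pairs would then feed a preference-optimization step; which optimizer is used, and how pairs are selected across iterations, is left out of this sketch.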

#instruction-tuning #reasoning

Rethinking Data Selection at Scale: Random Selection is Almost All You Need

(Tingyu Xia, Bowen Yu, Kai Dang, An Yang, Yuan Wu, Yuan Tian, Yi Chang, Junyang Lin)

Supervised fine-tuning (SFT) is crucial for aligning Large Language Models (LLMs) with human instructions. The primary goal during SFT is to select a small yet representative subset of training data from the larger pool, such that fine-tuning with this subset achieves results comparable to or even exceeding those obtained using the entire dataset. However, most existing data selection techniques are designed for small-scale data pools, which fail to meet the demands of real-world SFT scenarios. In this paper, we replicated several self-scoring methods, those that do not rely on external model assistance, on two-million-scale datasets, and found that nearly all methods struggled to significantly outperform random selection when dealing with such large-scale data pools. Moreover, our comparisons suggest that, during SFT, diversity in data selection is more critical than simply focusing on high quality data. We also analyzed the limitations of several current approaches, explaining why they perform poorly on large-scale datasets and why they are unsuitable for such contexts. Finally, we found that filtering data by token length offers a stable and efficient method for improving results. This approach, particularly when training on long text data, proves highly beneficial for relatively weaker base models, such as Llama3.

On the SFT data selection problem: once the data pool grows to the scale of millions of samples, random selection holds up as well as or better than the dedicated selection methods tested.
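The two baselines the abstract highlights, random selection and token-length filtering, can be sketched as below. This is a rough illustration under stated assumptions: the `response` field name is hypothetical, whitespace splitting stands in for a real tokenizer, and "keep the longest examples" is one possible reading of filtering by token length.

```python
import random
from typing import Dict, List


def random_select(pool: List[Dict[str, str]], k: int, seed: int = 0) -> List[Dict[str, str]]:
    """Uniformly sample k examples without replacement (seeded for repeatability)."""
    rng = random.Random(seed)
    return rng.sample(pool, k)


def select_by_token_length(pool: List[Dict[str, str]], k: int) -> List[Dict[str, str]]:
    """Keep the k examples with the longest responses.

    Assumption: whitespace-separated words approximate token counts;
    a real pipeline would count tokens with the model's tokenizer.
    """
    return sorted(pool, key=lambda ex: len(ex["response"].split()), reverse=True)[:k]
```

Both functions cost almost nothing next to model-based scoring, which is part of why they are attractive at this scale.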

#alignment #dataset

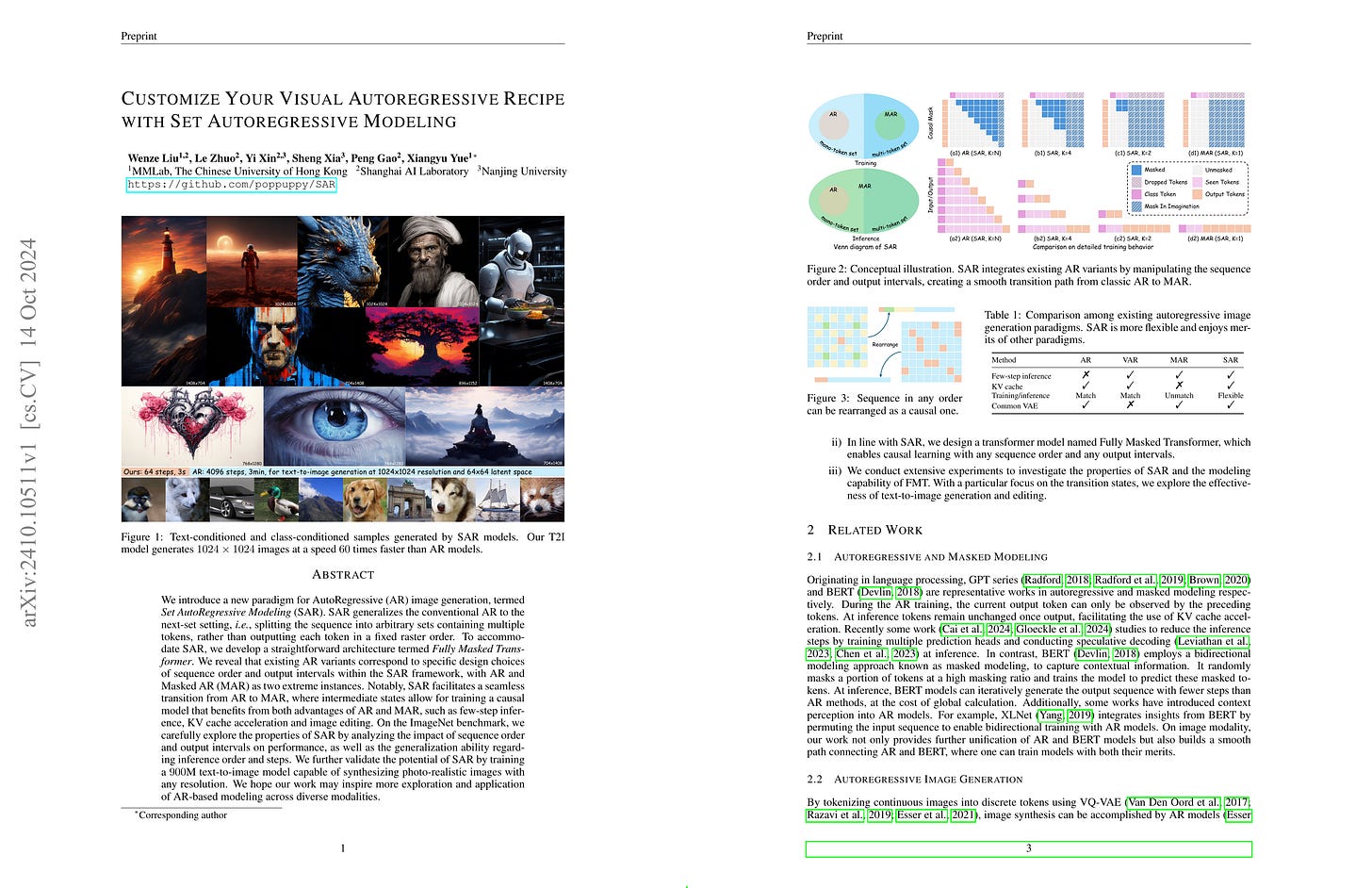

Customize Your Visual Autoregressive Recipe with Set Autoregressive Modeling

(Wenze Liu, Le Zhuo, Yi Xin, Sheng Xia, Peng Gao, Xiangyu Yue)

We introduce a new paradigm for AutoRegressive (AR) image generation, termed Set AutoRegressive Modeling (SAR). SAR generalizes the conventional AR to the next-set setting, i.e., splitting the sequence into arbitrary sets containing multiple tokens, rather than outputting each token in a fixed raster order. To accommodate SAR, we develop a straightforward architecture termed Fully Masked Transformer. We reveal that existing AR variants correspond to specific design choices of sequence order and output intervals within the SAR framework, with AR and Masked AR (MAR) as two extreme instances. Notably, SAR facilitates a seamless transition from AR to MAR, where intermediate states allow for training a causal model that benefits from both few-step inference and KV cache acceleration, thus leveraging the advantages of both AR and MAR. On the ImageNet benchmark, we carefully explore the properties of SAR by analyzing the impact of sequence order and output intervals on performance, as well as the generalization ability regarding inference order and steps. We further validate the potential of SAR by training a 900M text-to-image model capable of synthesizing photo-realistic images with any resolution. We hope our work may inspire more exploration and application of AR-based modeling across diverse modalities.

This paper generalizes autoregressive image generation from predicting one token at a time to predicting sets of tokens from the image token sequence. The implementation uses an encoder-decoder architecture. If the first question is whether autoregressive generation can reach high quality, the practical follow-up is whether it can generate fast enough.
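The next-set idea can be made concrete with a small sketch. The function names here are hypothetical, and the masking rule (a token conditions only on tokens from strictly earlier sets, so tokens within a set are predicted in parallel) is an assumption made for illustration rather than a description of the paper's Fully Masked Transformer.

```python
from typing import Dict, List


def split_into_sets(order: List[int], set_sizes: List[int]) -> List[List[int]]:
    """Partition a token ordering into consecutive sets of the given sizes."""
    if sum(set_sizes) != len(order):
        raise ValueError("set sizes must sum to the sequence length")
    sets: List[List[int]] = []
    start = 0
    for size in set_sizes:
        sets.append(order[start:start + size])
        start += size
    return sets


def set_causal_mask(sets: List[List[int]], seq_len: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may condition on position j,
    i.e. when j belongs to a strictly earlier set than i."""
    set_index: Dict[int, int] = {}
    for k, members in enumerate(sets):
        for pos in members:
            set_index[pos] = k
    return [
        [set_index[j] < set_index[i] for j in range(seq_len)]
        for i in range(seq_len)
    ]
```

With raster order and all set sizes equal to 1, `split_into_sets([0, 1, 2, 3], [1, 1, 1, 1])` gives the usual next-token setting. A shuffled order with a few large sets, such as `split_into_sets([3, 0, 2, 1], [2, 2])`, moves toward few-step generation.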

#autoregressive-model #image-generation

HART: Efficient Visual Generation with Hybrid Autoregressive Transformer

(Haotian Tang, Yecheng Wu, Shang Yang, Enze Xie, Junsong Chen, Junyu Chen, Zhuoyang Zhang, Han Cai, Yao Lu, Song Han)

We introduce Hybrid Autoregressive Transformer (HART), an autoregressive (AR) visual generation model capable of directly generating 1024x1024 images, rivaling diffusion models in image generation quality. Existing AR models face limitations due to the poor image reconstruction quality of their discrete tokenizers and the prohibitive training costs associated with generating 1024px images. To address these challenges, we present the hybrid tokenizer, which decomposes the continuous latents from the autoencoder into two components: discrete tokens representing the big picture and continuous tokens representing the residual components that cannot be represented by the discrete tokens. The discrete component is modeled by a scalable-resolution discrete AR model, while the continuous component is learned with a lightweight residual diffusion module with only 37M parameters. Compared with the discrete-only VAR tokenizer, our hybrid approach improves reconstruction FID from 2.11 to 0.30 on MJHQ-30K, leading to a 31% generation FID improvement from 7.85 to 5.38. HART also outperforms state-of-the-art diffusion models in both FID and CLIP score, with 4.5-7.7x higher throughput and 6.9-13.4x lower MACs. Our code is open sourced at https://github.com/mit-han-lab/hart.

The VQ tokenizer is modified so that continuous tokens can also be decoded, and training then uses the residual between the continuous and discrete tokens. The key idea is to place a diffusion module on top of the autoregressive model to learn this residual. Incidentally, Visual Autoregressive Modeling (VAR) seems to have quietly established itself. (https://arxiv.org/abs/2404.02905)
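The discrete-plus-residual decomposition can be illustrated with a toy example. This is a simplified sketch: a single nearest-neighbor codebook lookup stands in for the paper's tokenizer, and the name `quantize` and the two-dimensional codebook are made up for the example.

```python
from typing import List, Sequence, Tuple


def quantize(
    latent: Sequence[float], codebook: Sequence[Sequence[float]]
) -> Tuple[int, List[float], List[float]]:
    """Return (code index, quantized vector, residual) for one latent vector.

    The discrete token is the index of the nearest codebook entry; the
    residual is the part of the continuous latent that the discrete token
    cannot represent.
    """
    def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    index = min(range(len(codebook)), key=lambda i: squared_distance(latent, codebook[i]))
    quantized = list(codebook[index])
    residual = [x - q for x, q in zip(latent, quantized)]
    return index, quantized, residual


# Toy usage: the continuous latent equals the quantized vector plus the residual.
toy_codebook = [[0.0, 0.0], [1.0, 1.0]]
toy_index, toy_quantized, toy_residual = quantize([0.9, 1.2], toy_codebook)
```

In this picture the autoregressive model would predict `toy_index`, and the residual diffusion module would be responsible for `toy_residual`.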

#vq #autoregressive-model #diffusion