2024년 10월 14일

On the token distance modeling ability of higher RoPE attention dimension

(Xiangyu Hong, Che Jiang, Biqing Qi, Fandong Meng, Mo Yu, Bowen Zhou, Jie Zhou)

Length extrapolation algorithms based on Rotary position embedding (RoPE) have shown promising results in extending the context length of language models. However, understanding how position embedding can capture longer-range contextual information remains elusive. Based on the intuition that different dimensions correspond to different frequency of changes in RoPE encoding, we conducted a dimension-level analysis to investigate the correlation between a hidden dimension of an attention head and its contribution to capturing long-distance dependencies. Using our correlation metric, we identified a particular type of attention heads, which we named Positional Heads, from various length-extrapolated models. These heads exhibit a strong focus on long-range information interaction and play a pivotal role in long input processing, as evidence by our ablation. We further demonstrate the correlation between the efficiency of length extrapolation and the extension of the high-dimensional attention allocation of these heads. The identification of Positional Heads provides insights for future research in long-text comprehension.

토큰 사이의 거리 증가에 따라 RoPE의 저주파수 차원을 선호하는 헤드가 있고 이 헤드가 Long Context 문제에 대해서 중요하다는 아이디어. 저주파수 영역이 Semantic Attention의 기능을 한다는 분석과 (https://arxiv.org/abs/2410.06205) 결합해서 생각하는 것이 좋겠죠.

This paper presents the idea that there are attention heads which prefer low-frequency dimensions in RoPE as the distance between tokens increases, and these heads are crucial for handling long-context problems. It would be beneficial to consider this in conjunction with the analysis that low-frequency domains function as semantic attention (https://arxiv.org/abs/2410.06205).

#positional-encoding #long-context

Scaling Laws for Predicting Downstream Performance in LLMs

(Yangyi Chen, Binxuan Huang, Yifan Gao, Zhengyang Wang, Jingfeng Yang, Heng Ji)

Precise estimation of downstream performance in large language models (LLMs) prior to training is essential for guiding their development process. Scaling laws analysis utilizes the statistics of a series of significantly smaller sampling language models (LMs) to predict the performance of the target LLM. For downstream performance prediction, the critical challenge lies in the emergent abilities in LLMs that occur beyond task-specific computational thresholds. In this work, we focus on the pre-training loss as a more computation-efficient metric for performance estimation. Our two-stage approach consists of first estimating a function that maps computational resources (e.g., FLOPs) to the pre-training Loss using a series of sampling models, followed by mapping the pre-training loss to downstream task Performance after the critical "emergent phase". In preliminary experiments, this FLP solution accurately predicts the performance of LLMs with 7B and 13B parameters using a series of sampling LMs up to 3B, achieving error margins of 5% and 10%, respectively, and significantly outperforming the FLOPs-to-Performance approach. This motivates FLP-M, a fundamental approach for performance prediction that addresses the practical need to integrate datasets from multiple sources during pre-training, specifically blending general corpora with code data to accurately represent the common necessity. FLP-M extends the power law analytical function to predict domain-specific pre-training loss based on FLOPs across data sources, and employs a two-layer neural network to model the non-linear relationship between multiple domain-specific loss and downstream performance. By utilizing a 3B LLM trained on a specific ratio and a series of smaller sampling LMs, FLP-M can effectively forecast the performance of 3B and 7B LLMs across various data mixtures for most benchmarks within 10% error margins.

도메인에 대한 Loss의 Scaling Law와 도메인 Loss를 사용한 벤치마크 점수 예측을 조합하여 벤치마크에 대한 Scaling Law를 구성. 과거 벤치마크에 대한 Scaling Law 추정을 시도한 작업들과 (https://arxiv.org/abs/2403.08540, https://arxiv.org/abs/2404.09937) 비슷한 점이 있습니다.

This work constructs a scaling law for benchmarks by combining the scaling law for domain-specific loss with benchmark score prediction using domain loss. There are similarities to previous works that attempted to estimate scaling laws for benchmarks (https://arxiv.org/abs/2403.08540, https://arxiv.org/abs/2404.09937).

#scaling-law

ElasticTok: Adaptive Tokenization for Image and Video

(Wilson Yan, Matei Zaharia, Volodymyr Mnih, Pieter Abbeel, Aleksandra Faust, Hao Liu)

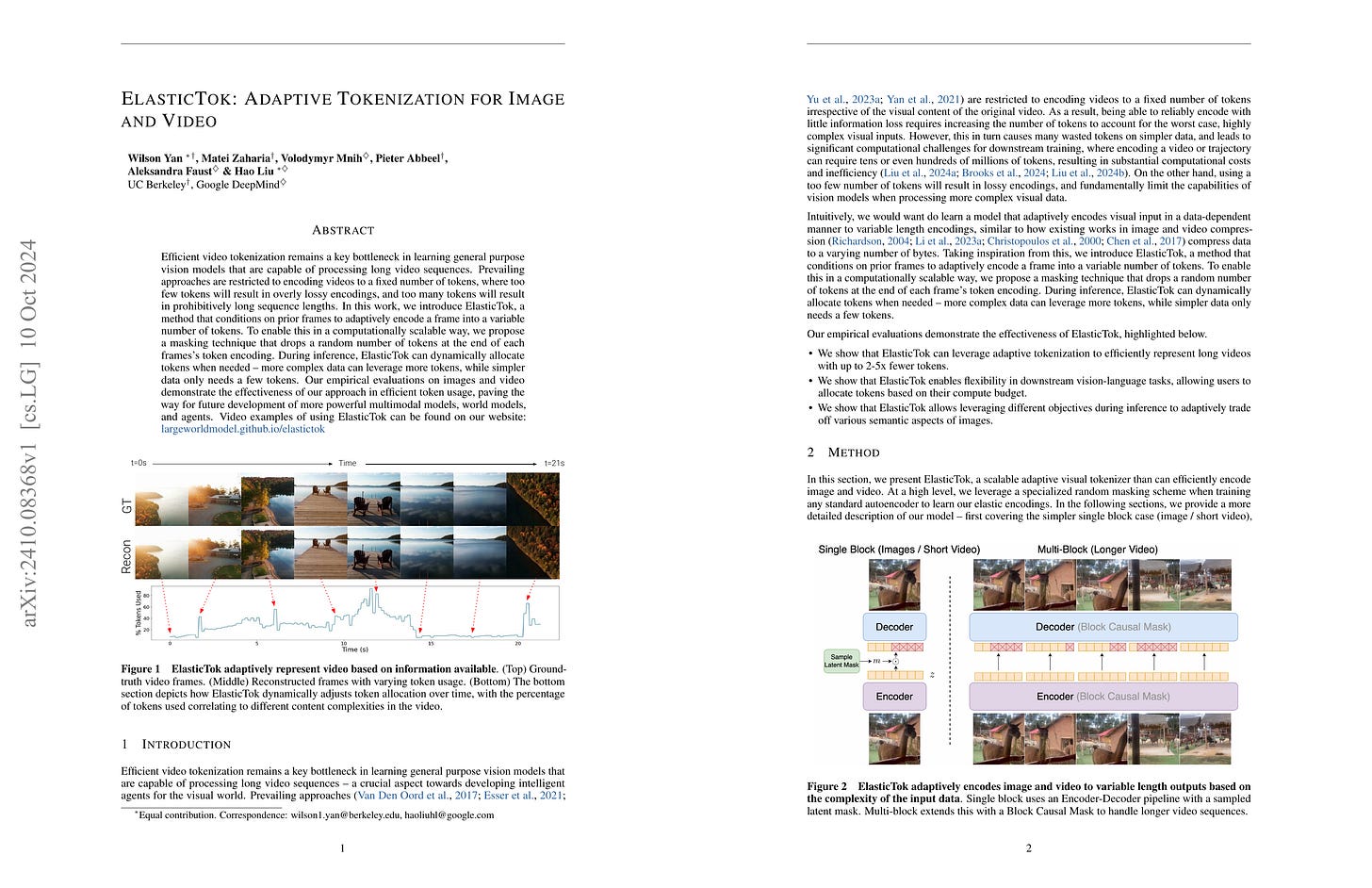

Efficient video tokenization remains a key bottleneck in learning general purpose vision models that are capable of processing long video sequences. Prevailing approaches are restricted to encoding videos to a fixed number of tokens, where too few tokens will result in overly lossy encodings, and too many tokens will result in prohibitively long sequence lengths. In this work, we introduce ElasticTok, a method that conditions on prior frames to adaptively encode a frame into a variable number of tokens. To enable this in a computationally scalable way, we propose a masking technique that drops a random number of tokens at the end of each frames's token encoding. During inference, ElasticTok can dynamically allocate tokens when needed -- more complex data can leverage more tokens, while simpler data only needs a few tokens. Our empirical evaluations on images and video demonstrate the effectiveness of our approach in efficient token usage, paving the way for future development of more powerful multimodal models, world models, and agents.

장면의 인코딩 난이도에 따라 사용하는 토큰 수를 바꾸는 접근. 랜덤한 숫자의 토큰만을 사용하도록 마스킹해서 학습한 다음 추론 시점에는 MSE 같은 지표를 일정 수준 이상 달성할 수 있는 최소의 토큰 수를 찾는 방법을 사용합니다. 재미있네요.

This approach adjusts the number of tokens used based on the encoding difficulty of the scene. During training, it masks a random number of tokens, and during inference, it uses a search to find the minimum number of tokens that can achieve a certain level of performance metrics like MSE. Interesting approach.

#vq

O1 Replication Journey: A Strategic Progress Report - Part 1

(Yiwei Qin, Xuefeng Li, Haoyang Zou, Yixiu Liu, Shijie Xia, Zhen Huang, Yixin Ye, Weizhe Yuan, Zhengzhong Liu, Yuanzhi Li, Pengfei Liu)

This paper introduces a pioneering approach to artificial intelligence research, embodied in our O1 Replication Journey. In response to the announcement of OpenAI’s groundbreaking O1 model, we embark on a transparent, real-time exploration to replicate its capabilities while reimagining the process of conducting and communicating AI research. Our methodology addresses critical challenges in modern AI research, including the insularity of prolonged team-based projects, delayed information sharing, and the lack of recognition for diverse contributions. By providing comprehensive, real-time documentation of our replication efforts, including both successes and failures, we aim to foster open science, accelerate collective advancement, and lay the groundwork for AI-driven scientific discovery. Our research progress report diverges significantly from traditional research papers, offering continuous updates, full process transparency, and active community engagement throughout the research journey. Technologically, we proposed the “journey learning” paradigm, which encourages models to learn not just shortcuts, but the complete exploration process, including trial and error, reflection, and backtracking. With only 327 training samples and without any additional tricks, journey learning outperformed conventional supervised learning by over 8% on the MATH dataset, demonstrating its extremely powerful potential. We believe this to be the most crucial component of O1 technology that we have successfully decoded. We share valuable resources including technical hypotheses and insights, cognitive exploration maps, custom-developed tools, etc at https://github.com/GAIR-NLP/O1-Journey

다들 o1을 어떻게 재현할 수 있을 것인가라는 문제에 뛰어들었군요. 여기서 생각한 방법은 Journey Learning이라고 이름 붙인, 정답으로 곧바로 이어지는 체인만이 아니라 오답이나 실수로 연결되는 체인들도 거쳐 정답으로 이어지는 체인을 통해 학습하는 방법이군요. Reward Model을 통해 추론 트리를 구성한 다음 이 트리에 대한 DFS로 체인을 만드는 방식입니다.

o1은 방법이 알려지지 않았기에 o1을 재현하자면 이 방법적인 측면에서 올바르게 접근해야 하고, 따라서 수많은 잠재적인 방법들 중 옳은 접근을 골라낼 좋은 판단력이 필요하겠죠.

It seems everyone has jumped into the problem of how to replicate O1. The method proposed here is named as journey learning, which involves learning not only through chains that lead directly to the correct answer but also through chains that pass through incorrect answers or mistakes before reaching the correct solution. The approach involves constructing a reasoning tree using a reward model and then creating chains through a DFS on this tree.

Since the methods behind O1 are not publicly known, replicating O1 requires taking the correct methodological approach. Consequently, good judgment is necessary to select the right approach from among numerous potential methods.

#reasoning #search