2024년 10월 10일

Everything Everywhere All at Once: LLMs can In-Context Learn Multiple Tasks in Superposition

(Zheyang Xiong, Ziyang Cai, John Cooper, Albert Ge, Vasilis Papageorgiou, Zack Sifakis, Angeliki Giannou, Ziqian Lin, Liu Yang, Saurabh Agarwal, Grigorios G Chrysos, Samet Oymak, Kangwook Lee, Dimitris Papailiopoulos)

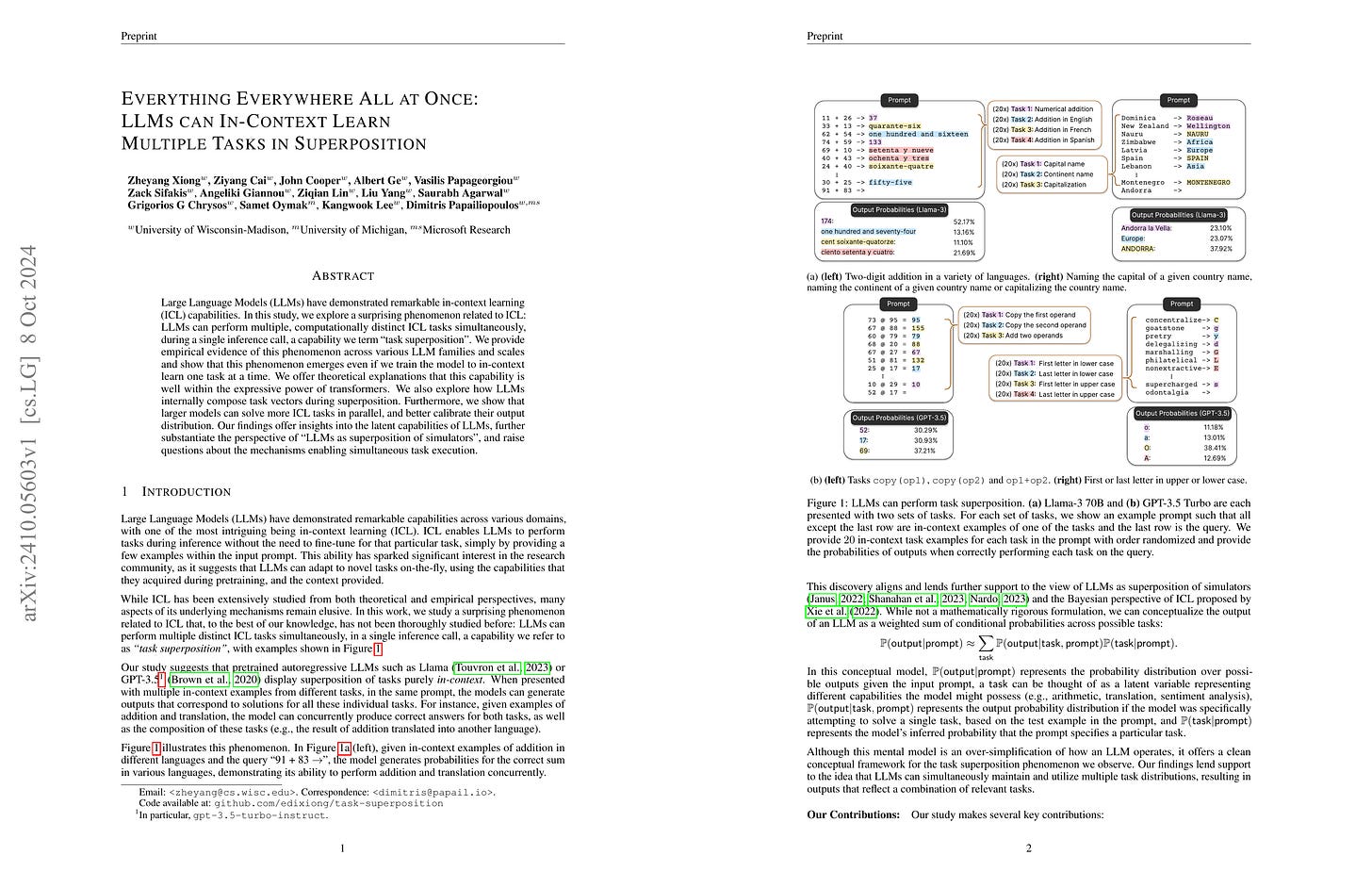

Large Language Models (LLMs) have demonstrated remarkable in-context learning (ICL) capabilities. In this study, we explore a surprising phenomenon related to ICL: LLMs can perform multiple, computationally distinct ICL tasks simultaneously, during a single inference call, a capability we term "task superposition". We provide empirical evidence of this phenomenon across various LLM families and scales and show that this phenomenon emerges even if we train the model to in-context learn one task at a time. We offer theoretical explanations that this capability is well within the expressive power of transformers. We also explore how LLMs internally compose task vectors during superposition. Furthermore, we show that larger models can solve more ICL tasks in parallel, and better calibrate their output distribution. Our findings offer insights into the latent capabilities of LLMs, further substantiate the perspective of "LLMs as superposition of simulators", and raise questions about the mechanisms enabling simultaneous task execution.

In-context Learning에서 여러 과제를 수행하도록 예제를 섞었을 때에도 그 과제들을 모두 반영할 수 있다는 결과. 심지어 하나의 과제만 수행하도록 학습한 경우에도 여러 과제에 대한 대응이 가능했다고 하네요.

This paper shows that models can handle multiple tasks simultaneously in in-context learning, even when examples from different tasks are mixed together. Surprisingly, the models were able to respond to multiple tasks even when they were trained to perform only one task at a time.

#in-context-learning

Pyramidal Flow Matching for Efficient Video Generative Modeling

(Yang Jin, Zhicheng Sun, Ningyuan Li, Kun Xu, Kun Xu, Hao Jiang, Nan Zhuang, Quzhe Huang, Yang Song, Yadong Mu, Zhouchen Lin)

Video generation requires modeling a vast spatiotemporal space, which demands significant computational resources and data usage. To reduce the complexity, the prevailing approaches employ a cascaded architecture to avoid direct training with full resolution. Despite reducing computational demands, the separate optimization of each sub-stage hinders knowledge sharing and sacrifices flexibility. This work introduces a unified pyramidal flow matching algorithm. It reinterprets the original denoising trajectory as a series of pyramid stages, where only the final stage operates at the full resolution, thereby enabling more efficient video generative modeling. Through our sophisticated design, the flows of different pyramid stages can be interlinked to maintain continuity. Moreover, we craft autoregressive video generation with a temporal pyramid to compress the full-resolution history. The entire framework can be optimized in an end-to-end manner and with a single unified Diffusion Transformer (DiT). Extensive experiments demonstrate that our method supports generating high-quality 5-second (up to 10-second) videos at 768p resolution and 24 FPS within 20.7k A100 GPU training hours. All code and models will be open-sourced at

https://pyramid-flow.github.io

.

비디오 생성 모델. 스케줄을 나눠서 대부분의 샘플링 과정을 저해상도에서 수행하게 하고, Autoregressive한 구조로 시간축에 대한 Conditioning을 하면서 과거일수록 저해상도의 정보를 사용하는 형태로 만들었군요.

Video generation model. They've divided the schedule so that most of the sampling process is performed at low resolution. They've also implemented an autoregressive structure that conditions on the time axis, using lower resolution information for frames further in the past.

#video-generation

TxT360: A Top-Quality LLM Pre-training Dataset Requires the Perfect Blend

(Liping Tang, Nikhil Ranjan, Omkar Pangarkar, Xuezhi Liang, Zhen Wang, Li An, Bhaskar Rao, Zhoujun Cheng, Suqi Sun, Cun Mu, Victor Miller, Yue Peng, Eric P. Xing, Zhengzhong Liu)

LLM 프리트레이닝을 위한 코퍼스인데 Common Crawl 뿐만 아니라 StackExchange 같은 데이터 소스도 전처리하고 Global Deduplication을 했네요.

Common Crawl의 경우에는 Trafilatura + 휴리스틱 필터링 기반입니다. 모델 기반 필터링은 사용하지 않았네요. 특이하게도 Duplication 횟수에 따라 Upsampling을 하는 방법을 사용했습니다. Duplicate의 수가 문서의 퀄리티에 대한 간접적인 지표가 될 수 있다는 직관입니다.

Corpus for LLM pre-training that not only uses Common Crawl but also preprocessed and globally deduplicated other data sources like StackExchange.

For Common Crawl, they used Trafilatura and heuristic filtering. Model-based filtering was not employed. Interestingly, they used an upsampling method based on the number of duplications. The intuition is that the number of duplicates can serve as an indirect indicator of document quality.

#corpus

Round and Round We Go! What makes Rotary Positional Encodings useful?

(Federico Barbero, Alex Vitvitskyi, Christos Perivolaropoulos, Razvan Pascanu, Petar Veličković)

Positional Encodings (PEs) are a critical component of Transformer-based Large Language Models (LLMs), providing the attention mechanism with important sequence-position information. One of the most popular types of encoding used today in LLMs are Rotary Positional Encodings (RoPE), that rotate the queries and keys based on their relative distance. A common belief is that RoPE is useful because it helps to decay token dependency as relative distance increases. In this work, we argue that this is unlikely to be the core reason. We study the internals of a trained Gemma 7B model to understand how RoPE is being used at a mechanical level. We find that Gemma learns to use RoPE to construct robust "positional" attention patterns by exploiting the highest frequencies. We also find that, in general, Gemma greatly prefers to use the lowest frequencies of RoPE, which we suspect are used to carry semantic information. We mathematically prove interesting behaviours of RoPE and conduct experiments to verify our findings, proposing a modification of RoPE that fixes some highlighted issues and improves performance. We believe that this work represents an interesting step in better understanding PEs in LLMs, which we believe holds crucial value for scaling LLMs to large sizes and context lengths.

RoPE에서 고주파수 차원들은 위치 기반 Attention에 사용되고 저주파수 차원들은 의미 기반 Attention에 사용된다는 발견. 저주파수 차원들이 토큰 위치에 따른 변화가 적기에 의미 기반 Attention에 사용되는 것일 텐데, 그렇다면 저주파수 차원이 없는 쪽이 더 나을 수도 있겠죠.

그래서 부분적으로만 RoPE를 적용하는 실험을 했습니다. 사실 RoPE에 본래 있었던 옵션이기도 하죠. Long Context에서의 특성은 어떨지도 궁금하네요.

The study found that in RoPE, high-frequency dimensions are used for position-based attention, while low-frequency dimensions are used for semantic-based attention. The low-frequency dimensions likely serve this purpose because they change less with token position. This raises the question of whether it might be better to eliminate the low-frequency dimensions altogether.

Consequently, the researchers conducted experiments applying RoPE only partially. In fact, this was originally an option in RoPE. I'm curious about how this would affect performance in long-context scenarios.

#positional-encoding

Scaling Laws Across Model Architectures: A Comparative Analysis of Dense and MoE Models in Large Language Models

(Siqi Wang, Zhengyu Chen, Bei Li, Keqing He, Min Zhang, Jingang Wang)

The scaling of large language models (LLMs) is a critical research area for the efficiency and effectiveness of model training and deployment. Our work investigates the transferability and discrepancies of scaling laws between Dense Models and Mixture of Experts (MoE) models. Through a combination of theoretical analysis and extensive experiments, including consistent loss scaling, optimal batch size and learning rate scaling, and resource allocation strategies scaling, our findings reveal that the power-law scaling framework also applies to MoE Models, indicating that the fundamental principles governing the scaling behavior of these models are preserved, even though the architecture differs. Additionally, MoE Models demonstrate superior generalization, resulting in lower testing losses with the same training compute budget compared to Dense Models. These findings indicate the scaling consistency and transfer generalization capabilities of MoE Models, providing new insights for optimizing MoE Model training and deployment strategies.

MoE에 대한 Scaling Law. 최적 모델 규모에 대해서는 MoE 모델이 Dense 모델보다 지수가 더 크네요. (더 큰 모델 규모를 선호.) 최적 LR과 배치 크기에 대한 Scaling Law에 대해서는 본문에서는 차이가 있다고 하는데 Loss 타겟에 대해서는 사실 꽤 비슷한 것 같습니다. 모델 규모에 대해서는 차이가 있는 듯 하지만 그래프만으로는 알기 어렵네요.

Scaling law for MoE. For optimal model scales, MoE models have a larger exponent compared to dense models (indicating a preference for larger model sizes). Regarding the scaling laws for optimal learning rate and batch size, while the paper suggests there are differences, I think they're quite similar with respect to specific loss targets. There might be differences in relation to model scale, but it's difficult to determine this from the plots alone.

#scaling-law #moe

Time Transfer: On Optimal Learning Rate and Batch Size In The Infinite Data Limit

(Oleg Filatov, Jan Ebert, Jiangtao Wang, Stefan Kesselheim)

One of the main challenges in optimal scaling of large language models (LLMs) is the prohibitive cost of hyperparameter tuning, particularly learning rate η and batch size B. While techniques like μP (Yang et al., 2022) provide scaling rules for optimal η transfer in the infinite model size limit, the optimal scaling behavior in the infinite data size limit (T → ∞) remains unknown. We fill in this gap by observing for the first time an interplay of three optimal η scaling regimes: η ∝ sqrt(T), η ∝ 1, and η ∝ 1/sqrt(T) with transitions controlled by B and its relation to the time-evolving critical batch size B_crit ∝ T. Furthermore, we show that the optimal batch size is positively correlated with B_crit: keeping it fixed becomes suboptimal over time even if learning rate is scaled optimally. Surprisingly, our results demonstrate that the observed optimal η and B dynamics are preserved with μP model scaling, challenging the conventional view of B_crit dependence solely on loss value. Complementing optimality, we examine the sensitivity of loss to changes in learning rate, where we find the sensitivity to decrease with T → ∞ and to remain constant with μP model scaling. We hope our results make the first step towards a unified picture of the joint optimal data and model scaling.

요즘 자주 이야기가 나오는 학습량 증가에 따른 최적 LR과 배치 크기의 변화. LR과 배치 크기의 상호작용이 있어서 해석이 복잡하긴 한데 일단 최적 Loss 타겟에서는 학습량에 따라 LR과 배치 크기가 단조 증가하는 것 같네요.

문제가 복잡해지긴 하지만 그래도 다행스러운 것은 학습량 증가에 따라 LR에 대한 민감도가 감소하는 것 같다는 것이네요. μP도 모델 크기 증가에 따른 영향을 제거하는데 성공했습니다.

This paper discusses the changes in optimal learning rate (LR) and batch size as training time increases, a topic that has been frequently discussed recently. The analysis is complex due to the interaction between LR and batch size, but it appears that for optimal loss targets, both LR and batch size increase monotonically with longer training times.

While this does make the problem more complex, there's a fortunate finding: the sensitivity to LR seems to decrease as training duration increases. Additionally, μP has successfully managed to eliminate the effects of increasing model size.

#hyperparameter #scaling-law

Deciphering Cross-Modal Alignment in Large Vision-Language Models with Modality Integration Rate

(Qidong Huang, Xiaoyi Dong, Pan Zhang, Yuhang Zang, Yuhang Cao, Jiaqi Wang, Dahua Lin, Weiming Zhang, Nenghai Yu)

We present the Modality Integration Rate (MIR), an effective, robust, and generalized metric to indicate the multi-modal pre-training quality of Large Vision Language Models (LVLMs). Large-scale pre-training plays a critical role in building capable LVLMs, while evaluating its training quality without the costly supervised fine-tuning stage is under-explored. Loss, perplexity, and in-context evaluation results are commonly used pre-training metrics for Large Language Models (LLMs), while we observed that these metrics are less indicative when aligning a well-trained LLM with a new modality. Due to the lack of proper metrics, the research of LVLMs in the critical pre-training stage is hindered greatly, including the training data choice, efficient module design, etc. In this paper, we propose evaluating the pre-training quality from the inter-modal distribution distance perspective and present MIR, the Modality Integration Rate, which is 1) \textbf{Effective} to represent the pre-training quality and show a positive relation with the benchmark performance after supervised fine-tuning. 2) \textbf{Robust} toward different training/evaluation data. 3) \textbf{Generalize} across training configurations and architecture choices. We conduct a series of pre-training experiments to explore the effectiveness of MIR and observe satisfactory results that MIR is indicative about training data selection, training strategy schedule, and model architecture design to get better pre-training results. We hope MIR could be a helpful metric for building capable LVLMs and inspire the following research about modality alignment in different areas. Our code is at: https://github.com/shikiw/Modality-Integration-Rate.

이미지 토큰과 텍스트 토큰의 분포의 유사도가 (FID) 모델의 성능과 연관성이 높다는 아이디어. 재미있네요. Loss와 비슷한 느낌이지만 Loss보다 좀 더 강인한 지표일 수 있다는 이야기를 합니다.

This paper presents an interesting idea: the similarity of distribution (FID) between image and text tokens is highly correlated with model performance. It's reminiscent of loss, but the authors argue that it could be a more robust indicator than loss.

#multimodal #metric

The Accuracy Paradox in RLHF: When Better Reward Models Don't Yield Better Language Models

(Yanjun Chen, Dawei Zhu, Yirong Sun, Xinghao Chen, Wei Zhang, Xiaoyu Shen)

Reinforcement Learning from Human Feedback significantly enhances Natural Language Processing by aligning language models with human expectations. A critical factor in this alignment is the strength of reward models used during training. This study explores whether stronger reward models invariably lead to better language models. In this paper, through experiments on relevance, factuality, and completeness tasks using the QA-FEEDBACK dataset and reward models based on Longformer, we uncover a surprising paradox: language models trained with moderately accurate reward models outperform those guided by highly accurate ones. This challenges the widely held belief that stronger reward models always lead to better language models, and opens up new avenues for future research into the key factors driving model performance and how to choose the most suitable reward models. Code and additional details are available at https://github.com/EIT-NLP/AccuracyParadox-RLHF.

정확도가 더 높은 Reward Model이 더 나은 RLHF 결과로 이어지지 않는다는 결과. Reward Model의 역할을 생각하면 정확도 이상으로 Reward Score의 거동과 특성이 중요하겠죠.

This study shows that more accurate reward models do not necessarily lead to better RLHF results. Considering the role of reward models, the behavior and characteristics of reward scores may be more important than mere accuracy.

#reward-model