January 31, 2024

SelectLLM: Can LLMs Select Important Instructions to Annotate?

(Ritik Sachin Parkar, Jaehyung Kim, Jong Inn Park, Dongyeop Kang)

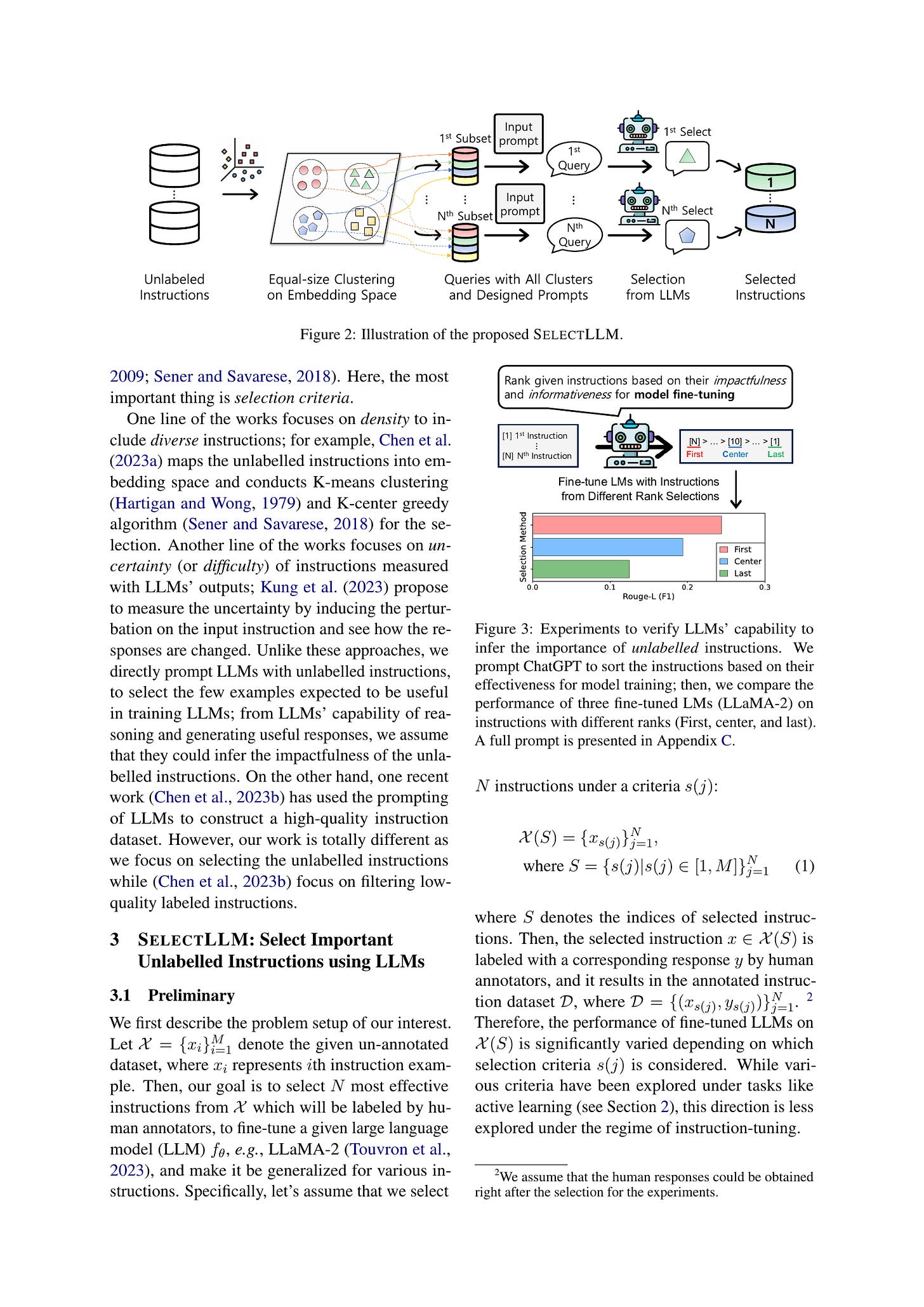

Training large language models (LLMs) with a large and diverse instruction dataset aligns the models to comprehend and follow human instructions. Recent works have shown that using a small set of high-quality instructions can outperform using large yet more noisy ones. Because instructions are unlabeled and their responses are natural text, traditional active learning schemes with the model's confidence cannot be directly applied to the selection of unlabeled instructions. In this work, we propose a novel method for instruction selection, called SelectLLM, that leverages LLMs for the selection of high-quality instructions. Our high-level idea is to use LLMs to estimate the usefulness and impactfulness of each instruction without the corresponding labels (i.e., responses), via prompting. SelectLLM involves two steps: dividing the unlabelled instructions using a clustering algorithm (e.g., CoreSet) to multiple clusters, and then prompting LLMs to choose high-quality instructions within each cluster. SelectLLM showed comparable or slightly better performance on the popular instruction benchmarks, compared to the recent state-of-the-art selection methods. All code and data are publicly available (https://github.com/minnesotanlp/select-llm).

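This is essentially an active learning setting: the problem is deciding which instructions, among those that do not yet have written responses, should be annotated. The end goal here is fine-tuning, and the authors ask an LLM which instructions would be worth annotating. More precisely, you could say they use an LLM to classify the quality of each instruction.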
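The two steps named in the abstract (cluster the unlabeled instructions, then prompt an LLM to pick within each cluster) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the greedy k-center clustering stands in for whichever clustering algorithm is actually used, the prompt wording is made up, and `ask_llm` is a hypothetical callable that is assumed to return 1-based indices of the chosen instructions.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_center_clusters(vectors: List[Vector], k: int) -> List[List[int]]:
    """Greedy k-center: pick k spread-out centers, then assign every
    point to its nearest center. Returns lists of point indices."""
    centers = [0]  # assumption: start from the first point
    while len(centers) < min(k, len(vectors)):
        farthest = max(
            range(len(vectors)),
            key=lambda i: min(sq_dist(vectors[i], vectors[c]) for c in centers),
        )
        centers.append(farthest)
    clusters: List[List[int]] = [[] for _ in centers]
    for i, v in enumerate(vectors):
        nearest = min(
            range(len(centers)), key=lambda j: sq_dist(v, vectors[centers[j]])
        )
        clusters[nearest].append(i)
    return clusters


def build_prompt(texts: List[str], n: int) -> str:
    """Hypothetical selection prompt; the real wording is not given here."""
    numbered = "\n".join(f"{i + 1}. {t}" for i, t in enumerate(texts))
    return (
        f"Pick the {n} instructions that would be most useful to annotate. "
        f"Answer with their numbers.\n{numbered}"
    )


def select_instructions(
    instructions: List[str],
    vectors: List[Vector],
    k: int,
    per_cluster: int,
    ask_llm: Callable[[str], List[int]],
) -> List[str]:
    """Cluster the instructions, then let the LLM choose within each cluster."""
    selected: List[str] = []
    for cluster in k_center_clusters(vectors, k):
        texts = [instructions[i] for i in cluster]
        picks = ask_llm(build_prompt(texts, per_cluster))
        for p in picks[:per_cluster]:
            if 1 <= p <= len(texts):  # ignore out-of-range answers
                selected.append(texts[p - 1])
    return selected
```

In practice `vectors` would come from a sentence-embedding model and `ask_llm` would wrap an API call plus answer parsing; both are left abstract here on purpose.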

#instruction-tuning

MouSi: Poly-Visual-Expert Vision-Language Models

(Xiaoran Fan, Tao Ji, Changhao Jiang, Shuo Li, Senjie Jin, Sirui Song, Junke Wang, Boyang Hong, Lu Chen, Guodong Zheng, Ming Zhang, Caishuang Huang, Rui Zheng, Zhiheng Xi, Yuhao Zhou, Shihan Dou, Junjie Ye, Hang Yan, Tao Gui, Qi Zhang, Xipeng Qiu, Xuanjing Huang, Zuxuan Wu, Yu-Gang Jiang)

Current large vision-language models (VLMs) often encounter challenges such as insufficient capabilities of a single visual component and excessively long visual tokens. These issues can limit the model's effectiveness in accurately interpreting complex visual information and over-lengthy contextual information. Addressing these challenges is crucial for enhancing the performance and applicability of VLMs. This paper proposes the use of an ensemble experts technique to synergize the capabilities of individual visual encoders, including those skilled in image-text matching, OCR, image segmentation, etc. This technique introduces a fusion network to unify the processing of outputs from different visual experts, while bridging the gap between image encoders and pre-trained LLMs. In addition, we explore different positional encoding schemes to alleviate the waste of positional encoding caused by lengthy image feature sequences, effectively addressing the issue of position overflow and length limitations. For instance, in our implementation, this technique significantly reduces the positional occupancy in models like SAM, from a substantial 4096 to a more efficient and manageable 64 or even down to 1. Experimental results demonstrate that VLMs with multiple experts exhibit consistently superior performance over isolated visual encoders and mark a significant performance boost as more experts are integrated. We have open-sourced the training code used in this report. All of these resources can be found on our project website.

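The idea is to use multiple vision encoders in a VLM. Setting aside the question of whether it is really necessary to go this far, the question of how to pretrain the vision encoder seems bound to keep coming up in any scenario where the vision encoder is pretrained and, in particular, frozen once it is attached.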

The differences don't look very large, but it's interesting that here too CLIP + DINO appears to be the best combination.
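As a side note on the positional-occupancy numbers in the abstract (4096 down to 64 or 1): one way to picture this is that several image tokens share the same position id. The sketch below only illustrates that idea; the function name and the choice of grouping consecutive tokens are assumptions, not the paper's actual scheme.

```python
from typing import List


def shared_position_ids(num_tokens: int, num_positions: int, start: int = 0) -> List[int]:
    """Assign position ids so that `num_tokens` image tokens occupy only
    `num_positions` distinct positions (consecutive tokens share an id)."""
    if num_positions < 1 or num_tokens % num_positions != 0:
        raise ValueError("num_tokens must be a positive multiple of num_positions")
    group = num_tokens // num_positions  # tokens that share one position id
    return [start + i // group for i in range(num_tokens)]
```

With 4096 tokens and 64 positions, each block of 64 tokens shares one id; with a single position, every image token gets the same id, so the image consumes just one slot of the LLM's position budget.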

#vision-language #contrastive-learning #self-supervision

Can Large Language Models be Trusted for Evaluation? Scalable Meta-Evaluation of LLMs as Evaluators via Agent Debate

(Steffi Chern, Ethan Chern, Graham Neubig, Pengfei Liu)

Despite the utility of Large Language Models (LLMs) across a wide range of tasks and scenarios, developing a method for reliably evaluating LLMs across varied contexts continues to be challenging. Modern evaluation approaches often use LLMs to assess responses generated by LLMs. However, the meta-evaluation conducted to assess the effectiveness of these LLMs as evaluators is typically constrained by the coverage of existing benchmarks or requires extensive human annotation. This underscores the urgency of methods for scalable meta-evaluation that can effectively, reliably, and efficiently evaluate the performance of LLMs as evaluators across diverse tasks and scenarios, particularly in potentially new, user-defined scenarios. To fill this gap, we propose ScaleEval, an agent-debate-assisted meta-evaluation framework that leverages the capabilities of multiple communicative LLM agents. This framework supports multi-round discussions to assist human annotators in discerning the most capable LLMs as evaluators, which significantly eases their workload in cases that used to require large-scale annotations during meta-evaluation. We release the code for our framework, which is publicly available at: https://github.com/GAIR-NLP/scaleeval.

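A method for judging whether model-based evaluation is well aligned with human evaluation; in other words, a way to replace human evaluation data with models. Multiple models each produce an evaluation, then each model looks at the others' evaluations and updates its own until they reach a consensus. When they fail to reach a consensus, humans annotate directly.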
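The debate-then-fallback loop described above might look roughly like this. It is a sketch under assumptions: each agent is modeled as a hypothetical callable `(item, own_previous_verdict, other_verdicts) -> verdict`, consensus is taken to mean a unanimous verdict, and `None` signals that the item should go to a human annotator. The actual ScaleEval protocol may differ in all of these details.

```python
from typing import Callable, List, Optional

# Hypothetical agent interface: (item, own previous verdict, others' verdicts) -> verdict
Agent = Callable[[str, Optional[str], List[str]], str]


def debate(agents: List[Agent], item: str, max_rounds: int = 3) -> Optional[str]:
    """Run a multi-round discussion; return the agreed verdict,
    or None if the agents never agree (escalate to a human)."""
    # Round 0: every agent judges independently.
    verdicts = [agent(item, None, []) for agent in agents]
    for _ in range(max_rounds):
        if len(set(verdicts)) == 1:
            return verdicts[0]
        # Each agent sees the others' verdicts and may revise its own.
        verdicts = [
            agent(item, verdicts[i], verdicts[:i] + verdicts[i + 1:])
            for i, agent in enumerate(agents)
        ]
    return verdicts[0] if len(set(verdicts)) == 1 else None


def always_a(item: str, own: Optional[str], others: List[str]) -> str:
    """Toy agent that never changes its mind."""
    return "A"


def always_b(item: str, own: Optional[str], others: List[str]) -> str:
    """Toy agent that never changes its mind."""
    return "B"


def follower(item: str, own: Optional[str], others: List[str]) -> str:
    """Toy agent that starts with "B", then adopts the most common other verdict."""
    if not others:
        return "B"
    return max(set(others), key=others.count)
```

The toy agents only exist to exercise the loop; real agents would wrap LLM calls that read the other agents' written rationales rather than bare labels.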

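Since the method itself relies on models, the authors ran a meta-meta-evaluation in which humans checked whether the method is well aligned. Conversely, it makes me wonder whether this method could itself be used as a better evaluation method.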

#evaluation