2024년 1월 26일

DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence

(Daya Guo, Qihao Zhu, Dejian Yang, Zhenda Xie, Kai Dong, Wentao Zhang, Guanting Chen, Xiao Bi, Y. Wu, Y.K. Li, Fuli Luo, Yingfei Xiong, Wenfeng Liang)

The rapid development of large language models has revolutionized code intelligence in software development. However, the predominance of closed-source models has restricted extensive research and development. To address this, we introduce the DeepSeek-Coder series, a range of open-source code models with sizes from 1.3B to 33B, trained from scratch on 2 trillion tokens. These models are pre-trained on a high-quality project-level code corpus and employ a fill-in-the-blank task with a 16K window to enhance code generation and infilling. Our extensive evaluations demonstrate that DeepSeek-Coder not only achieves state-of-the-art performance among open-source code models across multiple benchmarks but also surpasses existing closed-source models like Codex and GPT-3.5. Furthermore, DeepSeek-Coder models are under a permissive license that allows for both research and unrestricted commercial use.

DeepSeek Coder 리포트. 2T 학습한 모델이었군요.

DeepSeek Coder의 디테일에서 가장 흥미로운 부분은 코드 파일들 사이의 의존성을 고려했다는 것이 아닐까 싶습니다. 이 선택의 효과가 Cross File Code Completion에서 살짝 나오는데 약간의 향상으로 보입니다. 다만 해로울 이유는 없지 않을까 싶네요.

#code #llm #pretraining

Rethinking Patch Dependence for Masked Autoencoders

(Letian Fu, Long Lian, Renhao Wang, Baifeng Shi, Xudong Wang, Adam Yala, Trevor Darrell, Alexei A. Efros, Ken Goldberg)

In this work, we re-examine inter-patch dependencies in the decoding mechanism of masked autoencoders (MAE). We decompose this decoding mechanism for masked patch reconstruction in MAE into self-attention and cross-attention. Our investigations suggest that self-attention between mask patches is not essential for learning good representations. To this end, we propose a novel pretraining framework: Cross-Attention Masked Autoencoders (CrossMAE). CrossMAE's decoder leverages only cross-attention between masked and visible tokens, with no degradation in downstream performance. This design also enables decoding only a small subset of mask tokens, boosting efficiency. Furthermore, each decoder block can now leverage different encoder features, resulting in improved representation learning. CrossMAE matches MAE in performance with 2.5 to 3.7$\times$ less decoding compute. It also surpasses MAE on ImageNet classification and COCO instance segmentation under the same compute. Code and models:

https://crossmae.github.io

Masked Autoencoder에서 디코더를 이미지 패치와 마스크 토큰을 입력으로 받는 트랜스포머로 설정하는 대신 마스크 토큰과 이미지 패치 사이의 Cross Attention으로 바꾸고 Self Attention을 아예 빼버렸습니다. 따라서 마스크 토큰들은 서로 독립이 되고 그래서 일부만 디코딩하는 것도 가능해집니다. 추가로 여러 단계의 Feature를 선형 결합하는 디자인도 고려했네요.

전반적으로 성능을 동등하게 유지하면서 효율성을 높인다는 형태의 결과이긴 합니다. 제안하는 것처럼 비디오 같은 대상에 대해서 고려가 가능하겠네요.

#self-supervision #mlm

New embedding models and API updates

OpenAI의 API 업데이트. 일단 임베딩 모델의 성능을 개선하고 가격을 낮췄습니다. 추가적으로 임베딩 벡터의 차원을 줄일 수 있는 옵션이 생겼습니다.

GPT-3.5는 가격이 낮아졌고 GPT-4 Turbo는 새 버전이 나오면서 게으름 현상을 줄였다고 하네요. 게으름 현상의 정확한 원인은 알 수 없지만 OpenAI도 이런 현상들을 다 잡아내는 게 쉽지 않은 모양입니다.

https://leaderboard.withmartian.com/

API 비용과 관련해서는 현재 치열한 경쟁이 붙은 상황이죠. 오픈소스 모델들은 말 그대로 오픈소스이니 이윤이 없는 수준까지 가격이 낮아질 수밖에 없는 상황인 듯 싶습니다.

#news

Genie: Achieving Human Parity in Content-Grounded Datasets Generation

(Asaf Yehudai, Boaz Carmeli, Yosi Mass, Ofir Arviv, Nathaniel Mills, Assaf Toledo, Eyal Shnarch, Leshem Choshen)

The lack of high-quality data for content-grounded generation tasks has been identified as a major obstacle to advancing these tasks. To address this gap, we propose Genie, a novel method for automatically generating high-quality content-grounded data. It consists of three stages: (a) Content Preparation, (b) Generation: creating task-specific examples from the content (e.g., question-answer pairs or summaries). (c) Filtering mechanism aiming to ensure the quality and faithfulness of the generated data. We showcase this methodology by generating three large-scale synthetic data, making wishes, for Long-Form Question-Answering (LFQA), summarization, and information extraction. In a human evaluation, our generated data was found to be natural and of high quality. Furthermore, we compare models trained on our data with models trained on human-written data -- ELI5 and ASQA for LFQA and CNN-DailyMail for Summarization. We show that our models are on par with or outperforming models trained on human-generated data and consistently outperforming them in faithfulness. Finally, we applied our method to create LFQA data within the medical domain and compared a model trained on it with models trained on other domains.

컨텍스트에 대한 QA 혹은 요약 데이터 구축 방법. 위키피디아 페이지를 가져와서 LLM으로 데이터 샘플을 생성시키고 NLI나 Reward Model으로 필터링하는 방식으로 구축했습니다.

#synthetic-data

Deconstructing Denoising Diffusion Models for Self-Supervised Learning

(Xinlei Chen, Zhuang Liu, Saining Xie, Kaiming He)

In this study, we examine the representation learning abilities of Denoising Diffusion Models (DDM) that were originally purposed for image generation. Our philosophy is to deconstruct a DDM, gradually transforming it into a classical Denoising Autoencoder (DAE). This deconstructive procedure allows us to explore how various components of modern DDMs influence self-supervised representation learning. We observe that only a very few modern components are critical for learning good representations, while many others are nonessential. Our study ultimately arrives at an approach that is highly simplified and to a large extent resembles a classical DAE. We hope our study will rekindle interest in a family of classical methods within the realm of modern self-supervised learning.

Diffusion Model을 변형해 Denoising Auto Encoder와 비슷하게 맞추면서 Self Supervision에 특화시키기. 픽셀 단위의 노이즈는 충분하지 않은 듯 하나 VAE를 쓸 필요는 없어서 PCA로 축소한 다음 노이즈를 걸고 Inverse PCA로 복원한 이미지를 입력으로 쓰는 방식을 택했습니다. 그 입력에 대해 원본 이미지를 Reconstruction 하는 것을 학습 타겟으로 만들었네요. 생성을 위한 노이즈 스케쥴 등도 빼버렸습니다.

생성과 Self Supervision 사이의 갭에 대해 생각해보게 되네요. 이 갭을 줄이거나 없애는 것이 흥미로운 목표겠죠.

#diffusion #self-supervision

FP6-LLM: Efficiently Serving Large Language Models Through FP6-Centric Algorithm-System Co-Design

(Haojun Xia, Zhen Zheng, Xiaoxia Wu, Shiyang Chen, Zhewei Yao, Stephen Youn, Arash Bakhtiari, Michael Wyatt, Donglin Zhuang, Zhongzhu Zhou, Olatunji Ruwase, Yuxiong He, Shuaiwen Leon Song)

Six-bit quantization (FP6) can effectively reduce the size of large language models (LLMs) and preserve the model quality consistently across varied applications. However, existing systems do not provide Tensor Core support for FP6 quantization and struggle to achieve practical performance improvements during LLM inference. It is challenging to support FP6 quantization on GPUs due to (1) unfriendly memory access of model weights with irregular bit-width and (2) high runtime overhead of weight de-quantization. To address these problems, we propose TC-FPx, the first full-stack GPU kernel design scheme with unified Tensor Core support of float-point weights for various quantization bit-width. We integrate TC-FPx kernel into an existing inference system, providing new end-to-end support (called FP6-LLM) for quantized LLM inference, where better trade-offs between inference cost and model quality are achieved. Experiments show that FP6-LLM enables the inference of LLaMA-70b using only a single GPU, achieving 1.69x-2.65x higher normalized inference throughput than the FP16 baseline. The source code will be publicly available soon.

8 bit보다는 빨랐으면 좋겠는데 4 bit에서 성능을 유지하는 게 쉽지 않다. 그래서 선택한 6 bit Quantization에 대한 최적화된 커널이네요.

학습에서나 추론에서나 더 낮은 Precision을 통한 더 빠른 연산을 생각하지 않을 수 없는 것 같네요. 6 bit Precision은 재미있는 선택인 것 같습니다. 관련해서 Microscaling (https://arxiv.org/abs/2310.10537) 같은 형태도 흥미로운 방향일 듯 싶네요.

#quantization #efficiency

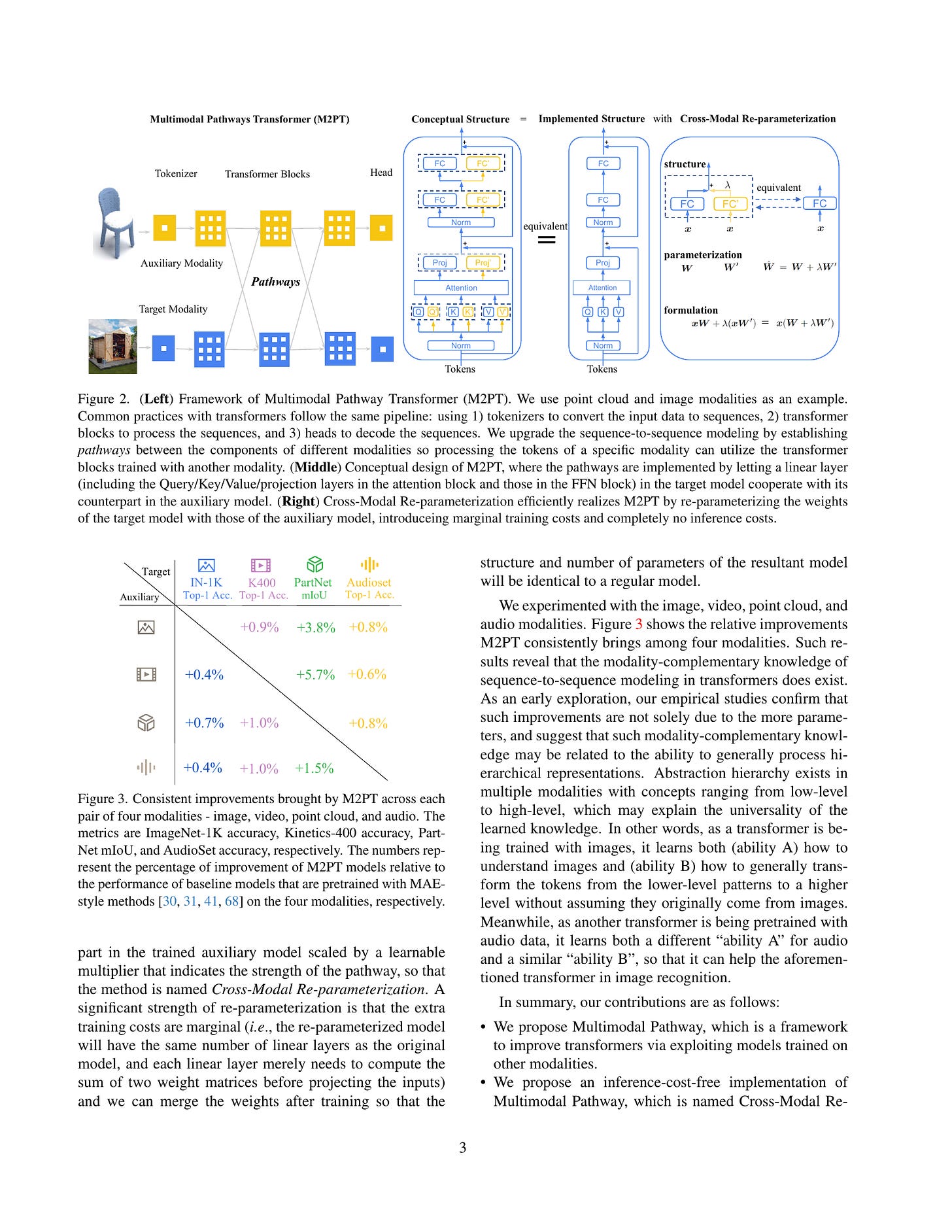

Multimodal Pathway: Improve Transformers with Irrelevant Data from Other Modalities

(Yiyuan Zhang, Xiaohan Ding, Kaixiong Gong, Yixiao Ge, Ying Shan, Xiangyu Yue)

We propose to improve transformers of a specific modality with irrelevant data from other modalities, e.g., improve an ImageNet model with audio or point cloud datasets. We would like to highlight that the data samples of the target modality are irrelevant to the other modalities, which distinguishes our method from other works utilizing paired (e.g., CLIP) or interleaved data of different modalities. We propose a methodology named Multimodal Pathway - given a target modality and a transformer designed for it, we use an auxiliary transformer trained with data of another modality and construct pathways to connect components of the two models so that data of the target modality can be processed by both models. In this way, we utilize the universal sequence-to-sequence modeling abilities of transformers obtained from two modalities. As a concrete implementation, we use a modality-specific tokenizer and task-specific head as usual but utilize the transformer blocks of the auxiliary model via a proposed method named Cross-Modal Re-parameterization, which exploits the auxiliary weights without any inference costs. On the image, point cloud, video, and audio recognition tasks, we observe significant and consistent performance improvements with irrelevant data from other modalities. The code and models are available at https://github.com/AILab-CVC/M2PT.

완전히 동떨어진 모달리티를 결합했을 때에도 상승 효과가 있을지에 대한 탐색. 텍스트에 대해 학습한 모델이 이미지 학습에도 도움이 된다고 하는 결과들과 연관지을 수 있겠네요.

서로 다른 모달리티에 대한 모델을 결합하는 방식이 특이한데...일단 각 모달리티에 대해 Self Supervision으로 학습한 모델들의 Weight를 선형 결합한 다음 과제에 대해 파인튜닝 하는 방법을 썼습니다.

최근 나온 모델 결합 시도들이 생각나네요. (https://arxiv.org/abs/2401.02412, https://arxiv.org/abs/2401.08525) 동일한 모델 하나에 모든 모달리티를 집어넣는 것이 이상적이지 않나 생각하지만 동시에 모델 결합이라는 시도도 생각해 볼 만하다 싶습니다.

#multimodal