2024년 1월 23일

WARM: On the Benefits of Weight Averaged Reward Models

(Alexandre Ramé, Nino Vieillard, Léonard Hussenot, Robert Dadashi, Geoffrey Cideron, Olivier Bachem, Johan Ferret)

Aligning large language models (LLMs) with human preferences through reinforcement learning (RLHF) can lead to reward hacking, where LLMs exploit failures in the reward model (RM) to achieve seemingly high rewards without meeting the underlying objectives. We identify two primary challenges when designing RMs to mitigate reward hacking: distribution shifts during the RL process and inconsistencies in human preferences. As a solution, we propose Weight Averaged Reward Models (WARM), first fine-tuning multiple RMs, then averaging them in the weight space. This strategy follows the observation that fine-tuned weights remain linearly mode connected when sharing the same pre-training. By averaging weights, WARM improves efficiency compared to the traditional ensembling of predictions, while improving reliability under distribution shifts and robustness to preference inconsistencies. Our experiments on summarization tasks, using best-of-N and RL methods, shows that WARM improves the overall quality and alignment of LLM predictions; for example, a policy RL fine-tuned with WARM has a 79.4% win rate against a policy RL fine-tuned with a single RM.

Reward Model 앙상블에 이어 Reward Model의 Weight Averaging이 따라나왔군요. Weight Averaging이 작동하면서 동시에 Diverse 하도록 데이터 순서와 하이퍼파라미터를 바꾸고 SFT 학습 과정의 서로 다른 시점에서 시작하는 방식을 시도했습니다. Validation 상황에서 앙상블보다 나은 성능을 보여주네요. 효율성까지 고려하면 반드시 참고해야할 방법으로 보입니다.

Weight Averaging도 꽤 오래된 방법인데 이런 곳에서 다시 등장하는군요.

#ensemble #reward-model #rlhf

Zero Bubble Pipeline Parallelism

(Penghui Qi, Xinyi Wan, Guangxing Huang, Min Lin)

Pipeline parallelism is one of the key components for large-scale distributed training, yet its efficiency suffers from pipeline bubbles which were deemed inevitable. In this work, we introduce a scheduling strategy that, to our knowledge, is the first to successfully achieve zero pipeline bubbles under synchronous training semantics. The key idea behind this improvement is to split the backward computation into two parts, one that computes gradient for the input and another that computes for the parameters. Based on this idea, we handcraft novel pipeline schedules that significantly outperform the baseline methods. We further develop an algorithm that automatically finds an optimal schedule based on specific model configuration and memory limit. Additionally, to truly achieve zero bubble, we introduce a novel technique to bypass synchronizations during the optimizer step. Experimental evaluations show that our method outperforms the 1F1B schedule up to 23% in throughput under a similar memory limit. This number can be further pushed to 31% when the memory constraint is relaxed. We believe our results mark a major step forward in harnessing the true potential of pipeline parallelism. We open sourced our implementation based on the popular Megatron-LM repository on https://github.com/sail-sg/zero-bubble-pipeline-parallelism.

Pipeline Parallel에서 버블을 없애는 방법. Weight에 대한 그래디언트 계산을 분리해서 위치를 바꾸고 Optimizer에서의 Synchronization(Gradient Norm, Overflow 체크)을 없애고 문제가 생기면 롤백하는 방식을 채택했습니다. 현실적인 시나리오에서 효과가 좋을지 궁금하긴 하네요.

#efficient_training

SpatialVLM: Endowing Vision-Language Models with Spatial Reasoning Capabilities

(Boyuan Chen, Zhuo Xu, Sean Kirmani, Brian Ichter, Danny Driess, Pete Florence, Dorsa Sadigh, Leonidas Guibas, Fei Xia)

Understanding and reasoning about spatial relationships is a fundamental capability for Visual Question Answering (VQA) and robotics. While Vision Language Models (VLM) have demonstrated remarkable performance in certain VQA benchmarks, they still lack capabilities in 3D spatial reasoning, such as recognizing quantitative relationships of physical objects like distances or size differences. We hypothesize that VLMs' limited spatial reasoning capability is due to the lack of 3D spatial knowledge in training data and aim to solve this problem by training VLMs with Internet-scale spatial reasoning data. To this end, we present a system to facilitate this approach. We first develop an automatic 3D spatial VQA data generation framework that scales up to 2 billion VQA examples on 10 million real-world images. We then investigate various factors in the training recipe, including data quality, training pipeline, and VLM architecture. Our work features the first internet-scale 3D spatial reasoning dataset in metric space. By training a VLM on such data, we significantly enhance its ability on both qualitative and quantitative spatial VQA. Finally, we demonstrate that this VLM unlocks novel downstream applications in chain-of-thought spatial reasoning and robotics due to its quantitative estimation capability. Project website:

https://spatial-vlm.github.io/

VLM의 공간적 추론 능력을 향상시키기. Segmentation 등으로 객체들에 대한 정보를 뽑고 Depth Estimation으로 3D 정보를 추출한 다음 이 둘을 결합해 템플릿으로 VQA 데이터를 구축해서 학습하는 방법입니다. Vision-Language 데이터의 Recaptioning/Synthetic Data라는 흐름에서 3D에 대한 정보를 주입하는 것이라고 볼 수도 있겠네요.

데이터셋에서 더 많은 정보를 추출하기 위한 방법으로서의 Synthetic Data라는 것도 흥미로운 방향이라고 봅니다.

#vision-language #dataset #3d

Linear Alignment: A Closed-form Solution for Aligning Human Preferences without Tuning and Feedback

(Songyang Gao, Qiming Ge, Wei Shen, Shihan Dou, Junjie Ye, Xiao Wang, Rui Zheng, Yicheng Zou, Zhi Chen, Hang Yan, Qi Zhang, Dahua Lin)

The success of AI assistants based on Language Models (LLMs) hinges on Reinforcement Learning from Human Feedback (RLHF) to comprehend and align with user intentions. However, traditional alignment algorithms, such as PPO, are hampered by complex annotation and training requirements. This reliance limits the applicability of RLHF and hinders the development of professional assistants tailored to diverse human preferences. In this work, we introduce \textit{Linear Alignment}, a novel algorithm that aligns language models with human preferences in one single inference step, eliminating the reliance on data annotation and model training. Linear alignment incorporates a new parameterization for policy optimization under divergence constraints, which enables the extraction of optimal policy in a closed-form manner and facilitates the direct estimation of the aligned response. Extensive experiments on both general and personalized preference datasets demonstrate that linear alignment significantly enhances the performance and efficiency of LLM alignment across diverse scenarios. Our code and dataset will be published on \url{https://github.com/Wizardcoast/Linear_Alignment.git}.

최적 Policy에서의 Action의 분포를 근사해서 Q에 대한 그래디언트로 표현하고, Q의 그래디언트를 Preference Principle을 준 모델과 아닌 모델의 차이로 표현해서 모델 학습 없이 토큰 분포에 대한 제어로 Alignment를 시도. 재미있네요.

#rlhf #alignment

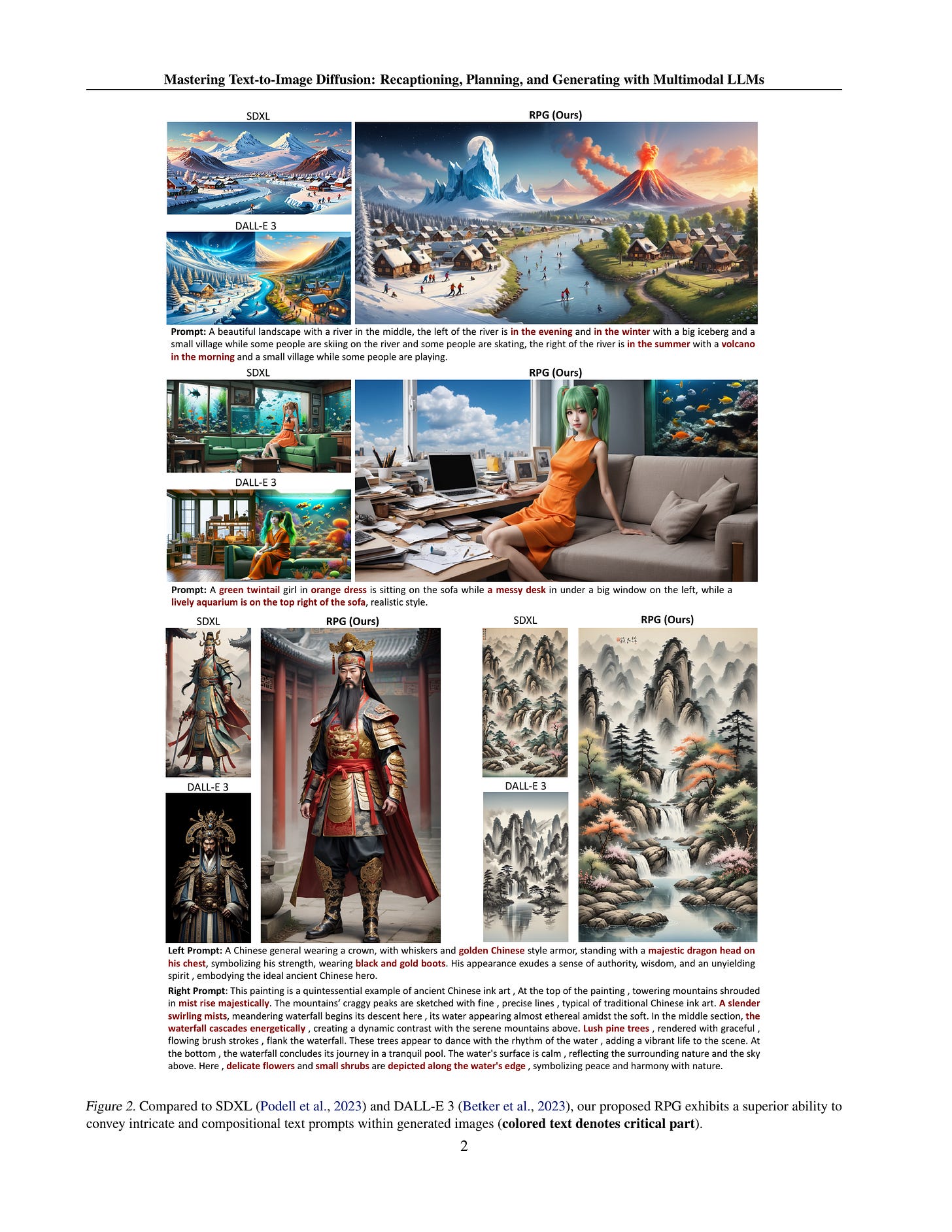

Mastering Text-to-Image Diffusion: Recaptioning, Planning, and Generating with Multimodal LLMs

(Ling Yang, Zhaochen Yu, Chenlin Meng, Minkai Xu, Stefano Ermon, Bin Cui)

Diffusion models have exhibit exceptional performance in text-to-image generation and editing. However, existing methods often face challenges when handling complex text prompts that involve multiple objects with multiple attributes and relationships. In this paper, we propose a brand new training-free text-to-image generation/editing framework, namely Recaption, Plan and Generate (RPG), harnessing the powerful chain-of-thought reasoning ability of multimodal LLMs to enhance the compositionality of text-to-image diffusion models. Our approach employs the MLLM as a global planner to decompose the process of generating complex images into multiple simpler generation tasks within subregions. We propose complementary regional diffusion to enable region-wise compositional generation. Furthermore, we integrate text-guided image generation and editing within the proposed RPG in a closed-loop fashion, thereby enhancing generalization ability. Extensive experiments demonstrate our RPG outperforms state-of-the-art text-to-image diffusion models, including DALL-E 3 and SDXL, particularly in multi-category object composition and text-image semantic alignment. Notably, our RPG framework exhibits wide compatibility with various MLLM architectures (e.g., MiniGPT-4) and diffusion backbones (e.g., ControlNet). Our code is available at: https://github.com/YangLing0818/RPG-DiffusionMaster

Compositional한 생성 과제를 Multimodal LM과의 조합으로 풀었네요. 일단 유저 입력을 정리한 다음 Recaptioning해서 상세하게 만들고, CoT를 사용해서 상세해진 Caption을 영역별로 부여합니다. 그리고 Diffusion 모델로 영역 기반의 Compositional Generation을 수행하네요. 이 과정에서 마스크를 만들어서 이미지 편집까지 가능하게 하면 이미지를 생성하고 편집해나가는 루프를 돌 수 있다...는 설계네요.

#vision-language #text2img #image_editing

With Greater Text Comes Greater Necessity: Inference-Time Training Helps Long Text Generation

(Y. Wang, D. Ma, D. Cai)

Long text generation, such as novel writing or discourse-level translation with extremely long contexts, presents significant challenges to current language models. Existing methods mainly focus on extending the model's context window through strategies like length extrapolation. However, these approaches demand substantial hardware resources during the training and/or inference phases. Our proposed method, Temp-Lora, introduces an alternative concept. Instead of relying on the KV cache to store all context information, Temp-Lora embeds this information directly into the model's parameters. In the process of long text generation, we use a temporary Lora module, progressively trained with text generated previously. This approach not only efficiently preserves contextual knowledge but also prevents any permanent alteration to the model's parameters given that the module is discarded post-generation. Extensive experiments on the PG19 language modeling benchmark and the GuoFeng discourse-level translation benchmark validate the effectiveness of Temp-Lora. Our results show that: 1) Temp-Lora substantially enhances generation quality for long texts, as indicated by a 13.2% decrease in perplexity on a subset of PG19, and a 29.6% decrease in perplexity along with a 53.2% increase in BLEU score on GuoFeng, 2) Temp-Lora is compatible with and enhances most existing long text generation methods, and 3) Temp-Lora can greatly reduce computational costs by shortening the context window. While ensuring a slight improvement in generation quality (a decrease of 3.8% in PPL), it enables a reduction of 70.5% in the FLOPs required for inference and a 51.5% decrease in latency.

Dynamic Evaluation에 대해 생각해보면서 모델 자체에 컨텍스트를 입력하는 방법에 대해 생각해봤었는데 그런 시도가 나왔네요. 생성해나가면서 생성 결과를 사용해 LoRA를 업데이트하는 방식입니다. 평가 세팅을 정확하게 이해하기 어렵긴 한데 코드를 기다려봐야 할 것 같네요.

#long-context #continual-learning

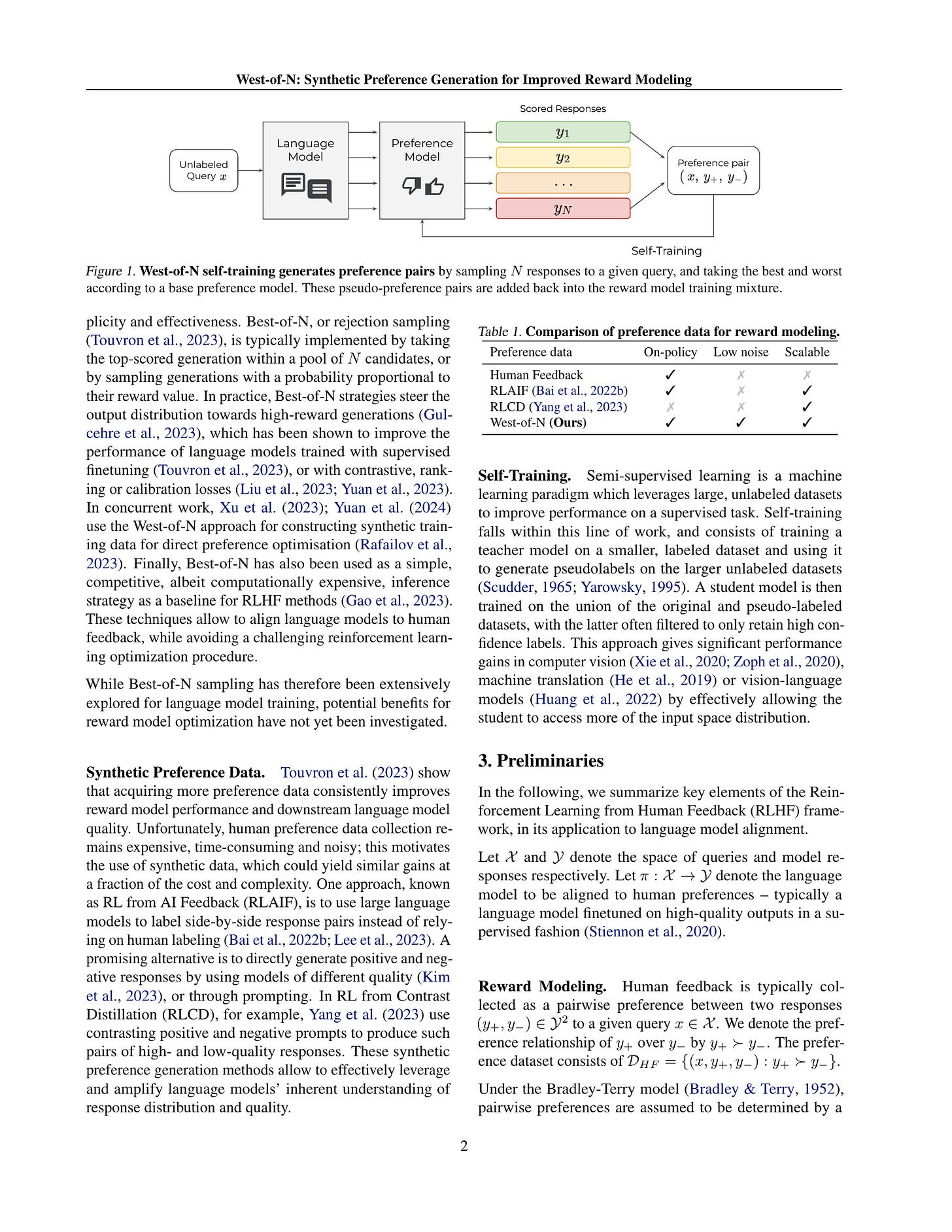

West-of-N: Synthetic Preference Generation for Improved Reward Modeling

(Alizée Pace, Jonathan Mallinson, Eric Malmi, Sebastian Krause, Aliaksei Severyn)

The success of reinforcement learning from human feedback (RLHF) in language model alignment is strongly dependent on the quality of the underlying reward model. In this paper, we present a novel approach to improve reward model quality by generating synthetic preference data, thereby augmenting the training dataset with on-policy, high-quality preference pairs. Motivated by the promising results of Best-of-N sampling strategies in language model training, we extend their application to reward model training. This results in a self-training strategy to generate preference pairs by selecting the best and worst candidates in a pool of responses to a given query. Empirically, we find that this approach improves the performance of any reward model, with an effect comparable to the addition of a similar quantity of human preference data. This work opens up new avenues of research for improving RLHF for language model alignment, by offering synthetic preference generation as a solution to reward modeling challenges.

West = Best + Worst입니다. 얼마 전에 나온 Self-Rewarding Language Models (https://arxiv.org/abs/2401.10020) 와 비슷하게 약간의 Preference Pair로 모델을 학습해서 Reward Model로의 기능을 하게 한 다음 모델 샘플을 이 Reward Model로 분류해서 데이터셋을 구축하는 방법입니다.

여기서의 차이는 두 개 샘플만 뽑는 대신 샘플을 여러 개 뽑아서 Preference의 정도가 가장 다른 페어를 학습에 쓴다는 것이겠네요. 모델의 성능에 따른 노이즈를 줄이기 위함입니다.

샘플을 여러 개 뽑고 그 안에서 비교한다는 점에서는 RSO (https://arxiv.org/abs/2309.06657) 가 떠오르기도 하고 그렇네요. 물론 여러 이터레이션을 도는 것도 가능합니다. 이런 방법은 LM이 강해질수록 더 효과적일 것이라 아주 강력한 수준의 모델에서 어디까지 가능할지 궁금해지네요.

#reward-model #rlhf #alignment

Spotting LLMs With Binoculars: Zero-Shot Detection of Machine-Generated Text

(Abhimanyu Hans, Avi Schwarzschild, Valeriia Cherepanova, Hamid Kazemi, Aniruddha Saha, Micah Goldblum, Jonas Geiping, Tom Goldstein)

Detecting text generated by modern large language models is thought to be hard, as both LLMs and humans can exhibit a wide range of complex behaviors. However, we find that a score based on contrasting two closely related language models is highly accurate at separating human-generated and machine-generated text. Based on this mechanism, we propose a novel LLM detector that only requires simple calculations using a pair of pre-trained LLMs. The method, called Binoculars, achieves state-of-the-art accuracy without any training data. It is capable of spotting machine text from a range of modern LLMs without any model-specific modifications. We comprehensively evaluate Binoculars on a number of text sources and in varied situations. Over a wide range of document types, Binoculars detects over 90% of generated samples from ChatGPT (and other LLMs) at a false positive rate of 0.01%, despite not being trained on any ChatGPT data.

LLM이 생성한 텍스트 검출. LLM이 생성한 텍스트는 LLM의 Perplexity가 낮을 것이기에 Perplexity를 쓸 수 있겠지만, 프롬프트가 없으면 LLM에게도 Perplexity가 높을 수 있습니다. 예를 들어 카피바라와 천체물리학자라는 주제로 생성한 텍스트는 프롬프트 없이 보면 Perplexity가 높겠죠.

그래서 모델 두 개를 준비해서 모델 사이의 Cross Entropy로 Normalize를 해줍니다. 모델 두 개 사이의 차이보다 사람의 텍스트의 차이가 클 것이라는 가정이죠. 표절 검출 같이 사용하기에는 1%의 에러율도 조심스럽지만 검출할 수 있다는 것 자체는 여러모로 유용할 수 있을 것 같네요.

#llm

CMMMU: A Chinese Massive Multi-discipline Multimodal Understanding Benchmark

(Ge Zhang, Xinrun Du, Bei Chen, Yiming Liang, Tongxu Luo, Tianyu Zheng, Kang Zhu, Yuyang Cheng, Chunpu Xu, Shuyue Guo, Haoran Zhang, Xingwei Qu, Junjie Wang, Ruibin Yuan, Yizhi Li, Zekun Wang, Yudong Liu, Yu-Hsuan Tsai, Fengji Zhang, Chenghua Lin, Wenhao Huang, Wenhu Chen, Jie Fu)

As the capabilities of large multimodal models (LMMs) continue to advance, evaluating the performance of LMMs emerges as an increasing need. Additionally, there is an even larger gap in evaluating the advanced knowledge and reasoning abilities of LMMs in non-English contexts such as Chinese. We introduce CMMMU, a new Chinese Massive Multi-discipline Multimodal Understanding benchmark designed to evaluate LMMs on tasks demanding college-level subject knowledge and deliberate reasoning in a Chinese context. CMMMU is inspired by and strictly follows the annotation and analysis pattern of MMMU. CMMMU includes 12k manually collected multimodal questions from college exams, quizzes, and textbooks, covering six core disciplines: Art & Design, Business, Science, Health & Medicine, Humanities & Social Science, and Tech & Engineering, like its companion, MMMU. These questions span 30 subjects and comprise 39 highly heterogeneous image types, such as charts, diagrams, maps, tables, music sheets, and chemical structures. CMMMU focuses on complex perception and reasoning with domain-specific knowledge in the Chinese context. We evaluate 11 open-source LLMs and one proprietary GPT-4V(ision). Even GPT-4V only achieves accuracies of 42%, indicating a large space for improvement. CMMMU will boost the community to build the next-generation LMMs towards expert artificial intelligence and promote the democratization of LMMs by providing diverse language contexts.

중국의 Multimodal 벤치마크군요. Yi-34B의 Vision Language 모델 공개와 같이 공개됐네요. (https://huggingface.co/01-ai/Yi-VL-34B) 중국 쪽에서 LLM이 쏟아져 나오고 있는데 마찬가지로 VLM도 쏟아질 듯합니다.

#vision-language #benchmark