January 19, 2024

https://arxiv.org/abs/2401.10020

Self-Rewarding Language Models (Weizhe Yuan, Richard Yuanzhe Pang, Kyunghyun Cho, Sainbayar Sukhbaatar, Jing Xu, Jason Weston)

We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal. Current approaches commonly train reward models from human preferences, which may then be bottlenecked by human performance level, and secondly these separate frozen reward models cannot then learn to improve during LLM training. In this work, we study Self-Rewarding Language Models, where the language model itself is used via LLM-as-a-Judge prompting to provide its own rewards during training. We show that during Iterative DPO training that not only does instruction following ability improve, but also the ability to provide high-quality rewards to itself. Fine-tuning Llama 2 70B on three iterations of our approach yields a model that outperforms many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613. While only a preliminary study, this work opens the door to the possibility of models that can continually improve in both axes.

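First, the model is trained with SFT on ordinary SFT data plus data meant to instill evaluation ability. The model then samples prompts and responses to them, and the model itself evaluates those responses. The evaluation results are used for DPO-style training. The whole process is then repeated with the tuned model.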
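The sample, self-judge, and pair-up step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: `generate` and `judge` are hypothetical callables standing in for the same model used as generator and as LLM-as-a-Judge, and taking the highest- and lowest-scored responses as the chosen/rejected pair (skipping ties) is an assumption of this sketch.

```python
from typing import Callable, Dict, List, Optional, Tuple


def build_preference_pair(
    prompt: str,
    generate: Callable[[str], str],       # hypothetical: samples one response from the model
    judge: Callable[[str, str], float],   # hypothetical: the same model scoring (prompt, response)
    num_samples: int = 4,
) -> Optional[Dict[str, str]]:
    """Sample responses, score them with the model's own judge, return a DPO-style pair."""
    responses: List[str] = [generate(prompt) for _ in range(num_samples)]
    scored: List[Tuple[float, str]] = [(judge(prompt, r), r) for r in responses]

    best_score, best = max(scored, key=lambda item: item[0])
    worst_score, worst = min(scored, key=lambda item: item[0])

    # Assumption: a tie carries no preference signal, so the prompt is skipped.
    if best_score == worst_score:
        return None
    return {"prompt": prompt, "chosen": best, "rejected": worst}
```

Pairs collected this way over many prompts would form the preference dataset for one DPO round, and the resulting model would supply both `generate` and `judge` for the next round.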

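The results show that model performance improves with each iteration. The key point is that not only the policy but also the reward model improves over the course of tuning. It is a simple idea that still gives you a lot to think about.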

#alignment #feedback

https://arxiv.org/abs/2401.10208

MM-Interleaved: Interleaved Image-Text Generative Modeling via Multi-modal Feature Synchronizer (Changyao Tian, Xizhou Zhu, Yuwen Xiong, Weiyun Wang, Zhe Chen, Wenhai Wang, Yuntao Chen, Lewei Lu, Tong Lu, Jie Zhou, Hongsheng Li, Yu Qiao, Jifeng Dai)

Developing generative models for interleaved image-text data has both research and practical value. It requires models to understand the interleaved sequences and subsequently generate images and text. However, existing attempts are limited by the issue that the fixed number of visual tokens cannot efficiently capture image details, which is particularly problematic in the multi-image scenarios. To address this, this paper presents MM-Interleaved, an end-to-end generative model for interleaved image-text data. It introduces a multi-scale and multi-image feature synchronizer module, allowing direct access to fine-grained image features in the previous context during the generation process. MM-Interleaved is end-to-end pre-trained on both paired and interleaved image-text corpora. It is further enhanced through a supervised fine-tuning phase, wherein the model improves its ability to follow complex multi-modal instructions. Experiments demonstrate the versatility of MM-Interleaved in recognizing visual details following multi-modal instructions and generating consistent images following both textual and visual conditions. Code and models are available at https://github.com/OpenGVLab/MM-Interleaved.

An interleaved vision-language model plus image generation. It uses multi-scale features to compensate for the information loss that comes from using a Resampler. The multi-scale features are fused with Deformable Attention. Come to think of it, Deformable Attention was originally introduced as a way to do multi-scale cross attention efficiently. With vision people on board, you can smell the flavor of earlier vision approaches.

In addition, Google has recently been connecting intermediate layers when combining models (https://arxiv.org/abs/2401.02412, https://arxiv.org/abs/2401.08525), and it seems worth thinking about this work in relation to that.

#vision-language

https://arxiv.org/abs/2401.10225

ChatQA: Building GPT-4 Level Conversational QA Models (Zihan Liu, Wei Ping, Rajarshi Roy, Peng Xu, Mohammad Shoeybi, Bryan Catanzaro)

In this work, we introduce ChatQA, a family of conversational question answering (QA) models, that obtain GPT-4 level accuracies. Specifically, we propose a two-stage instruction tuning method that can significantly improve the zero-shot conversational QA results from large language models (LLMs). To handle retrieval in conversational QA, we fine-tune a dense retriever on a multi-turn QA dataset, which provides comparable results to using the state-of-the-art query rewriting model while largely reducing deployment cost. Notably, our ChatQA-70B can outperform GPT-4 in terms of average score on 10 conversational QA datasets (54.14 vs. 53.90), without relying on any synthetic data from OpenAI GPT models.

NVIDIA's retrieval-based QA model. NVIDIA seems quite fond of retrieval. After SFT, the model is tuned again on QA data that uses documents as context. On top of that, they fine-tuned the retriever so that it can fetch appropriate documents in multi-turn settings.
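To make the multi-turn retrieval point concrete: one simple way to give a dense retriever the conversational context is to feed it the recent dialogue turns together with the current question as a single query string. The sketch below only illustrates that idea. The function name, the `role: text` format, and the `max_turns` cutoff are assumptions of this example, not details taken from the paper.

```python
from typing import List, Tuple


def build_retrieval_query(
    history: List[Tuple[str, str]],  # (role, text) pairs, oldest first
    question: str,
    max_turns: int = 3,              # hypothetical cutoff on how much history to keep
) -> str:
    """Concatenate recent dialogue turns and the current question into one query."""
    # Guard max_turns == 0 explicitly, since history[-0:] would return the full list.
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = [f"{role}: {text}" for role, text in recent]
    lines.append(f"user: {question}")
    return "\n".join(lines)
```

A retriever fine-tuned on queries of this shape can resolve follow-ups such as "What about its price?" without a separate query-rewriting model.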

#qa

https://arxiv.org/abs/2401.09865

Improving fine-grained understanding in image-text pre-training (Ioana Bica, Anastasija Ilić, Matthias Bauer, Goker Erdogan, Matko Bošnjak, Christos Kaplanis, Alexey A. Gritsenko, Matthias Minderer, Charles Blundell, Razvan Pascanu, Jovana Mitrović)

We introduce SPARse Fine-grained Contrastive Alignment (SPARC), a simple method for pretraining more fine-grained multimodal representations from image-text pairs. Given that multiple image patches often correspond to single words, we propose to learn a grouping of image patches for every token in the caption. To achieve this, we use a sparse similarity metric between image patches and language tokens and compute for each token a language-grouped vision embedding as the weighted average of patches. The token and language-grouped vision embeddings are then contrasted through a fine-grained sequence-wise loss that only depends on individual samples and does not require other batch samples as negatives. This enables more detailed information to be learned in a computationally inexpensive manner. SPARC combines this fine-grained loss with a contrastive loss between global image and text embeddings to learn representations that simultaneously encode global and local information. We thoroughly evaluate our proposed method and show improved performance over competing approaches both on image-level tasks relying on coarse-grained information, e.g. classification, as well as region-level tasks relying on fine-grained information, e.g. retrieval, object detection, and segmentation. Moreover, SPARC improves model faithfulness and captioning in foundational vision-language models.

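An attempt to add patch-token-level contrast on top of image-text-level contrast. A threshold is applied to the inner products between patch and token embeddings to make the weights sparse, those weights are used to take a weighted average of the patch embeddings, and a contrastive loss is applied between that average and the text token. Put simply, it is a method for finding the group of image patches that corresponds to each token.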
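A rough sketch of the patch-token step for a single image-caption pair, written with plain Python lists for readability. Several details here are assumptions of this sketch rather than confirmed specifics of SPARC: the min-max normalization of similarities, the `1 / num_patches` threshold, the cosine-similarity logits, the `temperature` value, and computing the loss in only one direction (token to grouped embedding).

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def grouped_vision_embedding(token: Vector, patches: List[Vector]) -> Vector:
    """Sparse, similarity-weighted average of patch embeddings for one token."""
    sims = [dot(token, p) for p in patches]
    lo, hi = min(sims), max(sims)
    span = hi - lo
    # Min-max normalize to [0, 1]; if all similarities are equal, weight patches equally.
    norm = [(s - lo) / span for s in sims] if span > 0 else [1.0] * len(sims)
    threshold = 1.0 / len(patches)  # assumed sparsity threshold
    sparse = [w if w >= threshold else 0.0 for w in norm]
    total = sum(sparse)  # always > 0, because the best patch has weight 1.0
    weights = [w / total for w in sparse]
    dim = len(patches[0])
    return [sum(w * p[d] for w, p in zip(weights, patches)) for d in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    na, nb = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    return dot(a, b) / (na * nb) if na > 0 and nb > 0 else 0.0


def fine_grained_loss(tokens: List[Vector], patches: List[Vector],
                      temperature: float = 0.1) -> float:
    """Per-sample contrastive loss: each token should match its own grouped embedding.

    Negatives are the grouped embeddings of the other tokens in the same
    caption, so no other batch samples are needed.
    """
    grouped = [grouped_vision_embedding(t, patches) for t in tokens]
    loss = 0.0
    for i, t in enumerate(tokens):
        logits = [cosine(t, g) / temperature for g in grouped]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        loss += log_z - logits[i]  # cross-entropy with target index i
    return loss / len(tokens)
```

In training, this term would be added to the usual global image-text contrastive loss.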

#contrastive_learning #vision-language

https://arxiv.org/abs/2401.09985

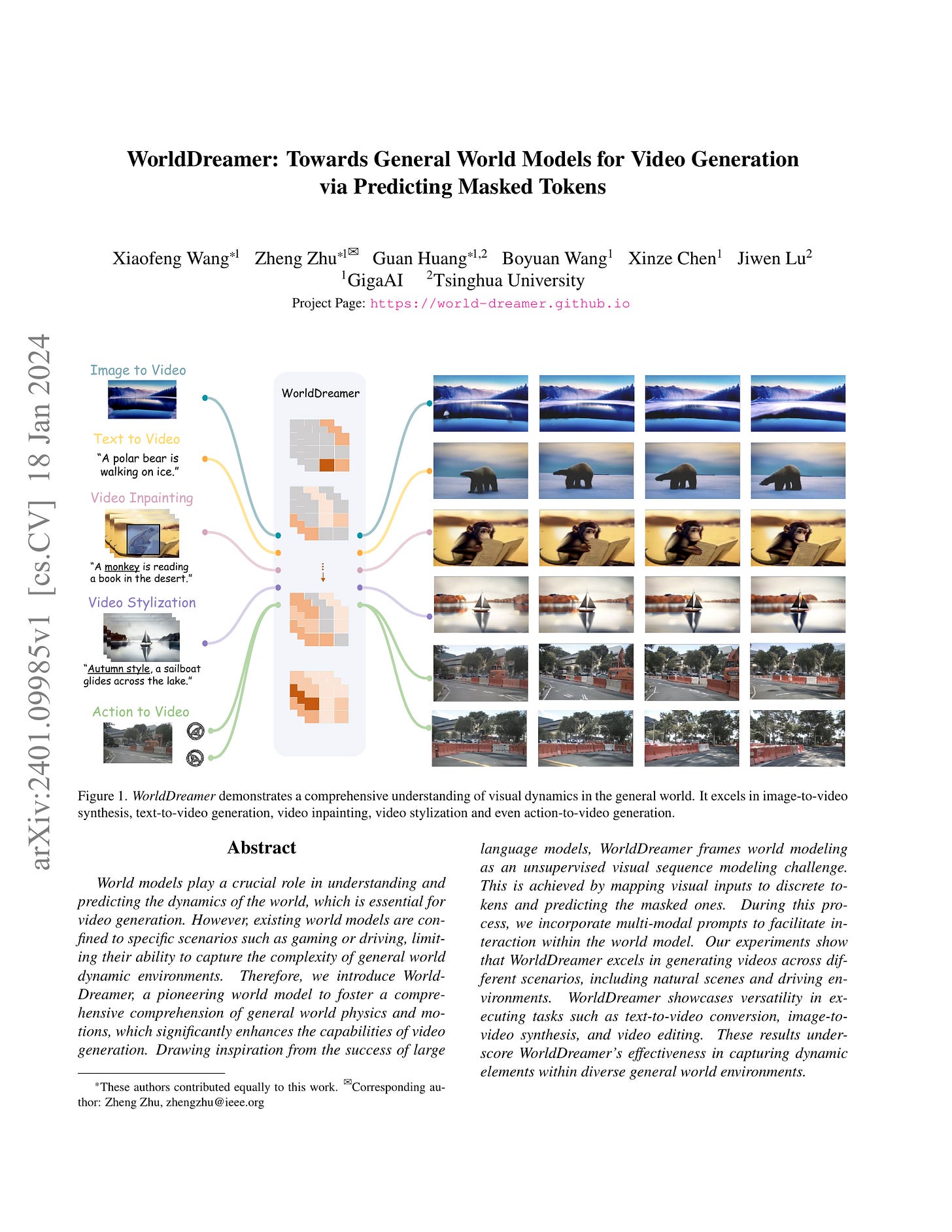

WorldDreamer: Towards General World Models for Video Generation via Predicting Masked Tokens (Xiaofeng Wang, Zheng Zhu, Guan Huang, Boyuan Wang, Xinze Chen, Jiwen Lu)

World models play a crucial role in understanding and predicting the dynamics of the world, which is essential for video generation. However, existing world models are confined to specific scenarios such as gaming or driving, limiting their ability to capture the complexity of general world dynamic environments. Therefore, we introduce WorldDreamer, a pioneering world model to foster a comprehensive comprehension of general world physics and motions, which significantly enhances the capabilities of video generation. Drawing inspiration from the success of large language models, WorldDreamer frames world modeling as an unsupervised visual sequence modeling challenge. This is achieved by mapping visual inputs to discrete tokens and predicting the masked ones. During this process, we incorporate multi-modal prompts to facilitate interaction within the world model. Our experiments show that WorldDreamer excels in generating videos across different scenarios, including natural scenes and driving environments. WorldDreamer showcases versatility in executing tasks such as text-to-video conversion, image-to-video synthesis, and video editing. These results underscore WorldDreamer's effectiveness in capturing dynamic elements within diverse general world environments.

A video generation model built on masked token prediction plus a Transformer with local attention over spatiotemporal patches, with cross attention to other modalities attached to it. As a result, it can be conditioned on images, text, video, and actions such as driving.
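The masked token prediction setup can be illustrated with a small data-preparation sketch: a random subset of the discrete visual tokens is replaced by a mask id, and the model is trained to recover the original tokens at those positions. `MASK_ID`, the fixed `mask_ratio`, and the uniform random choice of positions are assumptions made for this illustration; the paper's actual masking schedule may differ.

```python
import random
from typing import List, Tuple

MASK_ID = -1  # hypothetical id reserved for the [MASK] token


def mask_tokens(
    tokens: List[int],
    mask_ratio: float,
    rng: random.Random,
) -> Tuple[List[int], List[int]]:
    """Replace a random subset of tokens with MASK_ID.

    Returns the corrupted sequence and the sorted masked positions; the
    training targets are the original tokens at those positions.
    """
    # Mask at least one token and never more than the sequence length.
    n_mask = min(len(tokens), max(1, round(mask_ratio * len(tokens))))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    inputs = [MASK_ID if i in positions else t for i, t in enumerate(tokens)]
    return inputs, sorted(positions)
```

The loss would then be a cross-entropy over the masked positions only, with text, image, or action conditions entering through cross attention.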

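The phrase World Models inevitably stands out. Many people assume that training on video will provide very strong information about the world, and whether that actually holds will be another interesting thing to watch.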

#video-generation #multimodal